This Neural Network Built by Japanese Researchers Can ‘Read Minds’

It already seems a little like computers can read our minds; features like Google’s auto-complete, Facebook’s friend suggestions, and the targeted ads that appear while you’re browsing the web sometimes make you wonder, “How did they know?” For better or worse, it seems we’re slowly but surely moving in the direction of computers reading our minds for real, and a new study from researchers in Kyoto, Japan is an unequivocal step in that direction.

A team from Kyoto University used a deep neural network to read and interpret people’s thoughts. Sound crazy? This actually isn’t the first time it’s been done. The difference is that previous methods—and results—were simpler, deconstructing images based on their pixels and basic shapes. The new technique, dubbed “deep image reconstruction,” moves beyond binary pixels, giving researchers the ability to decode images that have multiple layers of color and structure.

“Our brain processes visual information by hierarchically extracting different levels of features or components of different complexities,” said Yukiyasu Kamitani, one of the scientists involved in the study. “These neural networks or AI models can be used as a proxy for the hierarchical structure of the human brain.”

The study lasted 10 months and involved three people who viewed images from three categories for varying lengths of time: natural phenomena (such as animals or people), artificial geometric shapes, and letters of the alphabet.

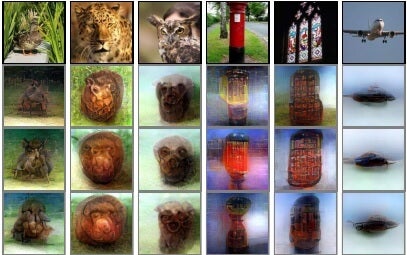

Reconstructions using the DGN (deep generator network). The three reconstructed images correspond to the three subjects.

The viewers’ brain activity was measured either while they were looking at the images or afterward. For the afterward condition, participants were simply asked to think about the images they’d been shown while their brain activity was recorded.

The recorded activity was then fed into a neural network that “decoded” the data and used it to generate its own interpretations of people’s thoughts.

In humans (and, actually, all mammals) the visual cortex is located at the back of the brain, in the occipital lobe, which is above the cerebellum. Activity in the visual cortex was measured using functional magnetic resonance imaging (fMRI), and the measured signals were then translated into hierarchical features of a deep neural network.

Starting from a random image, the method repeatedly adjusts that image’s pixel values so that the neural network’s features for the image become similar to the features decoded from brain activity.
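To make that optimization loop concrete, here is a minimal sketch in Python. It is not the Kyoto team’s code: a single toy layer (a `tanh` of a linear map) stands in for the deep neural network, and the “decoded” features are simply computed from a made-up image rather than from fMRI data. The names `features` and `reconstruct`, the sizes, and the learning rate are all assumptions chosen for illustration.

```python
import numpy as np

def features(image, weights):
    """Toy stand-in for one layer of a deep network: tanh of a linear map."""
    return np.tanh(weights @ image)

def reconstruct(target_features, weights, steps=1000, lr=0.05, seed=0):
    """Gradient descent on pixel values so features(image) approaches the target."""
    rng = np.random.default_rng(seed)
    # Start from a small random image (flattened to a 1-D pixel vector).
    image = rng.normal(scale=0.01, size=weights.shape[1])
    losses = []
    for _ in range(steps):
        feats = features(image, weights)
        diff = feats - target_features
        losses.append(0.5 * float(diff @ diff))
        # Gradient of 0.5 * ||tanh(W x) - t||^2 with respect to the pixels x.
        grad = weights.T @ (diff * (1.0 - feats ** 2))
        image = image - lr * grad
    return image, losses

# Hypothetical setup: 16 "pixels", 32 features, and a made-up target that
# plays the role of the features decoded from brain activity.
rng = np.random.default_rng(42)
weights = rng.normal(size=(32, 16)) / np.sqrt(16)
seen_image = rng.normal(size=16)
decoded_features = features(seen_image, weights)

image, losses = reconstruct(decoded_features, weights)
print(f"feature loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

The point of the sketch is only the shape of the procedure: the image itself is the thing being optimized, and the feature mismatch is the signal that steers it. A real system would use a pretrained deep network and features from several layers.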

Importantly, the team’s model was trained using only natural images (of people or nature), but it was able to reconstruct artificial shapes. This means the model truly ‘generated’ images based on brain activity, as opposed to matching that activity to existing examples.

Not surprisingly, the model did have a harder time trying to decode brain activity when people were asked to remember images, as compared to activity when directly viewing images. Our brains can’t remember every detail of an image we saw, so our recollections tend to be a bit fuzzy.

The reconstructed images from the study retain some resemblance to the original images viewed by participants, but mostly, they look like minimally detailed blobs. However, the technology’s accuracy is likely to improve, and its applications will increase accordingly.

Imagine “instant art,” where you could produce art just by picturing it in your head. Or what if an AI could record your brain activity as you’re asleep and dreaming, then re-create your dreams in order to analyze them? Last year, completely paralyzed patients were able to communicate with their families for the first time using a brain-computer interface.

There are countless creative and significant ways to use a model like the one in the Kyoto study. But brain-machine interfaces are also one of those technologies we can imagine having eerie, Black Mirror-esque consequences if not handled wisely. Neuroethicists have already outlined four new human rights we would need to implement to keep mind-reading technology from going sorely wrong.

Despite this, the Japanese team certainly isn’t alone in its efforts to advance mind-reading AI. Elon Musk famously founded Neuralink with the purpose of building brain-machine interfaces to connect people and computers. Kernel is working on making chips that can read and write neural code.

Whether it’s to recreate images, mine our deep subconscious, or give us entirely new capabilities, though, it’s in our best interest that mind-reading technology proceed with caution.

Image Credit: igor kisselev / Shutterstock.com

Vanessa has been writing about science and technology for eight years and was senior editor at SingularityHub. She's interested in biotechnology and genetic engineering, the nitty-gritty of the renewable energy transition, the roles technology and science play in geopolitics and international development, and countless other topics.
