What Global Challenges Will We Solve With Exascale Supercomputers?

Though more than seventy percent of Earth's surface is covered by water, only about three percent of the planet's water is fresh and drinkable, and most of that small share is trapped in glaciers or polar ice caps. Juxtapose the scarcity of natural drinking water with the disquieting fact that nearly a billion people still lack reliable access to clean water, and the world's oceans suddenly look a lot smaller. Indeed, for all its abundance, water poses a serious problem for many societies.

This global quandary has inspired the ambitious goal of making ocean water drinkable, but doing so is going to require a ton of innovation and processing power. Let's take a look at how the next generation of supercomputers might help solve our water challenges and more.

Researchers at the Lawrence Livermore National Laboratory believe the answer lies in carbon nanotubes (and a whole lot more, but let’s start here for now).

These microscopic cylinders make nearly ideal desalination filters: their channels are wide enough to let water molecules slip through but narrow enough to block the larger salt ions. The scale here is hard to imagine; a single nanotube is more than 10,000 times narrower than a human hair.
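At its simplest, the filtering idea described here is size exclusion: anything smaller than the channel gets through, anything larger does not. The Python sketch below illustrates that geometric picture, along with the hair-width comparison. Every size in it is an approximate or hypothetical value chosen for illustration (a 0.5 nm effective pore, rough hydrated-ion diameters, an 80-micrometre hair, a 1 nm tube), and real nanotube desalination also depends on effects such as ion charge and dehydration energy that this toy check ignores.

```python
# Toy geometric sieve for a nanotube filter. All sizes are approximate,
# illustrative assumptions in nanometres, not values from the LLNL research.
WATER_DIAMETER_NM = 0.28         # approx. diameter of a water molecule
HYDRATED_NA_DIAMETER_NM = 0.72   # approx. sodium ion with its water shell
HYDRATED_CL_DIAMETER_NM = 0.66   # approx. chloride ion with its water shell
PORE_DIAMETER_NM = 0.5           # hypothetical effective pore diameter


def passes(species_diameter_nm: float, pore_diameter_nm: float = PORE_DIAMETER_NM) -> bool:
    """Pure size exclusion: a species gets through only if it is smaller than the pore."""
    return species_diameter_nm < pore_diameter_nm


species = {
    "water": WATER_DIAMETER_NM,
    "Na+ (hydrated)": HYDRATED_NA_DIAMETER_NM,
    "Cl- (hydrated)": HYDRATED_CL_DIAMETER_NM,
}
for name, diameter in species.items():
    print(f"{name}: {'passes' if passes(diameter) else 'blocked'}")

# Scale check against a human hair (both widths are assumptions).
HAIR_WIDTH_NM = 80_000.0         # roughly 80 micrometres
NANOTUBE_WIDTH_NM = 1.0          # hypothetical nanotube diameter
ratio = HAIR_WIDTH_NM / NANOTUBE_WIDTH_NM
print(f"A hair is about {ratio:,.0f} times wider than the nanotube")
```

With these assumed sizes, water passes, both hydrated ions are blocked, and the hair comes out about 80,000 times wider than the tube, comfortably above the 10,000x figure.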

Bundle a few billion of these nanotubes together, and the result is a strikingly effective apparatus for turning seawater into drinking water. Granted, finding the optimal configuration for billions of microscopic cylinders is much easier said than done, and scientists hope computer simulation will be the next-generation method for finding it.

If researchers could efficiently test variations of nanotube filters, with options to specify parameters like tube width, water salinity, filtration time, and so on, the field would advance by leaps and bounds. Imagine having to run every experiment by hand, manipulating materials that can only be seen with an electron microscope. The complexity, inefficiency, and downright tedium of such an ordeal are evident, which makes fast software analysis all the more desirable.
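Testing filter variations in software boils down to a parameter sweep: run a model for every combination of settings, then keep the best result. The Python sketch below shows that pattern. The function `simulate_filter` and its formulas are hypothetical stand-ins invented for illustration, not real physics; in practice each call would be an expensive molecular simulation, which is exactly why the number of combinations drives the need for computing power.

```python
from itertools import product


def simulate_filter(pore_nm: float, salinity_g_per_l: float, filter_time_s: float) -> tuple[float, float]:
    """Hypothetical toy model returning (salt_rejection_fraction, relative_water_flow).

    The formulas are invented for illustration and are NOT physics.
    """
    # Toy assumption: pores wider than 0.6 nm start letting salt through.
    salt_rejection = max(0.0, min(1.0, 1.0 - 2.0 * (pore_nm - 0.6)))
    # Toy assumption: flow grows with pore area and time, and drops as salinity rises.
    water_flow = pore_nm ** 2 * filter_time_s / (1.0 + salinity_g_per_l / 35.0)
    return salt_rejection, water_flow


pore_widths_nm = [0.4, 0.5, 0.6, 0.8, 1.0]
salinities_g_per_l = [10, 35]   # brackish water vs. typical seawater (~35 g/L)
filter_times_s = [1.0, 10.0]

# Run every combination of parameters (a full grid sweep).
results = {
    (pore, sal, t): simulate_filter(pore, sal, t)
    for pore, sal, t in product(pore_widths_nm, salinities_g_per_l, filter_times_s)
}

# Keep only configurations that reject at least 99% of salt,
# then pick the one with the highest water flow.
acceptable = {cfg: r for cfg, r in results.items() if r[0] >= 0.99}
best_config = max(acceptable, key=lambda cfg: acceptable[cfg][1])
print(f"Ran {len(results)} simulations; best config: {best_config}")
```

This five-by-two-by-two grid is only 20 runs; every extra parameter or finer step multiplies the count, which is where a much faster machine would pay off.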

Enter exascale computing, the next step in scalable information processing.

Supercomputers have grown steadily more powerful over the years. The last much-lauded boundary (petascale) was crossed in 2008, when the world's fastest supercomputer topped a quadrillion calculations per second. Next up is exascale: a machine comparable to about 50 million desktop workstations daisy-chained together, performing a quintillion calculations per second. For reference, it would outperform today's fastest supercomputer, the Sunway TaihuLight, by a factor of roughly 8 to 11, depending on whether you compare against TaihuLight's theoretical peak or its measured benchmark speed.
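The figures in this paragraph are easy to check with a few lines of arithmetic. The Python sketch below assumes TaihuLight's published TOP500 figures of about 125.4 petaflops theoretical peak and 93.0 petaflops on the Linpack benchmark. It shows that the desktop comparison implies roughly 20 gigaflops per workstation, and that the exascale speedup over TaihuLight is about 8x or about 11x depending on which of those two figures you use.

```python
# Back-of-the-envelope comparison of the speeds quoted above.
PETAFLOPS = 1e15
EXAFLOPS = 1e18

exascale = 1 * EXAFLOPS
taihulight_peak = 125.44 * PETAFLOPS     # theoretical peak (TOP500 figure, assumed here)
taihulight_linpack = 93.01 * PETAFLOPS   # measured Linpack result (TOP500 figure, assumed here)
desktops = 50_000_000                     # the article's desktop comparison

per_desktop = exascale / desktops
print(f"Implied speed per desktop: {per_desktop / 1e9:.0f} gigaflops")
print(f"Exascale vs. petascale: {exascale / PETAFLOPS:.0f}x")
print(f"Exascale vs. TaihuLight peak: {exascale / taihulight_peak:.1f}x")
print(f"Exascale vs. TaihuLight Linpack: {exascale / taihulight_linpack:.1f}x")
```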

The impact exascale computing could have on problems like desalination is exciting, and engineers are working tirelessly to build the first machine of its kind. In fact, the US Department of Energy has launched its own Exascale Computing Project and has awarded grants to six companies (AMD, Cray, Hewlett Packard Enterprise, IBM, Intel, and NVIDIA) to support exascale research and development.

Scientists have placed enormous faith in the technology. Progress in fields like quantum mechanics, wind energy, weather prediction, and now seawater desalination often stalls for lack of computing resources. Even the world's fastest machines struggle to work their way through petabytes of data.

Exascale computing may prove a necessary tool not only for producing a cheaper, more efficient saltwater filter but also for accelerating a host of other remarkable research.

For consumers and the general public, access to an exascale machine would most likely come through the cloud. For instance, Google recently announced its Cloud TPU architecture, which would let users tap 11.5 petaflops of computing power to accelerate the training of their machine learning models. Releases like this show how technology giants can keep upgrading their cloud offerings. Should exascale become the standard in the next handful of years, anyone with a stable internet connection could access a Google or AWS cluster and do some serious number crunching.

Alright, back to fresh water.

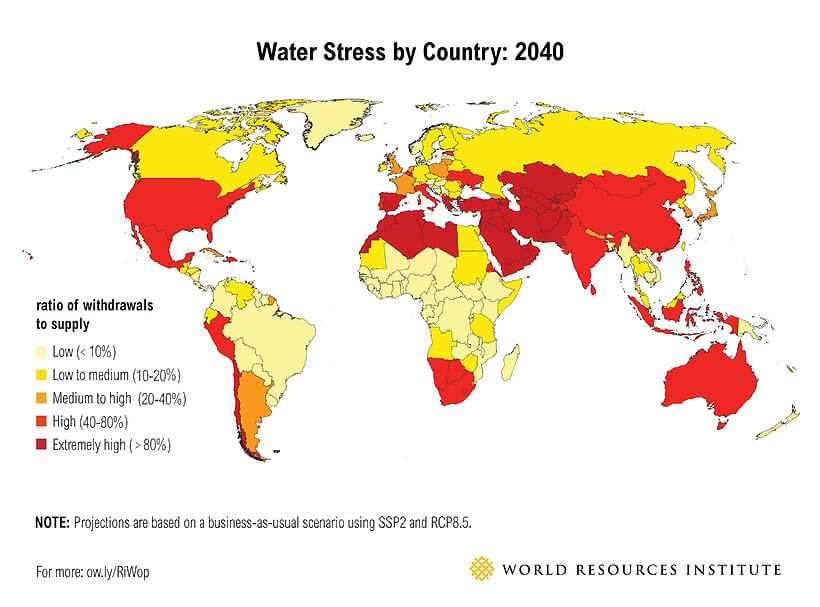

Studies show that the demand for fresh water is expected to outpace the global supply of it by nearly 40 percent in 2030 (PDF). Several parts of the world are plagued by the accelerating effects of climate change, and such setbacks are only aggrandized by other polarizing issues like bitter regulatory debates or crushing poverty.

A telling water stress map that relates water shortages to their incident supply. Image Credit: World Resources Institute

Exascale computing could enable longer, more detailed simulations that compress weeks of computation into a matter of minutes. Researchers could then make the necessary adjustments, fine-tune prototypes, and even secure a commercial license to manufacture years earlier than their previous timeline suggested.
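The idea of turning weeks of work into minutes follows from the thousandfold jump from petascale to exascale. The Python sketch below does that arithmetic for a hypothetical two-week simulation under the idealized assumption of perfect scaling; the function name `ideal_runtime_minutes` is illustrative, and real scientific codes rarely scale perfectly, so actual savings would be smaller.

```python
MINUTES_PER_WEEK = 7 * 24 * 60


def ideal_runtime_minutes(runtime_minutes: float, old_flops: float, new_flops: float) -> float:
    """Runtime on a faster machine, assuming the job scales perfectly with peak speed."""
    return runtime_minutes * old_flops / new_flops


PETASCALE = 1e15
EXASCALE = 1e18
two_week_job = 2 * MINUTES_PER_WEEK   # 20,160 minutes on a petascale machine (hypothetical job)
exascale_minutes = ideal_runtime_minutes(two_week_job, PETASCALE, EXASCALE)
print(f"Two weeks at petascale is about {exascale_minutes:.0f} minutes at exascale")
```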

The benefits of these advances don't stop at desalination, though: supernova research, detailed weather prediction, and even atomic-scale particle simulation are among the front-runners of the exascale project. The next step in supercomputing is promising, its potential impact is awe-inspiring, and it could bring incredible change for generations to come.

Image Credit: portal gda / Flickr

Nikhil is an EECS student at UC Berkeley who loves to share his passion for technology with others. In addition to contributing to HuffPost, he uploads YouTube videos on groundbreaking new technologies to an audience of 20,000.