Run a supercomputer every second of every day, and eventually its storage will fill up, its speed decrease, and its components burn out.

Yet our brains run with astonishing efficacy nearly every moment of our lives. For 40 years, scientists have wondered how delicate biological components, strung together in a seemingly chaotic heap, can maintain continuous information storage over decades. Even as individual neurons die, our neural networks readjust, fine-tuning their connections to sustain optimal data transmission. Unlike a game of telephone with messages that increasingly deteriorate, somehow our neurons self-assemble into a “magical” state, where they can renew almost every single component of their interior protein makeup, yet still hold onto the memories stored within.

This week, a team from Washington University in St. Louis combined neural recordings from rats with computer modeling to tackle one of the largest mysteries of the brain: why, despite noisy components, it’s so damn powerful. By analyzing firing patterns from hundreds of neurons over days, the team found evidence that supports a type of “computational regime” that may underlie every thought and behavior that naturally emerges from electrical sparks in the brain, including consciousness.

The answer, as it happens, has roots in an abstruse and controversial idea in theoretical physics: criticality. For one of the first times, the team observed an abstract “pull” that lures neural networks back into an optimal functional state, so they never stray far from their dedicated “set points” determined by evolution. Even more mind-blowing? That attractive force somehow emerges from a hidden universe of physical laws buried inside the architecture of entire neural networks, without any single neuron dictating its course.

“It’s an elegant idea: that the brain can tune an emergent property to a point neatly predicted by the physicists,” said lead author Dr. Keith Hengen.

A Balanced Point

“Attractor point” sounds like pickup artist lingo, but it’s a mathematical way to describe balance in natural forces (cue Star Wars music). An easy-to-imagine example is a coiled spring, like those inside mattresses: you can stretch or crush them over years, but they generally snap back to their initial state.

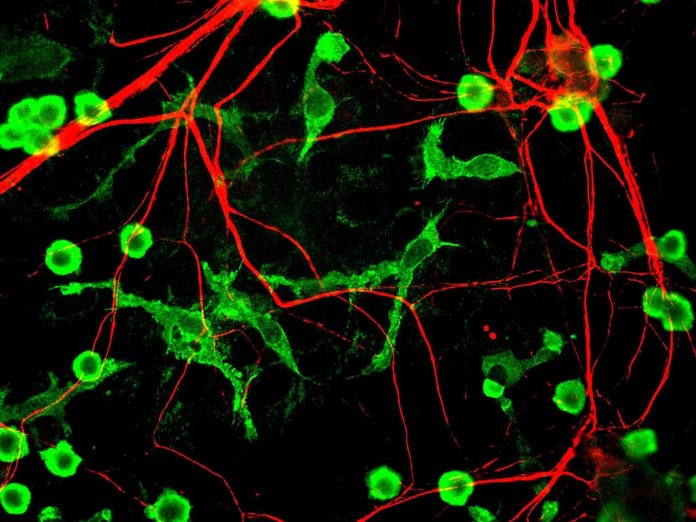

That initial state is an attractor. A similar principle, though far more abstract, guides neural activity, especially the main drivers of the brain’s communication: inhibitory and excitatory neurons. Think of them as the yin and yang of electrical activity in the brain. Both send “spikes” of electricity to their neighbors, with inhibitory neurons dampening the transmission and excitatory ones amplifying the message. The more signals come in, the more spikes they send out—something called a “firing rate,” kind of like the beats-per-minute music of brain activity.

Yet even individual neurons have a capped level of activation. Normally, they can never fire so much it messes up their physical structures. In other words, neurons are self-limiting. On a wider scale, neural networks also have a global “tuning knob” that works on the majority of synapses, mushroom-shaped structures protruding from neural branches where neurons talk to each other.

If the network gets too excited, the knob dials down to “quiet” transmission signals before the brain over-activates to a state of chaos (seeing things that aren’t there, such as in schizophrenia). But the dial also prevents neural networks from being too lackadaisical, as can happen in other neurological disorders including dementia.
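To make the tuning knob idea concrete, here is a minimal sketch of a negative-feedback loop in Python. It is a toy illustration, not the model used in the study: the function name `homeostatic_gain`, the single `gain` value standing in for the global knob, and the `target`, `rate`, and `steps` parameters are all assumptions made up for this example.

```python
def homeostatic_gain(drive, target=1.0, rate=0.1, steps=200):
    """Toy feedback loop (illustrative assumption, not the study's model).

    `drive` is a constant input to the network, `gain` plays the role of
    the global tuning knob, and `target` is the activity set point.
    Returns the activity level recorded at every step.
    """
    gain = 1.0
    history = []
    for _ in range(steps):
        activity = gain * drive
        history.append(activity)
        # Too much activity lowers the gain; too little raises it.
        gain += rate * (target - activity)
    return history


# An over-excited network (strong drive) and a sluggish one (weak drive)
# both drift back toward the same set point.
excited = homeostatic_gain(drive=3.0)
sluggish = homeostatic_gain(drive=0.3)
print(round(excited[0], 2), round(excited[-1], 2))
print(round(sluggish[0], 2), round(sluggish[-1], 2))
```

With these hypothetical settings, both runs start far from the set point and end close to it, which is the sense in which the set point acts as an attractor. The loop only settles when `rate * drive` stays below 2; beyond that, the correction overshoots and the activity swings ever more wildly instead of converging.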

“When neurons combine, they actively seek out a critical regime,” explained Hengen. Somehow groups of interlinked neurons achieve a state of activity right at the border of chaos and quiescence, ensuring they have an optimally high level of information storage and processing, without tipping over into an avalanche of activity and subsequent burnout.
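One common textbook way to picture this border is a branching process, sketched below in Python. This is an assumption brought in for illustration rather than anything taken from the study: each active unit can switch on up to two neighbors, and `sigma` (a hypothetical parameter name) is the average number of units it activates. Below 1, activity fizzles out; above 1, it snowballs; at 1, it sits on the edge.

```python
import random


def avalanche_size(sigma, rng, max_size=1000):
    """Total units activated by one seed unit in a toy branching process.

    Each active unit has two potential targets, each switched on with
    probability sigma / 2, so it activates `sigma` units on average.
    `max_size` is an arbitrary cap that stops runaway cascades.
    """
    active = 1
    total = 1
    while active > 0 and total < max_size:
        next_active = 0
        for _ in range(active):
            for _ in range(2):
                if rng.random() < sigma / 2:
                    next_active += 1
        active = next_active
        total += active
    return total


def mean_avalanche_size(sigma, trials=500, seed=0):
    """Average cascade size over many trials, using a fixed random seed."""
    rng = random.Random(seed)
    return sum(avalanche_size(sigma, rng) for _ in range(trials)) / trials


for sigma in (0.5, 1.0, 1.5):
    print(sigma, mean_avalanche_size(sigma))
```

In this toy setup, the quiet regime (`sigma = 0.5`) produces tiny cascades, the runaway regime (`sigma = 1.5`) mostly hits the cap, and the critical regime (`sigma = 1.0`) produces a wide mix of small and large cascades.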

Eyes Wide Shut

Understanding how the brain reaches criticality is hugely important, not just for preserving the brain’s abilities with age and disease, but also for building better brain-mimicking machines. So far, the team said, work on criticality has been theoretical; they wanted to hunt down actual signals in the brain.

Hengen’s team took advantage of modern high-density electrodes, which can record from hundreds of neurons over a period of days. They set off with two questions: one, can the cortex (the outermost brain region involved in higher cognitive functions) maintain brain activity at a critical point? Two, is it because of individual neurons, which tend to constrain their own activity levels?

Here comes the fun part: rats with pirate-eye patches. Blocking incoming light signals in one eye causes massive reorganization of neural activity over time, and the team monitored these changes over the course of a week. First, in rats running around their cages with implanted electrodes, the team recorded their neural activity while the animals had both eyes open. Using a mathematical method to parse the data into “neuronal avalanches” (cascades of electrical spikes that remain relatively local in a network), the team found that the visual cortex hovered on the brink of criticality, regardless of whether it was day or night. Question one, solved.
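For a feel of what parsing recordings into avalanches can look like, here is a minimal Python sketch. It uses a common convention from the criticality literature, which the article does not confirm is the team’s exact method: spikes are counted in short time bins, and an avalanche is any run of non-empty bins with an empty bin on either side. The function name and the sample counts are made up for illustration.

```python
from typing import Dict, List


def find_avalanches(binned_counts: List[int]) -> List[Dict[str, int]]:
    """Split per-bin spike counts into avalanches.

    Assumed definition: an avalanche is a run of consecutive bins that
    each contain at least one spike, bounded by empty bins. Its size is
    the total spike count and its duration is the number of bins.
    """
    avalanches = []
    size = 0
    duration = 0
    for count in binned_counts:
        if count > 0:
            # Still inside a cascade: keep accumulating.
            size += count
            duration += 1
        elif duration > 0:
            # An empty bin closes the current cascade.
            avalanches.append({"size": size, "duration": duration})
            size = 0
            duration = 0
    if duration > 0:
        # The recording ended in the middle of a cascade.
        avalanches.append({"size": size, "duration": duration})
    return avalanches


# Hypothetical spike counts summed across all recorded neurons, one per time bin.
counts = [0, 2, 3, 0, 0, 1, 0, 4, 1, 1, 0]
print(find_avalanches(counts))
```

On the made-up counts above, this yields three avalanches of sizes 5, 1, and 6. In the criticality literature, a network is typically considered near the critical point when avalanche sizes follow something close to a power law: many small cascades, a few very large ones, and no single typical size.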

The team next occluded a single eye in their rats. After a little more than a day, neurons that carry information from the pirate patch-eye went quiet. Yet by day five, the neurons rebounded back in activity to their “attractor” baseline—exactly what the team predicted.

But surprisingly, network criticality didn’t follow a similar timeline. Almost immediately after blocking the eye, scientists saw a massive shift in their network state away from criticality—that is, away from optimal computation.

“It seems that as soon as there’s a mismatch between what the animal expects and what it’s getting through that eye, the computational dynamic falls apart,” said Hengen.

In two days, however, the network inched back to a near-critical state, long before individual neurons recovered their activity levels. In other words, maximal computation in the brain doesn’t depend on individual neurons also working at their maximum; rather, even with imperfect components, neural networks naturally converge towards criticality, or optimal solutions.

It’s an emergent property at its finest: the network as a whole computes more than the sum of its individual neurons. “[It’s] what we [can] learn from lots of electrodes,” commented Dr. Erik Herzog, a neuroscientist at Washington University who was not involved in the study.

Bring It Down

Emergent phenomena, such as complex thought and consciousness, are often relegated to philosophical discussion: are our minds more than electrical firing? Is there some special, abstract property such as qualia that emerges from measurable, physical laws?

Rather than resorting to hand-waving theories, the team took a more concrete route: they hunted down the biological bases of criticality. Using computational methods, they tried a handful of different models of the visual cortex, playing around with various parameters until they found a model that behaved the same way as their one-eyed rats.

The team said they explored over 400 combinations of different parameters, and less than 0.5 percent of the models matched their observations. The successful models had one thing in common: they all pointed to inhibitory connections as the crux of achieving criticality.

In other words, optimal computation in the brain isn’t because of magic fairy dust; the architecture of inhibitory connections is a foundational root upon which mind-bending abstract physical principles, such as criticality, can grow and guide brain function.

That’s enormously good news for deep learning and other AI models. Most currently employ few inhibitory connections, and the study immediately points at a way to move towards criticality in artificial neural networks. Larger storage and better data transmission—who doesn’t want that? Going even further, to some, criticality may even present a way towards nailing down consciousness in our brains and potentially in machines, though the idea is controversial.

More immediately, the team believes that criticality can be used to examine neural networks in neurological disorders. Impaired self-regulation can result in Alzheimer’s, epilepsy, autism, and schizophrenia, said Hengen. Scientists have long known that many of our most troubling brain disorders stem from network imbalances, but pinpointing a measurable, exact cause is difficult. Thanks to criticality, we may finally have a way to peek inside the hidden world of physical laws in our brains, and tune them towards health.

“It makes intuitive sense, that evolution selected for the bits and pieces that give rise to an optimal solution [in brain computation]. But time will tell. There’s a lot of work to be done,” said Hengen.

Image Credit: Wikimedia Commons