DeepMind AI One-Ups Mathematicians at a Calculation Crucial to Computing


DeepMind has done it again.

After solving a fundamental challenge in biology—predicting protein structure—and untangling the mathematics of knot theory, it’s taken aim at a fundamental computing process embedded inside thousands of everyday applications. From parsing images to modeling weather or even probing the inner workings of artificial neural networks, the AI could theoretically speed up calculations across a range of fields, increasing efficiency while cutting energy use and costs.

But more impressive is how the team did it. The record-breaking algorithm, dubbed AlphaTensor, is a spinoff of AlphaZero, which famously trounced human players in chess and Go.

“Algorithms have been used throughout the world’s civilizations to perform fundamental operations for thousands of years,” wrote co-authors Drs. Matej Balog and Alhussein Fawzi at DeepMind. “However, discovering algorithms is highly challenging.”

AlphaTensor blazes a trail to a new world where AI designs programs that outperform anything humans engineer, while simultaneously improving its own machine “brain.”

“This work pushes into uncharted territory by using AI for an optimization problem that people have worked on for decades…the solutions that it finds can be immediately developed to improve computational run times,” said Dr. Federico Levi, a senior editor at Nature, which published the study.

Enter the Matrix Multiplication

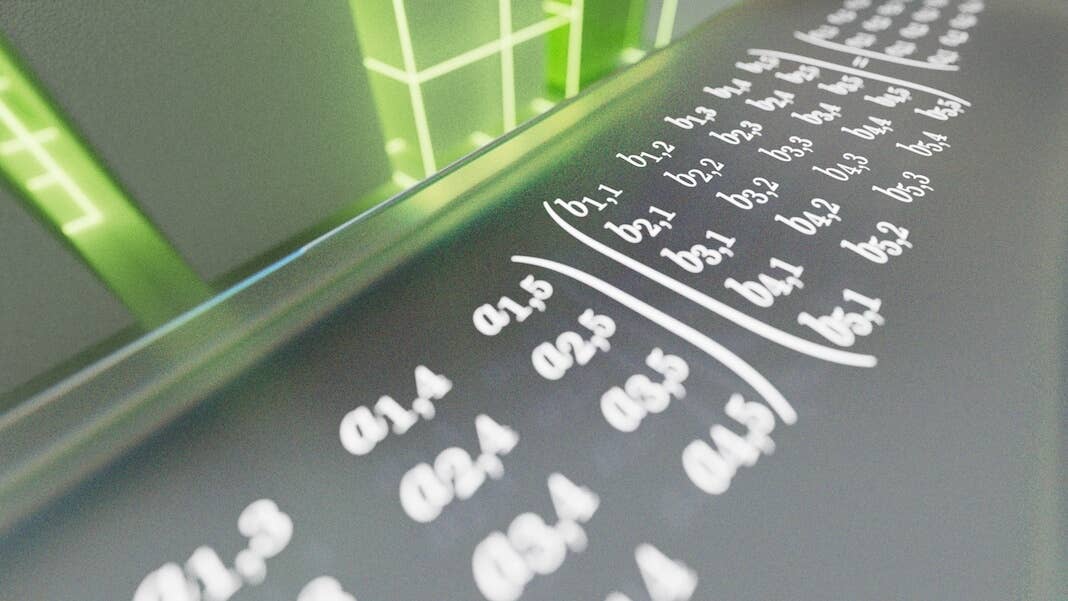

The problem AlphaTensor confronts is matrix multiplication. If you’re suddenly envisioning rows and columns of green numbers scrolling down your screen, you’re not alone. Roughly speaking, a matrix is kind of like that—a grid of numbers that digitally represents data of your choosing. It could be pixels in an image, the frequencies of a sound clip, or the look and actions of characters in video games.

Matrix multiplication takes two grids of numbers and multiplies one by the other. It’s a calculation often taught in high school but is also critical for computing systems. Here, rows of numbers in one matrix are multiplied with columns in another. The results generate an outcome—for example, a command to zoom in or tilt your view of a video game scene. Although these calculations operate under the hood, anyone using a phone or computer depends on their results every single day.
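To make the row-times-column recipe concrete, here is a minimal Python sketch of the schoolbook method. The function name `matmul_naive` and the sample numbers are illustrative assumptions rather than anything from the study. The sketch also counts how many individual multiplications it performs.

```python
def matmul_naive(a, b):
    """Schoolbook multiplication of an n x m matrix by an m x p matrix.

    Returns the product and the number of scalar multiplications used.
    """
    n, m, p = len(a), len(b), len(b[0])
    if any(len(row) != m for row in a):
        raise ValueError("columns of the first matrix must match rows of the second")
    result = [[0] * p for _ in range(n)]
    mults = 0
    for i in range(n):          # each row of the first matrix
        for j in range(p):      # each column of the second matrix
            for k in range(m):  # pair up entries along the row and column
                result[i][j] += a[i][k] * b[k][j]
                mults += 1
    return result, mults


grid, mults = matmul_naive([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(grid)   # [[19, 22], [43, 50]]
print(mults)  # 8
```

For two two-by-two grids, the schoolbook method needs eight multiplications. For two n-by-n grids the count is n × n × n, which is why large matrices get expensive quickly.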

You can see how the problem can get extremely difficult, extremely fast. Multiplying large matrices is incredibly energy- and time-intensive. Each number pair has to be multiplied individually to construct a new matrix. As the matrices grow, the problem rapidly becomes untenable, even more so than predicting the best chess or Go moves. Some experts estimate there are more ways to solve matrix multiplication than the number of atoms in the universe.

Back in 1969, Volker Strassen, a German mathematician, showed there are ways to cut corners, reducing the number of multiplications needed to multiply two two-by-two matrices from eight to seven. It might not sound impressive, but Strassen’s method showed it’s possible to beat the long-held standard procedures, or algorithms, for matrix multiplication. His method, the Strassen algorithm, has reigned as the most efficient approach for over 50 years.
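Strassen's shortcut for the two-by-two case can be written out in a few lines. The sketch below uses the standard textbook form of his seven products. The function name `strassen_2x2` and the sample numbers are illustrative assumptions.

```python
def strassen_2x2(a, b):
    """Multiply two 2 x 2 matrices with seven scalar multiplications."""
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b

    # Seven products of sums and differences replace the usual eight products.
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)

    # Additions and subtractions recombine the products into the result.
    return [
        [m1 + m4 - m5 + m7, m3 + m5],
        [m2 + m4, m1 - m2 + m3 + m6],
    ]


print(strassen_2x2([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

The trade is one fewer multiplication for more additions and subtractions. Multiplications tend to dominate the cost once the entries are themselves large blocks of a bigger matrix, so the saving compounds when the trick is applied repeatedly.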

But what if there are even more efficient methods? “Nobody knows the best algorithm for solving it,” Dr. François Le Gall at Nagoya University in Japan, who was not involved in the work, told MIT Technology Review. “It’s one of the biggest open problems in computer science.”

AI Chasing Algorithms

If human intuition is faltering, why not tap into a mechanical mind?

In the new study, the DeepMind team turned matrix multiplication into a game. Similar to its predecessor AlphaZero, AlphaTensor uses deep reinforcement learning, a machine learning method inspired by the way biological brains learn. Here, an AI agent (often an artificial neural network) interacts with its environment to solve a multistep problem. If it succeeds, it earns a “reward”—that is, the AI’s network parameters are updated so it’s more likely to succeed again in the future.

It’s like learning to flip a pancake. Lots will initially fall on the floor, but eventually your neural networks will learn the arm and hand movements for a perfect flip.

The training ground for AlphaTensor is a sort of 3D board game. It’s essentially a one-player puzzle roughly similar to Sudoku. The AI must multiply grids of numbers in the fewest steps possible, while choosing from a myriad of allowable moves—over a trillion of them.
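A rough sketch can show what such a board might look like. It assumes the formulation described in the AlphaTensor paper: the board is a 3D grid of numbers encoding which products of entries feed which output entry, each move subtracts one simple "rank-one" piece, and the game is won when the board is all zeros. The number of moves equals the number of multiplications in the resulting algorithm. All names below are hypothetical, and the indexing convention is an assumption made for this example rather than DeepMind's code.

```python
from itertools import product


def matmul_tensor(n):
    """Board for n x n matrices: entry [a][b][c] is 1 when A-entry a times
    B-entry b contributes to C-entry c (entries are flattened row by row)."""
    size = n * n
    board = [[[0] * size for _ in range(size)] for _ in range(size)]
    for i, j, k in product(range(n), repeat=3):
        board[i * n + k][k * n + j][i * n + j] = 1
    return board


def naive_moves(n):
    """One move per scalar product in the schoolbook method."""
    size = n * n
    moves = []
    for i, j, k in product(range(n), repeat=3):
        u, v, w = [0] * size, [0] * size, [0] * size
        u[i * n + k], v[k * n + j], w[i * n + j] = 1, 1, 1
        moves.append((u, v, w))
    return moves


# Strassen's seven products written as moves (u, v, w): u picks a combination
# of A entries, v a combination of B entries, w the C entries that receive it.
STRASSEN_MOVES = [
    ((1, 0, 0, 1), (1, 0, 0, 1), (1, 0, 0, 1)),
    ((0, 0, 1, 1), (1, 0, 0, 0), (0, 0, 1, -1)),
    ((1, 0, 0, 0), (0, 1, 0, -1), (0, 1, 0, 1)),
    ((0, 0, 0, 1), (-1, 0, 1, 0), (1, 0, 1, 0)),
    ((1, 1, 0, 0), (0, 0, 0, 1), (-1, 1, 0, 0)),
    ((-1, 0, 1, 0), (1, 1, 0, 0), (0, 0, 0, 1)),
    ((0, 1, 0, -1), (0, 0, 1, 1), (1, 0, 0, 0)),
]


def play(board, moves):
    """Apply each move by subtracting its rank-one piece from the board."""
    size = len(board)
    for u, v, w in moves:
        for a, b, c in product(range(size), repeat=3):
            board[a][b][c] -= u[a] * v[b] * w[c]
    return board


def is_solved(board):
    return all(x == 0 for plane in board for row in plane for x in row)


print(len(naive_moves(2)), is_solved(play(matmul_tensor(2), naive_moves(2))))
print(len(STRASSEN_MOVES), is_solved(play(matmul_tensor(2), STRASSEN_MOVES)))
```

Both sequences clear the two-by-two board, the schoolbook one in eight moves and Strassen's in seven. Finding a shorter winning sequence for a larger board amounts to finding a faster multiplication algorithm.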

These allowable moves were meticulously designed into AlphaTensor. At a press briefing, co-author Dr. Alhussein Fawzi explained: “Formulating the space of algorithmic discovery is very intricate…even harder is, how can we navigate in this space.”

In other words, when faced with a mind-boggling array of options, how can we narrow them down to improve our chances of finding the needle in the haystack? And how can we best strategize to get to the needle without digging through the entire haystack?

One trick the team incorporated into AlphaTensor is a method called tree search. Rather than, metaphorically speaking, randomly digging through the haystack, here the AI probes “roads” that could lead to a better outcome. The intermediate learnings then help the AI plan its next move to boost the chances for success. The team also showed the algorithm samples of successful games, like teaching a child the opening moves of chess. Finally, once the AI discovered valuable moves, the team allowed it to reorder those operations for more tailored learning in search of a better result.

Breaking New Ground

AlphaTensor played well. In a series of tests, the team challenged the AI to find the most effective solutions for matrices up to five-by-five—that is, with five numbers each in a row or column.

The algorithm rapidly rediscovered Strassen’s original hack, but then surpassed all solutions previously devised by the human mind. When the team tested it on matrices of different sizes, AlphaTensor found more efficient solutions for over 70 of them. “In fact, AlphaTensor typically discovers thousands of algorithms for each size of matrix,” the team said. “It’s mind boggling.”

In one case, multiplying a four-by-five matrix with a five-by-five one, the AI slashed the previous record of 80 individual multiplications to only 76. It also shone on larger matrices, reducing the number of computations needed for two eleven-by-eleven matrices from 919 to 896.
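For a sense of scale, a little arithmetic compares these records with the schoolbook method. The helper names are illustrative assumptions. The schoolbook counts follow from the rows × inner dimension × columns rule, and the record figures are the ones quoted above.

```python
def naive_multiplications(rows_a, inner, cols_b):
    """Scalar multiplications used by the schoolbook method."""
    return rows_a * inner * cols_b


def percent_saved(old, new):
    """Relative reduction in multiplications, as a percentage."""
    return 100 * (old - new) / old


# The four-by-five and five-by-five pair.
rectangular_naive = naive_multiplications(4, 5, 5)
rectangular_gain = percent_saved(80, 76)

# Two eleven-by-eleven matrices.
square_naive = naive_multiplications(11, 11, 11)
square_gain = percent_saved(919, 896)

print(rectangular_naive, f"{rectangular_gain:.1f}%")  # 100 5.0%
print(square_naive, f"{square_gain:.1f}%")            # 1331 2.5%
```

The gains over the previous records are a few percent per multiplication step. They matter because such steps are repeated an enormous number of times in large computations.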

Proof-of-concept in hand, the team turned to practical use. Computer chips are often designed to optimize different computations—GPUs for graphics, for example, or AI chips for machine learning—and matching an algorithm with the best-suited hardware increases efficiency.

Here, the team used AlphaTensor to find algorithms for two popular chips in machine learning: the NVIDIA V100 GPU and Google TPU. Altogether, the AI-developed algorithms boosted computational speed by up to 20 percent.

It’s hard to say whether the AI can also speed up smartphones, laptops, or other everyday devices. However, “this development would be very exciting if it can be used in practice,” said MIT’s Dr. Virginia Williams. “A boost in performance would improve a lot of applications.”

The Mind of an AI

Despite AlphaTensor trouncing the latest human record for matrix multiplication, the DeepMind team can’t yet explain why.

“It has got this amazing intuition by playing these games,” said DeepMind scientist and co-author Dr. Pushmeet Kohli at a press briefing.

Evolving algorithms also doesn’t have to be human versus machine.

While AlphaTensor is a stepping stone towards faster algorithms, even faster ones could exist. “Because it needs to restrict its search to algorithms of a specific form, it could miss other types of algorithms that might be more efficient,” Balog and Fawzi wrote.

Perhaps an even more intriguing path would combine human and machine intuition. “It would be nice to figure out whether this new method actually subsumes all the previous ones, or whether you can combine them and get something even better,” said Williams. Other experts agree. With a wealth of algorithms at their disposal, scientists can begin dissecting them for clues to what made AlphaTensor’s solutions tick, paving the way for the next breakthrough.

Image Credit: DeepMind

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.
