Self-driving cars are taking longer to arrive on our roads than we thought they would. Auto industry experts and tech companies predicted they’d be here by 2020 and go mainstream by 2021. But it turns out that putting cars on the road without drivers is a far more complicated endeavor than initially envisioned, and we’re still inching very slowly towards a vision of autonomous individual transport.

But the extended timeline hasn’t discouraged researchers and engineers, who are hard at work figuring out how to make self-driving cars efficient, affordable, and most importantly, safe. To that end, a research team from the University of Michigan recently had a novel idea: expose driverless cars to terrible drivers. They described their approach in a paper published last week in Nature.

Self-driving algorithms may not find it too hard to master the basics of operating a vehicle, but what throws them (and humans) is egregious behavior from other drivers and random hazardous scenarios: a cyclist suddenly veers into the middle of the road, a child runs in front of a car to retrieve a toy, or an animal trots right into your headlights out of nowhere.

Luckily these aren’t too common, which is why they’re considered edge cases: rare occurrences that pop up when you’re not expecting them. Edge cases account for a lot of the risk on the road, but they’re hard to categorize or plan for because drivers are unlikely to encounter them. Human drivers are often able to react to these scenarios in time to avoid fatalities, but teaching algorithms to do the same is a tall order.

As Henry Liu, the paper’s lead author, put it, “For human drivers, we might have…one fatality per 100 million miles. So if you want to validate an autonomous vehicle to safety performances better than human drivers, then statistically you really need billions of miles.”
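To see roughly where a figure like that comes from, here is a back-of-the-envelope sketch in Python. It is not taken from the paper: it assumes fatalities follow a simple Poisson model, uses the one-per-100-million-miles baseline from Liu's quote, and the function names and the 95 percent and 10 percent thresholds are illustrative choices.

```python
import math

# Assumed human baseline from the quote: about one fatality per 100 million miles.
HUMAN_FATALITY_RATE = 1 / 100_000_000  # fatalities per mile


def miles_with_zero_fatalities(rate_per_mile, confidence):
    """Fatality-free miles needed to conclude, at the given confidence,
    that the true rate is below rate_per_mile (assumes a Poisson model)."""
    # P(no fatalities in n miles) = exp(-rate * n) must be <= 1 - confidence.
    return -math.log(1 - confidence) / rate_per_mile


def miles_for_relative_error(rate_per_mile, relative_error):
    """Miles needed to estimate a rate near rate_per_mile to within the given
    relative standard error; a Poisson count k has relative error 1/sqrt(k)."""
    events_needed = 1 / relative_error ** 2
    return events_needed / rate_per_mile


print(f"{miles_with_zero_fatalities(HUMAN_FATALITY_RATE, 0.95):.3g} miles")
print(f"{miles_for_relative_error(HUMAN_FATALITY_RATE, 0.10):.3g} miles")
```

Under these assumptions, merely matching the human rate takes roughly 300 million fatality-free miles, and pinning the rate down to within 10 percent takes about 10 billion miles, which is consistent with the "billions of miles" Liu describes.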

Rather than driving billions of miles to build up an adequate sample of edge cases, why not cut straight to the chase and build a virtual environment that’s full of them?

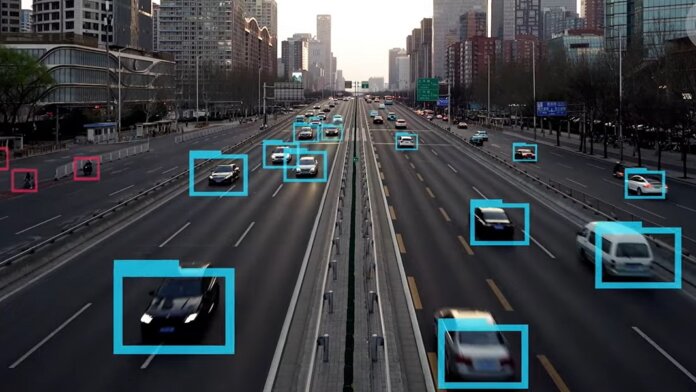

That’s exactly what Liu’s team did. They built a virtual environment filled with cars, trucks, deer, cyclists, and pedestrians. Their test tracks—both highway and urban—used augmented reality to combine simulated background vehicles with physical road infrastructure and a real autonomous test car, with the augmented reality obstacles being fed into the car’s sensors so the car would react as if they were real.

The team skewed the training data to focus on dangerous driving, calling the approach “dense deep-reinforcement-learning.” The situations the car encountered weren’t pre-programmed but were generated by the AI, which learned as it went along how to better test the vehicle.

The system learned to identify hazards (and filter out non-hazards) far faster than conventionally trained self-driving algorithms. The team wrote that their AI agents were able to “accelerate the evaluation process by multiple orders of magnitude, 10^3 to 10^5 times faster.”
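Why would concentrating on dangerous situations speed things up so much? The toy Python sketch below gives the intuition using plain importance sampling, a much simpler technique than the team's dense deep reinforcement learning, and it is not their method. Every number in it (the hazard probability, the crash probability, the oversampling rate) is a made-up assumption, as are the function names.

```python
import random


def naive_estimate(p_hazard, p_crash_given_hazard, n, rng):
    """Estimate the crash rate by sampling episodes at the natural hazard rate."""
    crashes = 0
    for _ in range(n):
        hazard = rng.random() < p_hazard
        if hazard and rng.random() < p_crash_given_hazard:
            crashes += 1
    return crashes / n


def importance_estimate(p_hazard, q_hazard, p_crash_given_hazard, n, rng):
    """Estimate the same crash rate while oversampling hazards at rate q_hazard.

    Each crash is reweighted by p_hazard / q_hazard so the estimate stays
    unbiased with respect to the natural hazard rate.
    """
    weight = p_hazard / q_hazard
    total = 0.0
    for _ in range(n):
        hazard = rng.random() < q_hazard
        if hazard and rng.random() < p_crash_given_hazard:
            total += weight
    return total / n


# Hypothetical numbers: hazards in 1 of 10,000 episodes, and the car
# crashes in 10% of them, so the true crash rate is 1e-5 per episode.
P_HAZARD, P_CRASH = 1e-4, 0.1
rng = random.Random(0)

print("true rate:      ", P_HAZARD * P_CRASH)
print("naive estimate: ", naive_estimate(P_HAZARD, P_CRASH, 20_000, rng))
print("skewed estimate:", importance_estimate(P_HAZARD, 0.5, P_CRASH, 20_000, rng))
```

With the same 20,000 simulated episodes, the naive test expects to see a crash only about 0.2 times, so it usually learns almost nothing, while the skewed test expects about 1,000 crashes and can pin the rate down fairly tightly. That is the sense in which focusing on rare, dangerous events can cut the amount of testing needed by orders of magnitude.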

Training self-driving algorithms in a virtual environment isn’t a new concept, but the Michigan team’s focus on complex scenarios provides a safe way to expose autonomous cars to dangerous situations. The team also built up a training data set of edge cases for other “safety-critical autonomous systems” to use.

With a few more tools like this, perhaps self-driving cars will be here sooner than we’re now predicting.

Image Credit: Nature/Henry Liu et al.