Giving AI a Sense of Empathy Could Protect Us From Its Worst Impulses

In the movie M3GAN, a toy developer gives her recently orphaned niece, Cady, a child-sized AI-powered robot with one goal: to protect Cady. The robot, M3GAN, sympathizes with Cady’s trauma. But things soon go south, with the pint-sized robot attacking anything and anyone it perceives as a threat to Cady.

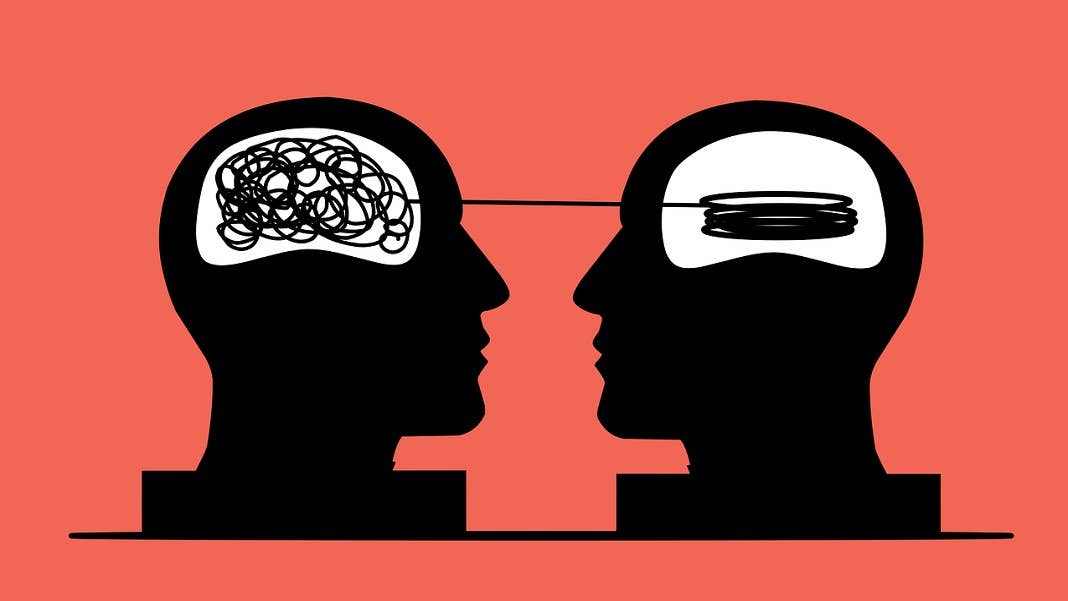

M3GAN wasn’t malicious. It followed its programming, but without any care or respect for other beings—ultimately including Cady. In a sense, as it engaged with the physical world, M3GAN became an AI sociopath.

Sociopathic AI isn’t just a topic explored in Hollywood. To Dr. Leonardo Christov-Moore at the University of Southern California and colleagues, it’s high time we build artificial empathy into AI—and nip any antisocial behaviors in the bud.

In an essay published last week in Science Robotics, the team argued for a neuroscience perspective to embed empathy into lines of code. The key is to add “gut instincts” for survival, such as the need to avoid physical pain. With a sense of how it may be “hurt,” an AI agent could then map that knowledge onto others. It’s similar to the way humans gauge each other’s feelings: I understand and feel your pain because I’ve been there before.

Building AI agents on empathy adds a layer of guardrails that “prevents irreversible grave harm,” said Christov-Moore. It’s very difficult to do harm to others if you’re digitally mimicking, and thus “experiencing,” the consequences.

Digital da Vinci

The rapid rise of ChatGPT and other large generative models took everyone by surprise, immediately raising questions about how they can integrate into our world. Some countries are already banning the technology due to cybersecurity risks and privacy concerns. AI experts also raised alarm bells in an open letter earlier this year that warned of the technology’s “profound risks to society.”

We are still adapting to an AI-powered world. But as these algorithms increasingly weave their way into the fabric of society, it’s high time to look ahead to their potential consequences. How do we guide AI agents to do no harm, but instead work with humanity and help society?

It’s a tough problem. Most AI algorithms remain a black box. We don’t know how or why many algorithms generate decisions.

Yet the agents have an uncanny ability to come up with “amazing and also mysterious” solutions that are counter-intuitive to humans, said Christov-Moore. Give them a challenge—say, finding ways to build as many therapeutic proteins as possible—and they’ll often imagine solutions that humans haven’t even considered.

Untethered creativity comes at a cost. “The problem is it’s possible they could pick a solution that might result in catastrophic irreversible harm to living beings, and humans in particular,” said Christov-Moore.

Adding a dose of artificial empathy to AI may be the strongest guardrail we have at this point.

Let’s Talk Feelings

Empathy isn’t sympathy.

As an example: I recently poured hydrogen peroxide onto a fresh three-inch-wide wound. Sympathy is when you understand it was painful and show care and compassion. Empathy is when you vividly imagine how the pain would feel on you (and cringe).

Previous research in neuroscience shows that empathy can be roughly broken down into two main components. One is purely logical: you observe someone’s behavior, decode their experience, and infer what’s happening to them.

Most existing methods for artificial empathy take this route, but it’s a fast track to sociopathic AI. Like their notorious human counterparts, these agents may mimic feelings without experiencing them, so they can predict and manipulate those feelings in others without any moral reason to avoid harm or suffering.

The second component completes the picture. Here, the AI is given a sense of vulnerability shared across humans and other systems.

“If I just know what state you’re in, but I’m not sharing it at all, then there’s no reason why it would move me unless I had some sort of very strong moral code I had developed,” said Christov-Moore.

A Vulnerable AI

One way to code vulnerability is to imbue the AI with a sense of staying alive.

Humans get hungry. Overheated. Frostbitten. Elated. Depressed. Thanks to evolution, we have a narrow but flexible window for each biological measurement that helps maintain overall physical and mental health, called homeostasis. Knowing the capabilities of our bodies and minds makes it possible to seek out whatever solutions are possible when we’re plopped into unexpected dynamic environments.

These biological constraints aren’t a bug but rather a feature for generating empathy in AI, said the authors.
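To make the idea of a homeostatic window concrete, here is a minimal Python sketch. It is not taken from the essay: the class name `HomeostaticVariable`, the `discomfort` measure, and the example ranges are all illustrative assumptions. Each variable has a comfortable range, and the agent “feels” discomfort in proportion to how far a reading strays outside it.

```python
from dataclasses import dataclass


@dataclass
class HomeostaticVariable:
    """One internal measurement with a comfortable window (hypothetical model)."""
    name: str
    low: float    # lower edge of the comfortable window
    high: float   # upper edge of the comfortable window
    value: float  # current reading

    def discomfort(self) -> float:
        """Distance outside the window; 0.0 while the reading stays inside it."""
        if self.value < self.low:
            return self.low - self.value
        if self.value > self.high:
            return self.value - self.high
        return 0.0


def total_discomfort(variables):
    """Sum discomfort over all variables; an agent would try to keep this low."""
    return sum(v.discomfort() for v in variables)


# Illustrative readings: temperature is too high, energy is within range.
body = [
    HomeostaticVariable("temperature", low=36.0, high=38.0, value=39.5),
    HomeostaticVariable("energy", low=0.3, high=1.0, value=0.5),
]
print(total_discomfort(body))  # 1.5
```

In this toy model, any action that pushes a reading back toward its window lowers the total, which is one simple way to stand in for a drive to stay alive.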

One previous idea for programming artificial empathy into AI is to write explicit rules for right versus wrong. It comes with obvious problems. Rule-based systems are rigid and struggle to navigate morally gray areas. They’re also hard to establish, because different cultures have vastly different frameworks for what’s acceptable.

In contrast, the drive for survival is universal, and a starting point for building vulnerable AI.

“At the end of the day, the main thing is your brain…has to be dealing with how to maintain a vulnerable body in the world, and your assessment of how well you’re doing at that,” said Christov-Moore.

These data manifest in consciousness as feelings that influence our choices: comfortable, uncomfortable, go here, eat there. These drives are “the underlying score to the movie of our lives, and give us a sense of [if things] are going well or they aren’t,” said Christov-Moore. Without a vulnerable body that needs to be maintained (either digitally or physically, as a robot), an AI agent has no skin in the game of collaborative life to drive it towards or away from certain behaviors.

So how to build a vulnerable AI?

“You need to experience something like suffering,” said Christov-Moore.

The team laid out a practical blueprint. The main goal is to maintain homeostasis. In the first step, the AI “kid” roams around an environment filled with obstacles while searching for beneficial rewards and keeping itself alive. Next, it starts to develop an idea of what others are thinking by watching them. It’s like a first date: the AI kid tries to imagine what another AI is “thinking” (how about some fresh flowers?), and when it’s wrong (the other AI hates flowers), suffers a sort of sadness and adjusts its expectations. With multiple tries, the AI eventually learns and adapts to the other’s preferences.
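The “first date” step above can be sketched as learning from prediction error. This is a hedged illustration, not the team’s implementation: the function `update_estimate`, the learning rate, and the numeric scale (from -1 for “hates it” to +1 for “loves it”) are assumptions made for the example. The agent guesses how the other will react, observes the real reaction, and nudges its guess by a fraction of the error; the size of the error plays the role of the “sadness” signal.

```python
def update_estimate(estimate, observed, learning_rate=0.5):
    """Move the estimate toward the observed reaction (simple delta rule).

    Returns the new estimate and the absolute prediction error, which
    stands in for how "wrong" the agent felt on this try.
    """
    error = observed - estimate
    return estimate + learning_rate * error, abs(error)


# Hypothetical scenario: the agent assumes the other likes flowers (+0.8),
# but the other actually hates them (-1.0).
estimate = 0.8
true_reaction = -1.0

for attempt in range(5):
    estimate, surprise = update_estimate(estimate, true_reaction)
    print(f"try {attempt + 1}: estimate={estimate:.3f}, surprise={surprise:.3f}")
```

With each try the surprise shrinks by half, so the estimate converges on the other’s actual preference.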

Finally, the AI maps the other’s internal models onto itself while maintaining its own integrity. When making a decision, it can then simultaneously consider multiple viewpoints by weighing each input for a single decision—in turn making it smarter and more cooperative.
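One simple way to picture weighing several viewpoints in a single decision is a weighted sum of predicted harm. The sketch below is an assumption-laden toy, not the essay’s method: `choose_action`, the harm tables, and the weights are all hypothetical. The agent scores each action by its own predicted harm plus the harm it predicts for another agent, then picks the lowest total.

```python
def choose_action(actions, harm_models, weights):
    """Pick the action with the lowest weighted predicted harm.

    harm_models: one dict per viewpoint, mapping action -> predicted harm.
    weights: how much each viewpoint counts in the final decision.
    """
    def weighted_harm(action):
        return sum(w * model[action] for model, w in zip(harm_models, weights))

    return min(actions, key=weighted_harm)


actions = ["fast", "careful"]
own_harm = {"fast": 0.1, "careful": 0.3}    # harm the agent predicts for itself
other_harm = {"fast": 0.9, "careful": 0.1}  # harm it predicts for the other agent

# Counting the other's viewpoint equally favors the careful option.
print(choose_action(actions, [own_harm, other_harm], [1.0, 1.0]))  # careful

# Ignoring the other's viewpoint favors the self-serving option.
print(choose_action(actions, [own_harm, other_harm], [1.0, 0.0]))  # fast
```

Setting the second weight to zero reproduces the “sociopathic” case: the agent can still predict the other’s harm, but that prediction no longer moves its choice.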

For now, these are only theoretical scenarios. Similar to humans, these AI agents aren’t perfect. They make bad decisions when pressed for time and ignore long-term consequences.

That said, the AI “creates a deterrent baked into its very intelligence…that deters it from decisions that might cause something like harm to other living agents as well as itself,” said Christov-Moore. “By balancing harm, well-being, and flourishing in multiple conflicting scenarios in this world, the AI may arrive at counter-intuitive solutions to pressing civilization-level problems that we have never even thought of. If we can clear this next hurdle…AI may go from being a civilization-level risk to the greatest ally we’ve ever had.”

Image Credit: Mohamed Hassan from Pixabay

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.
