This Enormous Computer Chip Beat the World’s Top Supercomputer at Molecular Modeling


Computer chips are a hot commodity. Nvidia is now one of the most valuable companies in the world, and the Taiwanese manufacturer of Nvidia's chips, TSMC, has been called a geopolitical force. It should come as no surprise, then, that a growing number of hardware startups and established companies are looking to take a jewel or two from the crown.

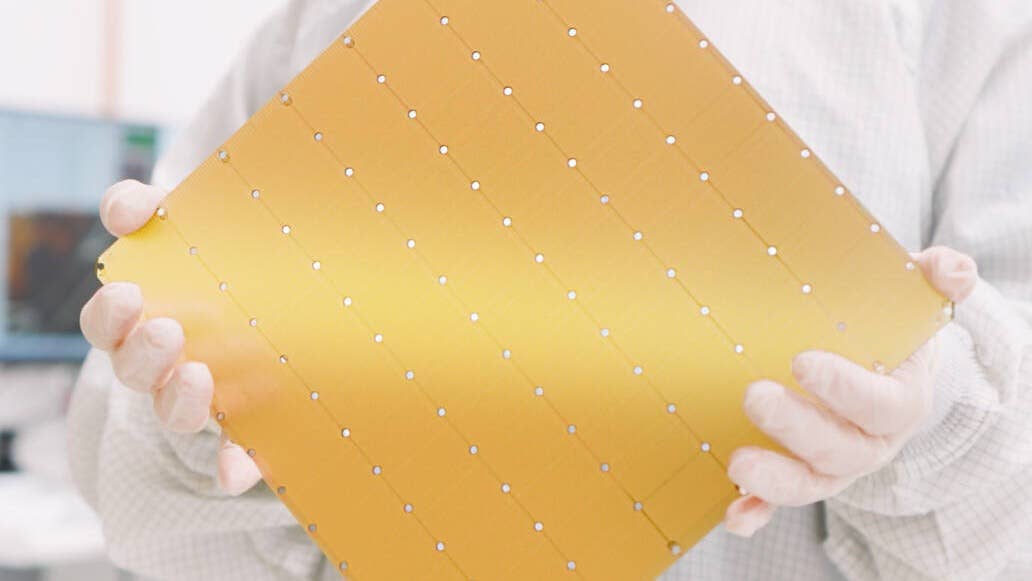

Of these, Cerebras is one of the weirdest. The company makes computer chips the size of tortillas bristling with just under a million processors, each linked to its own local memory. The processors are small but lightning quick as they don't shuttle information to and from shared memory located far away. And the connections between processors—which in most supercomputers require linking separate chips across room-sized machines—are quick too.

This means the chips are stellar for specific tasks. Recent preprint studies in two of these, one simulating molecules and the other training and running large language models, show the wafer-scale advantage can be formidable. In the former, the chips outperformed Frontier, the world's top supercomputer. In the latter, a stripped-down AI model used a third of the usual energy without sacrificing performance.

Molecular Matrix

The materials we make things with are crucial drivers of technology. They usher in new possibilities by breaking old limits in strength or heat resistance. Take fusion power. If researchers can make it work, the technology promises to be a new, clean source of energy. But liberating that energy requires materials to withstand extreme conditions.

Scientists use supercomputers to model how the metals lining fusion reactors might deal with the heat. These simulations zoom in on individual atoms and use the laws of physics to guide their motions and interactions at grand scales. Today’s supercomputers can model materials containing billions or even trillions of atoms with high precision.

But while the scale and quality of these simulations have progressed a lot over the years, their speed has stalled. Due to the way supercomputers are designed, they can only model so many interactions per second, and making the machines bigger only compounds the problem. This means the total length of molecular simulations has a hard practical limit.

Cerebras partnered with Sandia, Lawrence Livermore, and Los Alamos National Laboratories to see if a wafer-scale chip could speed things up.

The team assigned a single simulated atom to each processor. To let the processors quickly exchange information about position, motion, and energy, atoms that would be physically close in the real world were assigned to neighboring processors on the chip. As atoms moved about, they could hop between processors depending on their properties at any given time.
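The mapping described above can be illustrated with a toy sketch. This is not the team's code: the 2D grid, the `cell_size` parameter, and every function name here are assumptions made for illustration. It shows only the basic idea that an atom's position decides which processor owns it, so nearby atoms land on nearby processors and a moving atom hops to an adjacent one.

```python
from typing import Dict, List, Tuple

Core = Tuple[int, int]  # hypothetical (column, row) coordinate of a processor


def core_for_position(x: float, y: float, cell_size: float) -> Core:
    """Return the grid coordinate of the core that covers a point in space."""
    return (int(x // cell_size), int(y // cell_size))


def assign_atoms(positions: List[Tuple[float, float]], cell_size: float) -> Dict[Core, int]:
    """Place each atom on the core covering its position, one atom per core."""
    owner: Dict[Core, int] = {}
    for atom_id, (x, y) in enumerate(positions):
        core = core_for_position(x, y, cell_size)
        if core in owner:
            raise ValueError(f"core {core} already holds atom {owner[core]}")
        owner[core] = atom_id
    return owner


def neighboring_cores(core: Core) -> List[Core]:
    """List the eight cores surrounding a core (grid edges are ignored here)."""
    cx, cy = core
    return [
        (cx + dx, cy + dy)
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    ]


# Three atoms, each small enough a region to get its own core.
positions = [(0.2, 0.1), (1.3, 0.4), (0.6, 1.7)]
owner = assign_atoms(positions, cell_size=1.0)

# Atom 0 drifts diagonally, so it hops to an adjacent core.
old_core = core_for_position(0.2, 0.1, 1.0)
new_core = core_for_position(1.1, 1.2, 1.0)
```

Because ownership follows position, an atom only ever needs to talk to the handful of cores around it, which is the property that keeps communication local in this sketch.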

The team modeled 800,000 atoms in three materials—copper, tungsten, and tantalum—that might be useful in fusion reactors. The results were pretty stunning, with simulations of tantalum yielding a 179-fold speedup over the Frontier supercomputer. That means the chip could crunch a year's worth of work on a supercomputer into a few days and significantly extend the length of simulation from microseconds to milliseconds. It was also vastly more efficient at the task.
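As a rough check on the "year into a few days" figure, the arithmetic can be worked through directly. The sketch below assumes the 179-fold speedup applies uniformly to a full year of supercomputer time, which is a simplification; the function name is hypothetical.

```python
SPEEDUP = 179        # reported speedup for tantalum versus Frontier
DAYS_PER_YEAR = 365


def accelerated_days(baseline_days: float, speedup: float) -> float:
    """Wall-clock days needed once the same work runs `speedup` times faster."""
    return baseline_days / speedup


days = accelerated_days(DAYS_PER_YEAR, SPEEDUP)
print(f"{days:.2f} days")  # prints "2.04 days"
```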

"I have been working in atomistic simulation of materials for more than 20 years. During that time, I have participated in massive improvements in both the size and accuracy of the simulations. However, despite all this, we have been unable to increase the actual simulation rate. The wall-clock time required to run simulations has barely budged in the last 15 years," Aidan Thompson of Sandia National Laboratories said in a statement. “With the Cerebras Wafer-Scale Engine, we can all of a sudden drive at hypersonic speeds."

Although the chip increases modeling speed, it can't compete on scale. The number of simulated atoms is limited to the number of processors on the chip. Next steps include assigning multiple atoms to each processor and using new wafer-scale supercomputers that link 64 Cerebras systems together. The team estimates these machines could model as many as 40 million tantalum atoms at speeds similar to those in the study.

AI Light

While simulating the physical world could be a core competency for wafer-scale chips, their main focus has always been artificial intelligence. The latest AI models have grown exponentially, meaning the energy and cost of training and running them have exploded. Wafer-scale chips may be able to make AI more efficient.

In a separate study, researchers from Neural Magic and Cerebras worked to shrink the size of Meta's 7-billion-parameter Llama language model. To do this, they made what's called a "sparse" AI model where many of the algorithm's parameters are set to zero. In theory, this means they can be skipped, making the algorithm smaller, faster, and more efficient. But today's leading AI chips—called graphics processing units (or GPUs)—read algorithms in chunks, meaning they can't skip every zeroed out parameter.

Because memory is distributed across a wafer-scale chip, it can read every parameter and skip zeroes wherever they occur. Even so, extremely sparse models don't usually perform as well as dense models. But here, the team found a way to recover lost performance with a little extra training. Their model maintained performance—even with 70 percent of the parameters zeroed out. Running on a Cerebras chip, it sipped a meager 30 percent of the energy and ran in a third of the time of the full-sized model.
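The difference between skipping every zero and skipping only in chunks can be shown with a toy count of multiply operations. This is an illustrative sketch, not a model of how GPUs or Cerebras hardware actually execute: the block size, the weight values, and the function names are all assumptions.

```python
from typing import List


def mults_skipping_each_zero(weights: List[float]) -> int:
    """Count multiplies when every individual zero weight can be skipped."""
    return sum(1 for w in weights if w != 0.0)


def mults_skipping_zero_blocks(weights: List[float], block_size: int) -> int:
    """Count multiplies when weights are read in fixed-size blocks.

    A block can be skipped only if every weight in it is zero.
    """
    total = 0
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        if any(w != 0.0 for w in block):
            total += len(block)
    return total


# A hypothetical, highly sparse row of weights: 9 of 12 entries are zero.
weights = [0.5, 0.0, 0.0, 0.0,
           0.0, 0.0, 0.0, 0.0,
           0.0, 1.2, 0.0, 0.3]

fine_grained = mults_skipping_each_zero(weights)
block_based = mults_skipping_zero_blocks(weights, block_size=4)
dense = len(weights)
```

In this example the fine-grained count is 3 multiplies, the block-based count is 8, and the dense count is 12. Scattered zeros save far less work when whole chunks must be read together.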

Wafer-Scale Wins?

While all this is impressive, Cerebras is still niche. Nvidia's more conventional chips remain firmly in control of the market. At least for now, that appears unlikely to change. Companies have invested heavily in expertise and infrastructure built around Nvidia.

But wafer-scale may continue to prove itself in niche, but still crucial, applications in research. The approach may also become more common overall. The ability to make wafer-scale chips is only now being perfected. In a hint at what's to come for the field as a whole, the biggest chipmaker in the world, TSMC, recently said it's building out its wafer-scale capabilities. This could make the chips more common and capable.

For their part, the team behind the molecular modeling work say wafer-scale's influence could be more dramatic. Like GPUs before them, adding wafer-scale chips to the supercomputing mix could yield some formidable machines in the future.

“Future work will focus on extending the strong-scaling efficiency demonstrated here to facility-level deployments, potentially leading to an even greater paradigm shift in the Top500 supercomputer list than that introduced by the GPU revolution,” the team wrote in their paper.

Image Credit: Cerebras

Jason is editorial director at SingularityHub. He researched and wrote about finance and economics before moving on to science and technology. He's curious about pretty much everything, but especially loves learning about and sharing big ideas and advances in artificial intelligence, computing, robotics, biotech, neuroscience, and space.
