Today, Oak Ridge National Laboratory’s Frontier supercomputer was crowned fastest on the planet in the semiannual Top500 list. Frontier more than doubled the speed of the last titleholder, Japan’s Fugaku supercomputer, and is the first to officially clock speeds over a quintillion calculations a second—a milestone computing has pursued for 14 years.

That’s a big number. So before we go on, it’s worth putting into more human terms.

Imagine giving all 7.9 billion people on the planet a pencil and a list of simple addition or multiplication problems. Now, ask everyone to solve one problem per second for four and a half years. By marshaling the math skills of the Earth's population for nearly half a decade, you've now solved over a quintillion problems.

Frontier can do the same work in a second, and keep it up indefinitely. A thousand years’ worth of arithmetic by everyone on Earth would take Frontier just a little under four minutes.
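The comparison is easy to check. Here is a minimal Python sketch that assumes a 365.25-day year and treats Frontier's benchmarked 1.102 exaflops as a constant rate. That is a simplification, since real workloads rarely run at benchmark speed.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # assumption: a 365.25-day year
PEOPLE = 7.9e9                            # world population used in the analogy
FRONTIER_FLOPS = 1.102e18                 # Frontier's Top500 score, treated as a constant rate

def human_operations(years: float) -> float:
    """Problems solved if every person does one per second for `years` years."""
    return PEOPLE * years * SECONDS_PER_YEAR

def frontier_seconds(operations: float) -> float:
    """Seconds Frontier would need for the same number of operations."""
    return operations / FRONTIER_FLOPS

half_decade = human_operations(4.5)
millennium = human_operations(1000)

print(f"4.5 years of human effort: {half_decade:.3g} operations")
print(f"Frontier equivalent: {frontier_seconds(half_decade):.2f} s")
print(f"1,000 years of human effort on Frontier: {frontier_seconds(millennium) / 60:.2f} min")
```

The four-and-a-half-year effort comes to about 1.12 quintillion operations, which Frontier clears in roughly a second. The thousand-year figure works out to about 3.8 minutes.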

This blistering performance kicks off a new era known as exascale computing.

The Age of Exascale

The number of floating-point operations, or simple mathematical problems, a computer solves per second is denoted FLOP/s or colloquially “flops.” Progress is tracked in multiples of a thousand: A thousand flops equals a kiloflop, a million flops equals a megaflop, and so on.
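That thousand-fold ladder maps directly onto the standard metric prefixes. As a small illustration, this Python sketch picks the largest prefix that keeps a number at or above 1. The helper name `format_flops` is our own invention, not a standard tool.

```python
# Metric prefixes in steps of 1,000, matching the ladder described above
PREFIXES = ["", "kilo", "mega", "giga", "tera", "peta", "exa", "zetta"]

def format_flops(flops: float) -> str:
    """Express a FLOP/s value with the largest prefix that keeps it >= 1."""
    step = 0
    value = float(flops)
    # Divide by 1,000 until the number drops below 1,000 (or prefixes run out)
    while value >= 1000 and step < len(PREFIXES) - 1:
        value /= 1000
        step += 1
    return f"{value:.4g} {PREFIXES[step]}flops"

for label, rate in [("Xbox Series X", 12e12), ("Frontier", 1.102e18)]:
    print(f"{label}: {format_flops(rate)}")
```

Each step up the ladder is just one more division by 1,000, which is why going from a teraflop to an exaflop means a millionfold increase.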

The ASCI Red supercomputer was the first to record speeds of a trillion flops, or a teraflop, in 1997. (Notably, an Xbox Series X game console now packs 12 teraflops.) Roadrunner first broke the petaflop barrier, a quadrillion flops, in 2008. Since then, the fastest computers have been measured in petaflops. Frontier is the first to officially notch speeds over an exaflop, 1.102 exaflops to be exact, or roughly 1,000 times faster than Roadrunner.

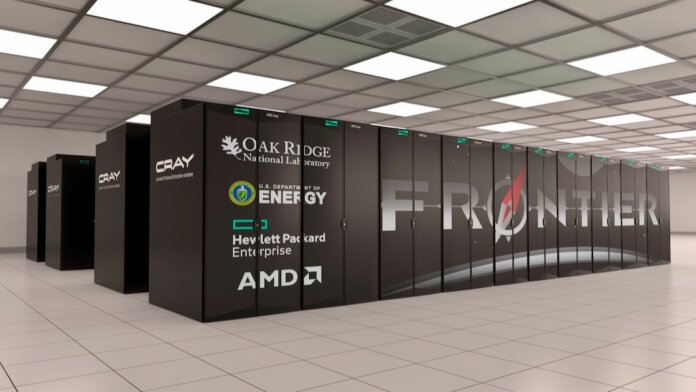

It’s true today’s supercomputers are far faster than older machines, but they still take up whole rooms, with rows of cabinets bristling with wires and chips. Frontier, in particular, is a liquid-cooled system by HPE Cray running 8.73 million AMD processing cores. In addition to being the fastest in the world, it’s also the second most efficient—outdone only by a test system made up of one of its cabinets—with a rating of 52.23 gigaflops/watt.

So, What’s the Big Deal?

Most supercomputers are funded, built, and operated by government agencies. They’re used by scientists to model physical systems, like the climate or structure of the universe, but also by the military for nuclear weapons research.

Supercomputers are now tailor-made to run the latest artificial intelligence algorithms, too. A few years ago, Top500 added a lower-precision benchmark to measure supercomputing speed on AI applications. By that measure, Fugaku eclipsed an exaflop back in 2020 and went on to set the previous record of 2 exaflops. Frontier smashed that record with AI speeds of 6.86 exaflops.

As very large machine learning algorithms have emerged in recent years, private companies have begun to build their own machines alongside governments. Microsoft and OpenAI made headlines in 2020 with a machine they claimed was the fifth fastest in the world. In January, Meta said its upcoming RSC supercomputer would be the world's fastest AI supercomputer, at 5 exaflops. (It appears they'll now need a few more chips to match Frontier.)

Frontier and these new private supercomputers will let machine learning algorithms push the limits further. Today's most advanced models boast hundreds of billions of parameters, or internal connections, but upcoming models will likely grow into the trillions.

In short, exascale supercomputers will let researchers advance technology and pursue cutting-edge science that was impractical on slower machines.

Is Frontier Really the First Exascale Machine?

When exactly supercomputing first broke the exaflop barrier partly depends on how you define it and what’s been measured.

Folding@Home, which is a distributed system made up of a motley crew of volunteer laptops, broke an exaflop at the beginning of the pandemic. But according to Top500 cofounder Jack Dongarra, Folding@Home is a specialized system that’s “embarrassingly parallel” and only works on problems with pieces that can be solved totally independently.
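To make "embarrassingly parallel" concrete, here is a toy Python sketch. The functions `score_chunk`, `embarrassingly_parallel`, and `tightly_coupled` are hypothetical illustrations, not anything Folding@Home actually runs. Independent chunks can be farmed out to workers in any order, while a loop in which each step feeds the next cannot be split up that way.

```python
from concurrent.futures import ThreadPoolExecutor

def score_chunk(chunk_id: int) -> int:
    """Hypothetical independent work unit: depends only on its own input."""
    return sum((chunk_id * i) % 7 for i in range(10_000))

def embarrassingly_parallel(chunk_ids):
    # No chunk needs another chunk's result, so they can run in any order
    # on any worker, with no communication between workers.
    with ThreadPoolExecutor(max_workers=4) as pool:
        return list(pool.map(score_chunk, chunk_ids))

def tightly_coupled(steps: int, state: float = 1.0) -> float:
    # Each step needs the previous step's result, so this loop cannot be
    # handed out to independent workers the way the chunks above can.
    for _ in range(steps):
        state = 0.5 * state + 1.0
    return state

parallel_results = embarrassingly_parallel(range(8))
serial_results = [score_chunk(c) for c in range(8)]
assert parallel_results == serial_results  # execution order doesn't change the answer
print(f"after 50 dependent steps: {tightly_coupled(50):.6f}")
```

Python threads share a single interpreter lock, so this shows the structure of the problem rather than a real speedup. For that you would use separate processes or, as Folding@Home does, separate machines.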

More relevantly, rumors were flying last year that China had as many as two exascale supercomputers operating in secret. Researchers published some details on the machines in papers late last year, but they have yet to be officially benchmarked by Top500. In an IEEE Spectrum interview last December, Dongarra speculated that if exascale machines exist in China, the government may be trying not to shine a spotlight on them to avoid stirring up geopolitical tensions that could drive the US to restrict key technology exports.

So it's possible China beat the US to the exascale punch, but going by the Top500, the ranking the supercomputing field has used to crown its top dog since the early 1990s, Frontier still gets the official nod.

Next Up: Zettascale?

It took about 11 years to go from terascale to petascale and another 14 to reach exascale. The next big leap forward may well take as long or longer. The computing industry continues to make steady progress on chips, but the pace has slowed and each step has become more costly. Moore's Law isn't dead, but it's not as steady as it used to be.

For supercomputers, the challenge goes beyond raw computing power. It might seem that you should be able to scale any system to hit whatever benchmark you like: Just make it bigger. But scale requires efficiency too, or energy requirements spiral out of control. It’s also harder to write software to solve problems in parallel across ever-bigger systems.
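A quick back-of-the-envelope calculation shows why efficiency matters: dividing speed by efficiency gives power. This Python sketch uses Frontier's own figures (1.102 exaflops at 52.23 gigaflops per watt) and assumes, purely for illustration, that a zettascale machine were built with no efficiency gain at all.

```python
FRONTIER_FLOPS = 1.102e18        # Top500 score, FLOP/s
FRONTIER_EFFICIENCY = 52.23e9    # Green500 rating, FLOP/s per watt

def power_watts(flops: float, flops_per_watt: float) -> float:
    """Power needed to sustain `flops` at a given energy efficiency."""
    return flops / flops_per_watt

frontier_mw = power_watts(FRONTIER_FLOPS, FRONTIER_EFFICIENCY) / 1e6
# Hypothetical: a zettaflop (1e21 FLOP/s) at Frontier's efficiency
zetta_gw = power_watts(1e21, FRONTIER_EFFICIENCY) / 1e9

print(f"Frontier: about {frontier_mw:.1f} MW")
print(f"A zettaflop at Frontier's efficiency: about {zetta_gw:.1f} GW")
```

Frontier works out to about 21 megawatts, while a zettaflop at the same efficiency would need about 19 gigawatts, roughly the output of 19 one-gigawatt power plants. Simply making the machine 1,000 times bigger multiplies the power bill by 1,000 too.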

The next 1,000-fold leap, known as zettascale, will require innovations in chips, the systems connecting them into supercomputers, and the software running on them. A team of Chinese researchers predicted we’d hit zettascale computing in 2035. But of course, no one really knows for sure. Exascale, predicted to arrive by 2018 or 2020, made the scene a few years behind schedule.

What’s more certain is the hunger for greater computing power isn’t likely to dwindle. Consumer applications, like self-driving cars and mixed reality, and research applications, like modeling and artificial intelligence, will require faster, more efficient computers. If necessity is the mother of invention, you can expect ever-faster computers for a while yet.

Image Credit: Oak Ridge National Laboratory (ORNL)