Muscle Sensing Enhances Microsoft Surface (Video)


How Love is Like A Computer #234: Reading someone's mind often means paying closer attention to their body.

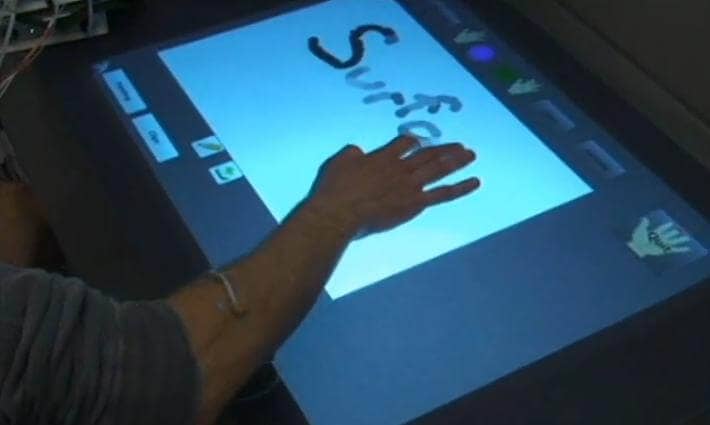

Forearm sensors translate muscle movements into control commands for Microsoft Surface.

Researchers at the University of Washington, University of Toronto, and Microsoft Research have developed a system that controls a computer through a device that reads muscle movement. Using eight sensors attached to the surface of the forearm, you can now communicate basic commands by moving your fingers and hand in stylized gestures. The team has adapted the new system to work with Microsoft Surface, the advanced table-sized touchscreen. We've got a great demonstration video of the muscle control hardware interacting with MS Surface after the break.

It seems like every few days, a tech company finds a new way for us to control computers. These next-generation human-computer interfaces all seem to share one goal: making computer control more physically intuitive. In some cases, the physicality is required by the device itself. Cyberdyne's HAL robot suit relies on electromyography (EMG), just like the new muscle-sensing control technology. However, tactile interfaces are also becoming popular purely as replacements for keyboards and mice, especially in casual settings like the Hard Rock Cafe in Las Vegas. For those of us who have adapted well to typing and pointing and clicking, the new physical interfaces may seem imprecise, and to some extent they still are. Yet when paired with improved algorithms for speech, gesture, and facial recognition, this new line of human-computer interfaces is getting ready to connect us directly to our digital world. Keyboards and mice, like so many middlemen in our evolving economy, are being cut for efficiency.

Watching the demonstration video for the muscle-enhanced Microsoft Surface controls, I am struck by both the ease of use and the lack of necessity. Do we really need a way to let each finger represent a different color? Is pressure-sensitive finger painting that important? Of course not, but these are clearly just first steps. On a standard computer, you have two areas: a control space and a display space. On touchscreens and other new media, the two areas have merged. Muscle controls are just one way we may be able to take advantage of that new overlap. Building on intuition, the simple pinches to pick up and flicks to undo could evolve into a gesture system that maximizes control of the device while remaining easy to use. It will be interesting to see how these gestures differ across the globe. Will everyone find a flick a common-sense way to erase their actions?


Clearly the EMG controls have applications far beyond the Microsoft Surface platform. The research team presented some of those applications at the ACM Symposium on User Interface Software and Technology (UIST). Their presentation claims that the system recognizes gestures fairly accurately under a range of conditions: 79% while making pinching motions, 85% while holding a mug, and 88% while carrying a weighted bag. Right now, the system requires eight sensors attached to the skin of the forearm and hardwired to the computer. Future designs may need fewer sensors and could use wireless signaling. We've got the UIST presentation below.
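To give a rough sense of what "recognizing a gesture" from eight forearm sensors can involve, here is a minimal Python sketch. The article doesn't describe the researchers' actual recognition method, so everything below is an illustrative assumption: each sensor's signal is summarized by its root-mean-square (RMS) amplitude over a short window, and a simple nearest-centroid rule picks whichever trained gesture those eight numbers most resemble. The gesture names (`pinch_index`, `pinch_middle`) and the `synthetic_window` signal generator are hypothetical stand-ins, not real EMG data.

```python
import math
from typing import Dict, List, Sequence, Set

NUM_CHANNELS = 8  # one channel per forearm sensor, matching the article's eight sensors

Window = Sequence[Sequence[float]]  # a list of samples, each holding one reading per channel


def rms_features(window: Window) -> List[float]:
    """Root-mean-square amplitude of each channel over one window of samples."""
    n = len(window)
    return [
        math.sqrt(sum(sample[ch] ** 2 for sample in window) / n)
        for ch in range(NUM_CHANNELS)
    ]


def train_centroids(examples: Dict[str, List[Window]]) -> Dict[str, List[float]]:
    """Average the RMS feature vectors of each gesture's training windows."""
    centroids = {}
    for gesture, windows in examples.items():
        feats = [rms_features(w) for w in windows]
        centroids[gesture] = [sum(col) / len(feats) for col in zip(*feats)]
    return centroids


def classify(window: Window, centroids: Dict[str, List[float]]) -> str:
    """Pick the gesture whose centroid is nearest (Euclidean) to this window's features."""
    features = rms_features(window)
    return min(centroids, key=lambda g: math.dist(features, centroids[g]))


def synthetic_window(active: Set[int], amp: float = 1.0, length: int = 50) -> List[List[float]]:
    """Hypothetical test signal: active channels oscillate at `amp`, the rest at 0.1."""
    return [
        [(amp if ch in active else 0.1) * (-1) ** t for ch in range(NUM_CHANNELS)]
        for t in range(length)
    ]


if __name__ == "__main__":
    # Hypothetical gestures: each one mostly activates a different pair of sensors.
    centroids = train_centroids({
        "pinch_index": [synthetic_window({0, 1})],
        "pinch_middle": [synthetic_window({2, 3})],
    })
    print(classify(synthetic_window({0, 1}, amp=0.8), centroids))  # pinch_index
```

Nearest-centroid matching is used here only because it fits in a few lines. A working muscle-sensing interface would likely need richer signal features and a stronger classifier, and the accuracy figures quoted above come from the researchers' own system, not from anything like this sketch.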

I consider the EMG sensors, Microsoft Surface, and many other "next-generation" input devices to still be in their infancy. They're expensive, not widely available, and in need of many rounds of refinement before they can compete with current technology. Of course, they are also amazingly cool, which is probably why some, maybe even most, of them will continue to be adapted and evolved in the coming years. I can't wait to see what they grow into.

Screen capture and video credits: Microsoft, University of Washington, University of Toronto, ACM UIST
