Monkeys Control Coordinated Arms Using Brain-Machine Interface

Duke University researchers Miguel Nicolelis and Peter Ifft managed to create a two-handed brain-machine interface using monkeys in a study recently published in Science Translational Medicine.

The term “brain-machine interface” seems to evoke fear and discomfort in the minds of many, and gleeful enthusiasm in the minds of technophiles. Both of these reactions often overlook one of the major reasons for developing such interfaces: to enable amputees and those who suffer from paralysis to regain self-sufficiency by mentally controlling a mobile robot or robotic getup.

Prosthetic limbs controlled by a wearer’s thoughts are already available, though not commercially. They’re great for people who lack use of a single limb, usually just the part below the knee or elbow. But what about quadriplegics, for example?

Duke University researchers Miguel Nicolelis and Peter Ifft managed to create a two-handed brain-machine interface using monkeys in a study recently published in Science Translational Medicine. Nicolelis has previously done similar work with a single arm. Attempts to empower two robotic limbs using a brain-machine interface have met with very limited success.

“Bimanual movements in our daily activities — from typing on a keyboard to opening a can — are critically important. Future brain-machine interfaces aimed at restoring mobility in humans will have to incorporate multiple limbs to greatly benefit severely paralyzed patients,” Nicolelis said in a news release.

To observe how the brain produces coordinated movement, Nicolelis and Ifft trained monkeys to use the hands of a realistic on-screen avatar, first with a joystick and, eventually, with just their minds. (An algorithm cleaned up the signals, looking for just the movement commands.)

Using surgically implanted arrays, the researchers achieved a two-handed BMI by recording the activity of a wider selection of neurons than had been used in previous efforts: nearly 500 from multiple areas on both sides of the animals’ brains. They then sent the movement impulses on to power the avatar arms and captured them for subsequent analysis.

The brain activity involved in coordinating both limbs did not look like the sum of the activity involved in moving each limb on its own, they found.

“When we looked at the properties of individual neurons, or of whole populations of cortical cells, we noticed that simply summing up the neuronal activity correlated to movements of the right and left arms did not allow us to predict what the same individual neurons or neuronal populations would do when both arms were engaged together in a bimanual task,” Nicolelis said.

The monkeys' brains also exhibited a great deal of adaptability as they learned to manipulate virtual arms, an aspect of the research that suggests that paralyzed people could learn to control robotic limbs. But while Nicolelis is the dominant mind in this sort of technology, his advances haven't yet surmounted the need for electrodes in the brains of his subjects.

The findings will support the Walk Again Project, a Duke-led international consortium of BMI researchers that plans to unveil a prototype robotic exoskeleton that lets quadriplegics walk at the opening ceremony of the 2014 World Cup in Nicolelis's native Brazil.

Nicolelis reportedly wants to highlight his work on the World Cup stage by having young quadriplegics kick a soccer ball with the help of a robotic exoskeleton.

Photos and video courtesy Duke University

Cameron received degrees in Comparative Literature from Princeton and Cornell universities. He has worked at Mother Jones, SFGate and IDG News Service and been published in California Lawyer and SF Weekly. He lives, predictably, in SF.

Related Articles

How Scientists Are Growing Computers From Human Brain Cells—and Why They Want to Keep Doing It

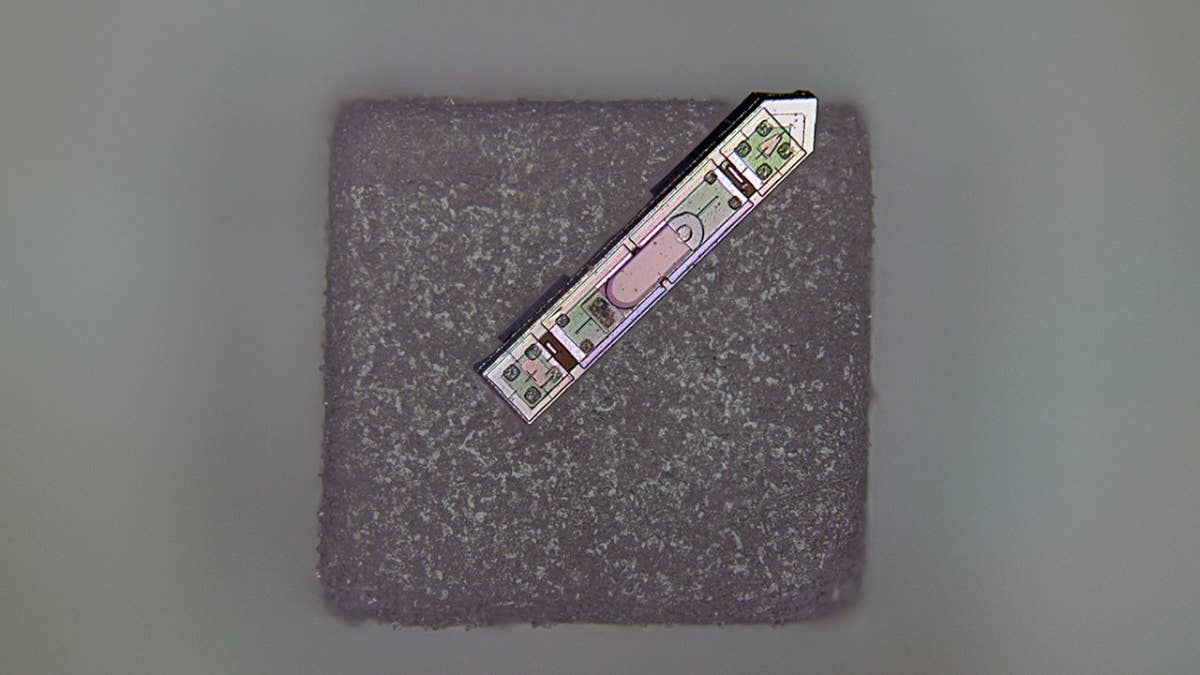

These Brain Implants Are Smaller Than Cells and Can Be Injected Into Veins

This Wireless Brain Implant Is Smaller Than a Grain of Salt

What we’re reading