Two 8-foot robots recently began directing traffic in Kinshasa, the capital of the Democratic Republic of Congo. The automatons are little more than traffic lights dressed up as campy 1960s robots, and yet drivers obey them more readily than the human officers who previously directed traffic there.

Maybe it’s because the robots are bigger than the average traffic cop. Maybe it’s their fearsome metallic glint. Or maybe it’s because, in addition to their LED signals and stilted hand waving, they have multiple cameras recording ne’er-do-wells.

“If a driver says that it is not going to respect the robot because it’s just a machine the robot is going to take that, and there will be a ticket for him,” Isaie Therese, the engineer behind the bots, told CCTV Africa.

The Congolese bots provide a fascinating glimpse into human-robot interaction. It’s a rather surprising observation that humans so readily obey robots, even very simple ones, in certain situations. But the observation isn’t merely anecdotal—there’s research on the subject. (Hat tip to Motherboard for pointing out a fascinating study for us robot geeks.)

Last year, scientists at the University of Manitoba observed a group of 27 volunteers pressured to work on a docket of mundane tasks by either a 27-year-old human actor in a lab coat or an Aldebaran Nao robot, both called “Jim.”

Ever since the controversial 1971 Stanford prison experiment—wherein participants assigned roles of guards and prisoners demonstrated just how situational human morality can be—similar behavioral work has been rare and fraught.

Even so, if carefully conducted with the participants’ well-being in mind, such studies can provide valuable behavioral insights. The results of the Stanford study are still taught over 40 years later.

In this case, the researchers gave participants a moderately uncomfortable situation, told them they were free to quit at any time, and debriefed them immediately following the test.

Each participant was paid C$10 to change file extensions from .jpg to .png as part of a “machine learning” experiment. To heighten their discomfort and the sense the task was endless, the workload began with a small batch of 10 files but grew each time the participant completed the assigned files (ultimately reaching a batch of 5,000).

Each time a participant protested, they were urged on by either the human or the robot. The human proved the more convincing authority figure, but the robot was far from feckless.

Ten of the 13 participants said they viewed the robot as a legitimate authority, though they couldn’t explain why. Several people tried to strike up a conversation, and one showed remorse when the robot said it was ending the experiment and notifying the lead researcher, exclaiming, “No! Don’t tell him that! Jim, I didn’t mean that….I’m sorry.”

The researchers write that the novelty of the robot’s design may have detracted from its perceived authority. And involving humans in the robot part of the experiment may have led participants to shift their sense of responsibility from robot to human.

None of the participants, for example, listed pressure from the robot as a reason for their obedience. Instead, they cited factors like interest in future tasks, trusting the robot had been programmed by a qualified human researcher, and a feeling of obligation to the lead human scientist.

Despite these caveats, the researchers write, the fact remains that, “A small, child-like humanoid robot had enough authority to pressure 46% of participants to rename files for 80 minutes, even after indicating they wanted to quit.”

And in what may be the most disturbing result, a number of the participants expressed concern the robot might be broken or malfunctioning, yet they didn’t stop working. “They followed a possibly ‘broken’ robot to do something they would rather not do.”

Few studies exist beyond the University of Manitoba paper. However, the scientists do note that past research appears to corroborate their findings.

“In the single previous case that uses a deterrent (embarrassment), the results are striking: a robot can push people to do embarrassing acts such as removing their clothing or putting a thermometer in their rectum.”

Of course, two studies, one with just 27 people, and an anecdotal example (the Congolese bots) don’t prove humans will dutifully yield the planet when the robots revolt.

How much of the behavior is due to fear of or respect for the humans behind the scenes? If the Congolese bots were simply traffic lights and cameras, would folks still readily obey them? Maybe the drivers know human cops can be argued with, ignored, or corrupted, but a machine (humanoid or not) won’t be similarly manipulated.

More study would be worthwhile, the University of Manitoba researchers argue. Human-robot interaction will grow in coming years, particularly in healthcare and the military. A greater body of behavioral research can inform future designs and potentially prevent harmful obedience. (Or, we might add, promote healthy disobedience.)

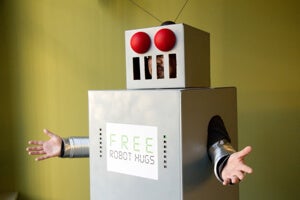

Image Credit: Mike Kabamba/YouTube; University of Manitoba; Ben Husmann/Flickr