Like many other eager early adopters, my first encounter with virtual reality headsets began with heart-pounding excitement, and ended in nightmarish nausea.

For an unfortunate subset of people, virtual reality’s side effects are no joke. The eyestrain and stomach upset can be so severe that Gabe Newell, the president and co-founder of gaming heavyweight Valve, dubbed current headsets the “world’s best motion sickness inducers.”

And it’s certainly not a fringe problem.

According to past US Navy studies using VR-like flight simulators, as many as 40% of people can be affected, depending on the nature of the task. Even worse, the more active users are and the longer they stay in the virtual world, the more likely they are to become very, very sick.

With Google Cardboard and Samsung Gear VR already in limited release, and the highly anticipated Oculus Rift poised to hit the market early next year, companies are furiously working to solve VR’s simulator sickness.

Valve, for example, presented a motion-tracking system at this year’s Game Developers Conference that orients users by tracking their real-world movements with spinning laser beams, so the virtual world can reproduce them faithfully. Paired with the HTC Vive headset, the tech, called Lighthouse, is purported to obliterate the dreaded puke problem.

Meanwhile, others, such as Fove and Cloudhead Games, are attacking the problem from different angles: the former with advanced eye and head tracking, the latter with in-game movements better tailored to VR.

Then there’s Magic Leap, the secretive augmented reality (AR) company that shot to fame after receiving $542 million from a team of investors led by Google. In an interview with Wired this March, Magic Leap CEO Rony Abovitz tantalizingly hinted that the company’s technology will use a “dynamic digital light field signal” to produce “the closest replication to what the real world is doing as it interacts with your eye-brain system.”

Magic Leap may be in stealth mode, but others who also believe that light field technology is the solution certainly aren’t.

At SIGGRAPH 2015, the annual computer graphics and interactive techniques conference held in Los Angeles last week, a team from the Stanford Computational Imaging Group presented a next-generation VR headset prototype that also relies on light fields to generate virtual objects that mimic how our eyes interact with the real 3D world.

Led by assistant professor Gordon Wetzstein and in collaboration with NVIDIA, the team set out to solve a well-known vision issue in VR called the vergence-accommodation conflict. In a nutshell, this quirky vision problem occurs when the VR world is rendered in an unnatural “flat” representation that dramatically differs from how our visual system usually pieces together 3D information.

When we look at objects in the real world, say bottles of neatly lined-up Bushmills Irish Whiskey, two things happen. First, the two eyes rotate so that their lines of sight meet on the object: inward (converging) for nearby objects, outward (diverging) for distant ones. Hence the name “vergence,” a depth cue. If vergence is off, you end up seeing double. Most current-gen VR headsets support this cue by presenting slightly offset images to the left and right eyes, creating the binocular disparity that tells the eyes where to converge. Many headsets also support other depth cues, such as motion parallax or binocular occlusions.

The problem comes at the next step.

To make that single image sharp and clear, the lens in each eye then changes shape to bring it into focus (“accommodation,” a focus cue akin to focusing a camera lens). In the real world, vergence and accommodation are intricately linked: both respond to the same distance, so our eyes don’t have to fight to complete the two tasks.

Strap on a VR headset, however, and the eyes are forced to keep focusing on the display screen, which sits at a single fixed optical distance, even as their vergence shifts to match the depth of each virtual object. In other words, although the headset supplies depth cues, it doesn’t supply matching focus cues. This thorny conflict causes eyestrain that often leads to headaches and the dreaded VR sickness.
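To put rough numbers on the conflict, the sketch below compares the two cues for a few object distances. Vergence is expressed as the angle between the eyes’ lines of sight, and focus demand in diopters (1 divided by the distance in meters). The 64 mm interpupillary distance and the 1.5 m optical distance of the headset’s virtual screen are illustrative assumptions, not specifications of the Stanford prototype or any real headset.

```python
import math

# Illustrative assumptions only (not taken from any real headset):
IPD_M = 0.064          # interpupillary distance, about 64 mm
FOCAL_PLANE_M = 1.5    # fixed optical distance at which the headset's screen appears


def vergence_angle_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between the two lines of sight when both eyes fixate a point at distance_m."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def diopters(distance_m: float) -> float:
    """Focusing power needed to see a point at distance_m sharply (1 / meters)."""
    return 1.0 / distance_m


def va_mismatch_diopters(rendered_depth_m: float, focal_plane_m: float = FOCAL_PLANE_M) -> float:
    """Gap between where vergence says the object is and where the eye must focus.

    For a real object both cues follow the same distance, so the gap is zero.
    In a conventional headset, focus stays locked to the screen's focal plane.
    """
    return abs(diopters(rendered_depth_m) - diopters(focal_plane_m))


for depth in (0.3, 0.5, 1.5, 10.0):
    print(f"object at {depth:>4} m: vergence {vergence_angle_deg(depth):5.2f} deg, "
          f"focus mismatch {va_mismatch_diopters(depth):.2f} D")
```

The mismatch vanishes only when a virtual object happens to sit on the screen’s focal plane; nearby objects produce the largest gap, which is where the conflict is usually most noticeable.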

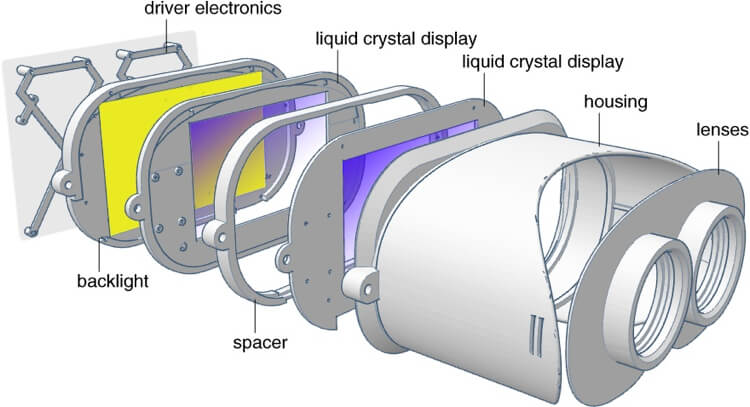

The Stanford team’s solution is to place two transparent LCD displays one in front of the other, with a spacer between them and a uniform backlight behind them.

To start off, the researchers used computer modeling to generate a light field for a virtual object or scene. Whereas conventional stereo rendering produces only one image per eye, this step yields 25 images, each showing the virtual scene from a slightly different position across the user’s pupil.

“This collection of images is the light field,” Wetzstein explained to Singularity Hub.
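How those 25 viewpoints might be laid out can be sketched as follows. We assume a 5 x 5 grid spread evenly across a hypothetical 4 mm pupil; the grid size, the pupil diameter and the `render_view` callback are placeholders for illustration, not details of the Stanford renderer.

```python
from itertools import product

# Assumptions for illustration only: a 5 x 5 grid of viewpoints (25 views)
# spanning the diameter of a hypothetical 4 mm pupil.
GRID = 5
PUPIL_DIAMETER_MM = 4.0


def pupil_view_offsets(grid: int = GRID, pupil_mm: float = PUPIL_DIAMETER_MM):
    """Return (x, y) camera offsets in mm, one per light field view, row by row.

    This is a square grid spanning the pupil's diameter, so the corner views fall
    slightly outside a round pupil; a real system might lay views out differently.
    """
    step = pupil_mm / (grid - 1)
    half = pupil_mm / 2
    coords = [i * step - half for i in range(grid)]
    return [(x, y) for y, x in product(coords, coords)]


def render_light_field(render_view, eye_center_mm):
    """Render one image per viewpoint; render_view is a hypothetical renderer callback."""
    ex, ey = eye_center_mm
    return [render_view((ex + dx, ey + dy)) for dx, dy in pupil_view_offsets()]


# Usage with a stand-in "renderer" that simply returns the camera position it was given.
views = render_light_field(lambda pos: pos, eye_center_mm=(32.0, 0.0))
print(len(views), views[0], views[-1])
```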

Next, a custom-developed algorithm splits the light field into two image layers: one for the front LCD, closer to the viewer, and one for the rear LCD. Then, in real time, each layer is shown on its LCD screen. Because each LCD attenuates the light passing through it, the two layers together reconstruct the full depth information of the virtual object.

The result approximates the light field that a real object would produce, so our eyes are free to explore the object at different depths and focus wherever they want in the virtual space without strain.
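The splitting step can be illustrated with a toy, one-dimensional model. Because stacked LCDs each attenuate light, a ray passing through rear pixel i and front pixel j comes out with brightness rear[i] * front[j], so fitting two layers to a target light field amounts to a nonnegative rank-1 matrix factorization. The sketch below uses simple alternating least-squares updates; it is a generic approach under these simplifying assumptions, not the Stanford team’s actual algorithm, and a real system works with 2D panels and far more rays.

```python
import math

# Toy "flatland" model, assumed for illustration only: ray (i, j) leaves the
# uniform backlight, crosses rear-LCD pixel i, then front-LCD pixel j. Each LCD
# pixel transmits a fraction of the light in [0, 1], so the ray's brightness
# is rear[i] * front[j] (stacked attenuators multiply).


def factor_two_layers(target, iterations=100):
    """Fit target[i][j] >= 0 with rear[i] * front[j] (nonnegative rank-1).

    Alternating least-squares updates: starting from positive layers and a
    nonnegative target keeps every value nonnegative.
    """
    n, m = len(target), len(target[0])
    rear, front = [1.0] * n, [1.0] * m
    for _ in range(iterations):
        ff = max(sum(v * v for v in front), 1e-12)
        rear = [sum(target[i][j] * front[j] for j in range(m)) / ff for i in range(n)]
        rr = max(sum(v * v for v in rear), 1e-12)
        front = [sum(target[i][j] * rear[i] for i in range(n)) / rr for j in range(m)]

    # rear * c and front / c give the same product; choose c so both layers
    # share the same peak, then clip to the physically valid range [0, 1].
    peak_rear, peak_front = max(rear), max(front)
    if peak_rear > 0 and peak_front > 0:
        c = math.sqrt(peak_front / peak_rear)
        rear = [v * c for v in rear]
        front = [v / c for v in front]
    rear = [min(max(v, 0.0), 1.0) for v in rear]
    front = [min(max(v, 0.0), 1.0) for v in front]
    return rear, front


def reconstruct(rear, front):
    """Light field that the two stacked layers actually emit."""
    return [[r * f for f in front] for r in rear]


# Usage: a target the two layers can reproduce exactly, because it is itself rank-1.
rear_true = [0.2, 0.5, 1.0]
front_true = [0.9, 0.4, 0.6, 0.8]
target = reconstruct(rear_true, front_true)
rear, front = factor_two_layers(target)
approx = reconstruct(rear, front)
max_error = max(abs(a - t) for ra, rt in zip(approx, target) for a, t in zip(ra, rt))
print(f"max reconstruction error: {max_error:.2e}")
```

Targets that aren’t rank-1 can only be approximated: in this toy model, the updates for the light field [[1, 0], [0, 1]] settle on a uniform 0.5 for every ray, one reason a two-layer display approximates, rather than exactly reproduces, the rendered light field.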

Honestly, not a lot of people are working in this area, says Wetzstein. When asked whether his team is directly competing with Magic Leap, Wetzstein shrugged.

“Magic Leap is speculated to address the vergence-accommodation conflict and they have used the term light field in public announcements, but … it’s difficult to predict what they are developing,” he said. “I would assume it’s something related to what we do.”

To be clear, the Stanford prototype does not claim to solve VR sickness once and for all. Other issues, such as limited resolution, dynamic range and latency, can also induce the dreaded nausea.

Then, for a smaller set of people, there’s the traditional motion sickness problem: a mismatch between what the eyes see and what the inner ear’s vestibular system senses. A classic example is reading in a moving car, where the eyes are fixed on a static page while the body rocks along with the wheels. It’s a very interesting problem but a difficult one to deal with, says Stanford researcher Fu-Chung Huang.

Even so, experts are incredibly hopeful.

Although new problems keep popping up, says Wetzstein, that’s not necessarily a bad thing. VR is near, and “it will be transformative.”

Image Credit: Shutterstock.com; Maurizio Pesce/Flickr; Fu-Chung Huang and Gordon Wetzstein, Stanford Computational Imaging Lab