The Star Wars universe is full of droids. Everywhere you turn, there are medical droids, exploration droids, labor droids, pilot droids, even battle droids. They carry out clearly defined tasks, often with a degree of independence, without needing to interact with people. In real life, we now have the technology to create many of them ourselves. But what about robots that can also interact with people? When it comes to the likes of R2-D2, C-3PO and Force Awakens newbie BB-8, it’s a much more mixed picture.

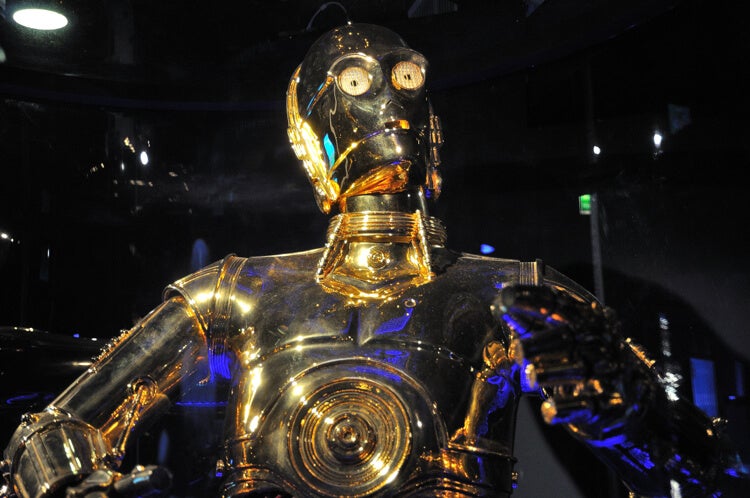

The two most famous Star Wars robots are themselves designed to carry out different tasks: R2-D2 is officially an astromech droid, for piloting and servicing starships, while C-3PO is a protocol droid, with knowledge of language and etiquette. They also look very different from one another, with C-3PO an adult-sized humanoid and R2-D2 a small trashcan on wheels. But it is clearly their social intelligence that makes them the fully realized and well-loved team members that they are.

When it comes to basic capabilities such as walking, talking, and sensing surroundings, we are getting steadily closer to what such droids would require. Advanced humanoid robots like Atlas, built by Google-owned Boston Dynamics, and NASA’s Valkyrie are already good at walking (and even dancing). The state of the art in speech recognition and synthesis is rapidly developing, too: Microsoft, for example, recently added speech-to-speech translation to Skype. And sensing technologies such as computer vision, and even artificial noses, are also becoming increasingly human-like.

Talk is not cheap

Unfortunately, creating the basic technical building blocks for either a humanoid C-3PO or a rolling-around R2-D2 is almost the easy part. The real challenge is putting those components together to enable robots to interact in a socially intelligent way.

To understand why this is so hard, think about what happens when people talk face to face. We use our voices, faces and bodies together in a rich, continuous way. A surprising amount of information is conveyed by non-verbal signals. The meaning of a simple word like “maybe” can be dramatically affected by all the other things a speaker is doing.

Real-world communication doesn’t take place in a context-free vacuum either. Other people may be entering and leaving the scene, while the history of the interaction and indeed all previous interactions can also have a large effect. And not only must the robot fully understand all the nuances of human communicative signals, it must also produce understandable and appropriate signals in response. We are talking about an immense challenge.

For this reason, even our most advanced robots generally operate in constrained environments such as a lab. They are capable of a limited amount of communication, and generally can only interact in very specific situations. All these limitations reduce the number of signals that the robot must understand and produce, but at the cost of natural social interaction.

Bot and sold

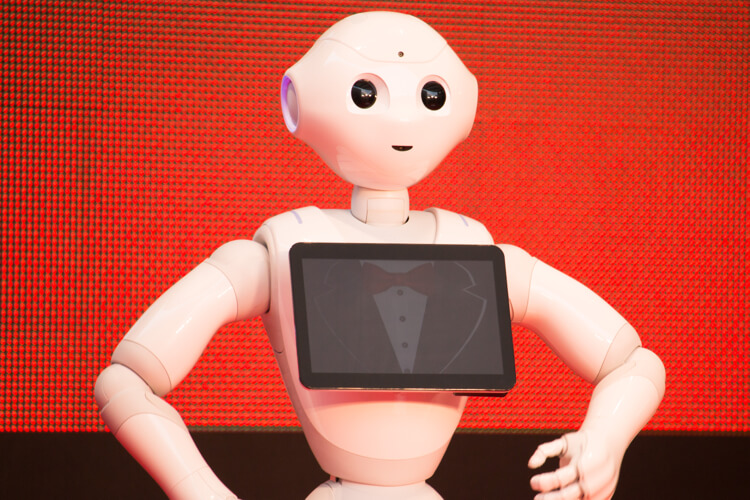

There is clearly a significant consumer appetite for more socially interactive robots, however. Already on the shelves is the Pepper robot, a life-sized unit produced in Japan that can answer questions, follow instructions and react to feelings and facial expressions. Consumer demand has been so high in its native country that since July 2015, each monthly run of 1,000 units has sold out in under a minute.

Meanwhile, the Jibo robot broke records on the crowdfunding site Indiegogo in July 2014 when it raised $1m (£0.7m) in less than a week as part of a fundraising drive that has now reached $60m. Invented at MIT in the US, the still unreleased robot’s abilities will include talking to and recognizing people and remembering their preferences.

Even these commercial success stories, however, can’t interact anywhere near as effectively as our favorite Star Wars droids. The key to developing truly socially intelligent robots such as C-3PO, R2-D2 and BB-8 appears to lie in another highly active research area: data science.

Our early attempts at interactive robots were generally based on pre-programmed rules (“If person says X, say Y. Else say Z.”). More recently, however, robot developers have switched to machine learning: recording interactions between humans or between humans and robots, then “teaching” the robot how to behave based on what that data shows. This allows the robot to be much more flexible and adaptive.
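To make the contrast concrete, here is a toy Python sketch of both approaches. Everything in it is hypothetical and chosen for illustration: the function names, the greeting rules, and the three "recorded" exchanges. The "learned" version is only a simple nearest-neighbour lookup over word overlap, far cruder than the machine learning real robot developers use, but it shows the key difference: its behaviour comes from example data rather than from hand-written rules.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


# Rule-based approach: hand-written "if person says X, say Y, else say Z".
def rule_based_reply(utterance: str) -> str:
    words = set(tokenize(utterance))
    if "hello" in words or "hi" in words:
        return "Hello there!"
    if "help" in words:
        return "How can I help?"
    return "Sorry, I don't understand."  # anything unanticipated falls through


# Data-driven approach: "train" on hypothetical recorded exchanges by storing
# each prompt's word counts alongside the reply a person gave.
def train(examples: list[tuple[str, str]]) -> list[tuple[Counter, str]]:
    return [(Counter(tokenize(prompt)), reply) for prompt, reply in examples]


def learned_reply(model: list[tuple[Counter, str]], utterance: str,
                  fallback: str = "Sorry, I don't understand.") -> str:
    """Return the reply whose recorded prompt shares the most words with the input."""
    query = Counter(tokenize(utterance))
    best_score, best_reply = 0, fallback
    for prompt_counts, reply in model:
        score = sum((query & prompt_counts).values())  # number of shared words
        if score > best_score:
            best_score, best_reply = score, reply
    return best_reply


# Hypothetical recorded human-human exchanges (made up for this sketch).
examples = [
    ("hello robot", "Hello there!"),
    ("can you help me find the exit", "The exit is to your left."),
    ("what time is it", "It is three o'clock."),
]
model = train(examples)

if __name__ == "__main__":
    question = "Where is the exit, please?"
    print(rule_based_reply(question))      # no rule matches this phrasing
    print(learned_reply(model, question))  # closest recorded prompt wins
```

The rule-based responder fails on "Where is the exit, please?" because no one wrote a rule for that phrasing, while the data-driven one picks the closest recorded exchange. Adding more recorded examples changes its behaviour without anyone rewriting rules, which is the flexibility the paragraph above describes.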

Within machine learning, we are also seeing big improvements. Previously, robots could only “learn” once the recordings had been processed by hand into a more abstract form, but newer techniques like deep learning are enabling robots to learn how to behave from raw data, making their potential much more open-ended. These techniques have already profoundly affected speech recognition and computer vision, and Google has recently open-sourced its in-house deep-learning toolkit TensorFlow, which should benefit researchers across the world.

In short, there’s still much work to be done before we are able to develop fully socially interactive robots such as R2-D2 and C-3PO. I’m afraid it’s unlikely that we will see a full version of either of them in any of our lifetimes. The good news is that this is a very active area of research, and data-science techniques are rapidly increasing robots’ social intelligence. We should be able to achieve exciting things in the not-too-distant future.

Image Credit: FlickrCC/Gordon Tarpley; Wikimedia Commons/Andreal90; FlickrCC/Dick Thomas Johnson

Mary Ellen Foster, Lecturer in Computing Science, University of Glasgow

This article was originally published on The Conversation. Read the original article.