The Last Frontiers of AI: Can Scientists Design Creativity and Self-Awareness?


Is creativity a uniquely human trait? What about self-awareness or intuition?

The line between human and machine is becoming blurrier by the day as startups, big companies, and research institutions all compete to build the next generation of advanced AI.

This arms race is ushering in a new era of AI, one that will prove its power not by mastering human games but by independently exhibiting ingenuity and creativity.

Sophisticated AI is undertaking increasingly complex tasks like stock market predictions, research synthesis, political speech writing—don’t worry, this article was still written by a human—and companies are beginning to pair deep learning with new robotics and digital manufacturing tools to create “smart manufacturing.”

But this is just the tip of the iceberg.

Hod Lipson, professor of engineering at Columbia University and the director of Columbia’s Creative Machines Lab, is pushing the next frontier of AI. It’s an era that will be defined by biology-inspired machines that can evolve, self-model, and self-reflect: machines that will generate new ideas and then build them.

Fueling Lipson’s work is the holy grail of AI: the pursuit of self-aware robots. For centuries, philosophers and theologians have debated the nature of self-awareness. Lipson thinks roboticists can weigh in on this debate, and perhaps even build self-awareness themselves.

Lipson is speaking at Singularity University’s Exponential Manufacturing summit next month, but I wanted a sneak peek into the creative machines he’s building.

“Can an AI design and make a new robot different from anything we've seen before?” asked Lipson during our conversation. Read on, and you shall see.

Do you have a favorite robot that you've worked on?

My favorite one right now is a robot that paints in oil on canvas. It actually grabs the paintbrush and paints. It's the most creative robot that we have, and it creates beautiful art.

This one is called PIX18, and it’s already the third generation. It has learned to paint much better than I can, and better than most people can. I keep some of the paintings hanging in my office. It’s painted my cat and my parents; that’s my favorite.

Can you tell me about your work at Columbia University’s Creative Machines Lab?

What we do is biology-inspired engineering. We try to learn from biology how to do many things, and we focus on challenges that traditionally you would think only nature could do.

Biology-inspired engineering is about learning from nature and then using what we learn to solve the hardest problems. It happens at all scales. It's not just copying nature at the surface level; it can also mean learning from nature at a deeper level, such as how nature uses materials or the adaptation processes that evolution uses.

One of my favorite topics we look into is: Can you make robots that self-replicate? Self-replication is traditionally not something you associate with machines. Most machines, for example, can’t recover from damage on their own, heal, or adapt. Until recently, machines couldn't even learn from their own experiences.

We are looking at what I think is the ultimate challenge in artificial intelligence and robotics—creating machines that are creative; machines that can invent new things; machines that can come up with new ideas and then make those very things.

Creativity is one of these last frontiers of AI. People still think that humans are superior to machines in their ability to create things, and we are looking at that challenge.

People also thought that human intuition was needed to master the Chinese game of Go, until very recently, when Lee Sedol lost to Google DeepMind’s AlphaGo. What are your thoughts on this?

AlphaGo is sort of the end of an era for AI.

Since the 1950s, people have been building AI to play board games, and this is probably the last board game. Now we can move on to bigger and more challenging things, and that challenge is real life. For example, computers still find it hard to understand how to drive through a busy Manhattan intersection with pedestrians, bicycles, and cars all moving. Apparently, that's even harder than the game of Go.

AI at the moment is very good at making decisions: at taking in a lot of data (big data) and reducing it to a single decision. For example, taking in all of the stock market data and deciding, "Should I buy, or should I sell?” Or taking in all of the data from a driverless car's cameras and radars, and deciding whether to keep going straight or turn. It's all about taking data and distilling it into a decision.

But there is another kind of intelligence, where we start with an idea, or with a need, and then we diverge: we expand it into many new ideas.

That's an entirely different kind of AI—it’s exploratory. We call it divergent AI, instead of convergent AI. That is what we're trying to do in our lab.

I think the new challenge of AI is about creativity. It's about creating new ideas. This is the next big frontier.

What is a specific application of divergent AI that the lab is working on?

It could be anything from new designs for an electronic circuit to a design for a new robot. We’re asking questions like, “Can an AI design and make a new robot that is different from anything we've seen before? Can it create?” Or, “Can a robot paint a painting? Can it create music that we would appreciate?”

Why is self-awareness, and the ability to self-model or self-reflect, so important to you and your work?

Self-awareness is, I think, the ultimate AI challenge. We're all working on it.

People working in AI and robotics have intermediate goals like, "Can we play chess? Can we play Go? Can we drive a car?"

But in the long term, if you look at the end game for AI, what's the ultimate thing? I think it is to create a sort of self-awareness.

It's almost a philosophical thing—like we're alchemists trying to recreate life—but ultimately I think, at least for many people, it’s the ultimate form of intelligence.

For centuries, philosophers, theologians, and psychologists have all debated, "What is self-awareness?" I think roboticists and people in AI can weigh in on this debate and offer a different window, one that's more quantitative and practical. We’re saying, "We're not just going to debate what self-awareness is, we're actually going to make it.”

If we can do this, I think we’ll finally understand what self-awareness is. For me, this is a grand challenge. It's the grand challenge of AI.

Creativity is a big challenge, but even greater than that is self-awareness. For a long time, in robotics and AI, we sometimes called it the "C" word—consciousness.


I was going to ask you why I haven’t seen that word on your website.

We don't say it. It's sort of this... It's almost mythical for us. It's not something that most people will admit they're looking for. It's the Holy Grail. Any new faculty member who says they're going for it will automatically be put in their place.

Our group is one of the ones looking at this, and I think we're beginning to crack this idea of self-awareness and consciousness. We definitely will get there; the question is whether I will get there in my lifetime. That's what most people want to know.

How might this advanced AI impact jobs in digital manufacturing in the next ten years?

When it comes to manufacturing, there are two angles.

One is the simple automation, where we're seeing robots that can work side-by-side with humans. It's becoming easier to put robots and automation into a factory. It used to be that you had to have skilled programmers and expensive robots with special manufacturing floors designed for the robots. All of these things right now are sort of going away.

You can literally buy a relatively low-cost robot, one that costs less than a human's annual salary, and put it right next to a human with no safety issues. You can teach it to do its job almost as you would teach a human. A lot of these barriers are falling away, and we're seeing that happen in manufacturing. A lot of this, I’d say most of it, has to do with AI, but it also has to do with the exponential growth of cheaper, faster, and better hardware.

The other side of manufacturing that AI is disrupting is design. Manufacturing and design always go hand-in-hand. While manufacturing is the more visible thing that we can film, watch, and show, the design behind the scenes is tightly linked to it.

When AI creeps into the design world through these new types of creative AI, you suddenly expand what you can manufacture because the AI on the design side can take advantage of your manufacturing tools in new ways.

In your 2007 TED talk, you said, “I think it’s important that we get away from this idea of designing machines manually, but actually let them evolve and learn, like children, and perhaps that's the way we'll get there.” It’s been almost a decade since then. Looking back, have we moved in the direction you were hoping?

It has happened faster than I thought. In AI there were two schools of thought that were competing and butting heads politically and financially. There’s the top-down school, built on logic, programming, and search, and then there is the machine learning approach.

The machine learning approach says, “Forget about programming robots, forget about programming AI, you just make it learn, and it will figure out everything on its own from data.” The other school of thought says, “We sit down, write algorithms, and program the robot to do what it needs to do.”

For a long time, human programming was far better than machine learning. You could do machine learning for small things, but if you needed something serious, important, or difficult, you would bring in an expert and he or she would program the computer.

But over the last decade—since 2007 when I gave my TED Talk until now—there has been case after case of machine learning surpassing human programming and ability.

For example, people have been talking about driverless cars forever, but until 2012, nobody could program them to do what they need to do—to understand what's happening on the road. Nobody could figure it out. But finally, deep learning algorithms came through and now machines can recognize and understand what they're seeing better than humans can. That's why we're going to have driverless cars within a few years.

I think the machine learning approach has played out perfectly, and we're just at the beginning. It's going to accelerate.

If machine learning has finally surpassed human ability, what is an obstacle that we will need to overcome to get to that next level of AI that you're talking about?

Perception was the big stumbling block, and it has been solved over the last year or two. Computers were blind. We had cameras, fast computers, and everything we needed, but computers couldn’t understand what they were seeing. That was a major hurdle.

It meant that computers couldn't deal with uncertain open environments and could only work in factories, mines, agricultural fields, or places where the structure is relatively static. But they couldn't work in homes, drive around, or work outside with people.

All of this machine learning paid off. We now have computers that don't just have eyes, but have a brain that can understand what they're seeing. That is opening up so many doors to new applications. I think that was the big hurdle.

What's the next one? I think it is creativity.

This is why I've been working on it. I think it's the ability, not just to analyze and understand what you're seeing, but also to be able to create, and imagine new things. And ultimately, that is on the path to self-awareness.

This is the first year Singularity University has hosted Exponential Manufacturing.

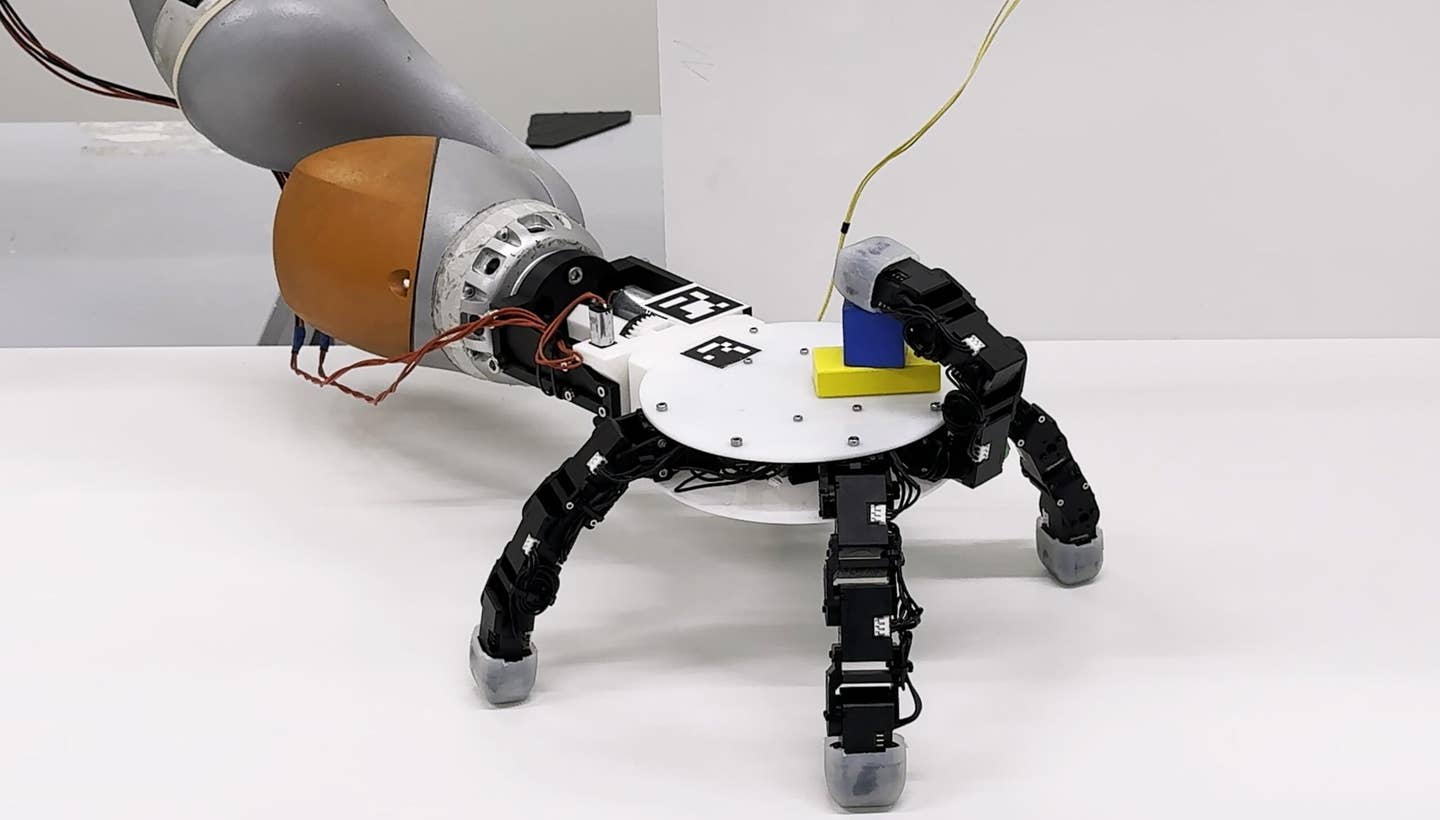

Image Credit: Shutterstock.com, Creative Machines Lab

Alison tells the stories of purpose-driven leaders and is fascinated by various intersections of technology and society. When not keeping a finger on the pulse of all things Singularity University, you'll likely find Alison in the woods sipping coffee and reading philosophy (new book recommendations are welcome).
