One advantage humans have over robots is that we’re good at quickly passing on our knowledge to each other. A new system developed at MIT now allows anyone to coach robots through simple tasks and even lets them teach each other.

Typically, robots learn tasks through demonstrations by humans, or through hand-coded motion planning systems where a programmer specifies each of the required movements. But the former approach is not good at translating skills to new situations, and the latter is very time-consuming.

Humans, on the other hand, can typically demonstrate a simple task, like how to stack logs, just once before someone else picks it up. That person can then easily adapt the knowledge to new situations, say if they come across an odd-shaped log or the pile collapses.

In an attempt to mimic this kind of adaptable, one-shot learning, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) combined motion planning and learning through demonstration in an approach they’ve dubbed C-LEARN.

First, a human teaches the robot a series of basic motions using an interactive 3D model on a computer. Using the mouse to show it how to reach and grasp various objects in different positions helps the machine build up a library of possible actions.

The operator then shows the robot a single demonstration of a multistep task, and using its database of potential moves, it devises a motion plan to carry out the job at hand.
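
To make the two stages concrete, here is a toy sketch in Python. It is not the researchers' algorithm and every name in it (`Primitive`, `build_library`, `plan_from_demo`) is hypothetical. It only illustrates the idea described above: motions taught in advance are stored in a library, and a single demonstrated sequence is matched against that library to produce targets for a new scene.

```python
from dataclasses import dataclass

# Hypothetical names throughout: a toy illustration, not C-LEARN's code.


@dataclass(frozen=True)
class Primitive:
    """A basic motion taught in advance, e.g. how to approach a kind of object."""
    action: str        # e.g. "reach" or "grasp"
    object_kind: str   # e.g. "cylinder"
    offset: tuple      # assumed gripper offset from the object (x, y, z), metres


def build_library(primitives):
    """Index the taught motions by (action, object kind) for lookup."""
    return {(p.action, p.object_kind): p for p in primitives}


def plan_from_demo(demo_steps, library, scene):
    """Turn one demonstrated sequence into gripper targets for a new scene.

    demo_steps: list of (action, object_name) pairs from the single demonstration.
    scene: maps object_name -> (object_kind, position) in the new situation.
    """
    plan = []
    for action, name in demo_steps:
        kind, position = scene[name]
        primitive = library.get((action, kind))
        if primitive is None:
            raise LookupError(f"no taught motion for {action!r} on a {kind}")
        # Re-anchor the taught offset to wherever the object is now.
        target = tuple(p + o for p, o in zip(position, primitive.offset))
        plan.append((action, name, target))
    return plan


library = build_library([
    Primitive("reach", "cylinder", (0.0, 0.0, 0.10)),
    Primitive("grasp", "cylinder", (0.0, 0.0, 0.0)),
])
demo = [("reach", "can"), ("grasp", "can")]          # the single demonstration
scene = {"can": ("cylinder", (0.5, 0.2, 0.0))}       # the object has moved
plan = plan_from_demo(demo, library, scene)
```

Because the demonstration refers to objects rather than fixed coordinates, the same two-step sequence still yields sensible targets when the can is somewhere else. A real system would hand those targets to a motion planner rather than use them directly.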

“This approach is actually very similar to how humans learn in terms of seeing how something’s done and connecting it to what we already know about the world,” says Claudia Pérez-D’Arpino, a PhD student who wrote a paper on C-LEARN with MIT Professor Julie Shah, in a press release.

“We can’t magically learn from a single demonstration, so we take new information and match it to previous knowledge about our environment.”

The robot successfully carried out tasks 87.5 percent of the time on its own, but when a human operator was allowed to correct minor errors in the interactive model before the robot carried out the task, the accuracy rose to 100 percent.

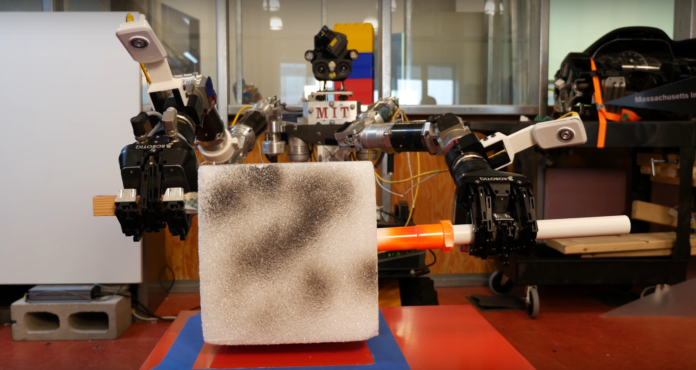

Most importantly, the robot could teach the skills it learned to another machine with a completely different configuration. The researchers tested C-LEARN on a new two-armed robot called Optimus that sits on a wheeled base and is designed for bomb disposal.

But in simulations, they were able to seamlessly transfer Optimus’ learned skills to CSAIL’s 6-foot-tall Atlas humanoid robot. They haven’t yet tested Atlas’ new skills in the real world, and they had to give Atlas some extra information on how to carry out tasks without falling over, but the demonstration shows that the approach can allow very different robots to learn from each other.

The research, which will be presented at the IEEE International Conference on Robotics and Automation in Singapore later this month, could have important implications for the large-scale roll-out of robot workers.

“Traditional programming of robots in real-world scenarios is difficult, tedious, and requires a lot of domain knowledge,” says Shah in the press release.

“It would be much more effective if we could train them more like how we train people: by giving them some basic knowledge and a single demonstration. This is an exciting step toward teaching robots to perform complex multi-arm and multi-step tasks necessary for assembly manufacturing and ship or aircraft maintenance.”

The MIT researchers aren’t the only people investigating the field of so-called transfer learning. The RoboEarth project and its spin-off RoboHow were both aimed at creating a shared language for robots and an online repository that would allow them to share their knowledge of how to carry out tasks over the web.

Google DeepMind has also been experimenting with ways to transfer knowledge from one machine to another, though in its case the aim is to carry skills learned in simulation over into the real world.

A lot of their research involves deep reinforcement learning, in which robots learn how to carry out tasks in virtual environments through trial and error. But transferring this knowledge from highly engineered simulations into the messy real world is not so simple.

So they have found a way to bridge the gap. A model first learns how to carry out a task in simulation using deep reinforcement learning, and that knowledge is then transferred to a so-called progressive neural network that controls a real-world robotic arm. This allows the system to take advantage of the accelerated learning possible in a simulation while still learning effectively in the real world.
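
The wiring behind a progressive network can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not DeepMind's implementation: the weights are made-up numbers, each "column" has a single hidden layer, and the function names are hypothetical. The point it shows is that the simulation-trained column is kept frozen while a new column, trained on the real robot, receives the frozen column's hidden features through an extra "lateral" connection.

```python
def relu(v):
    """Element-wise rectifier."""
    return [max(0.0, x) for x in v]


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def add(a, b):
    """Element-wise vector sum."""
    return [x + y for x, y in zip(a, b)]


# Column 1: stands in for a policy already trained in simulation.
# These weights are frozen and never updated again (values are made up).
SIM_HIDDEN = [[1.0, -1.0], [0.5, 0.5]]

# Column 2: fresh weights that would be trained on the real robot (made up).
REAL_HIDDEN = [[0.0, 1.0], [1.0, 0.0]]
REAL_OUT = [[1.0, 1.0]]

# Lateral connection: feeds the simulation column's hidden features
# into the real-world column's output layer.
LATERAL = [[2.0, 0.0]]


def real_column_forward(x):
    """Forward pass of the real-world column, reusing frozen simulation features."""
    h_sim = relu(matvec(SIM_HIDDEN, x))     # frozen features from simulation
    h_real = relu(matvec(REAL_HIDDEN, x))   # features learned on the real robot
    return add(matvec(REAL_OUT, h_real), matvec(LATERAL, h_sim))


action = real_column_forward([1.0, 0.5])
```

Only `REAL_HIDDEN`, `REAL_OUT` and `LATERAL` would change during real-world training in this sketch, so nothing learned in simulation is overwritten; the new column simply decides how much of it to reuse.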

These kinds of approaches make life easier for data scientists trying to build new models for AI and robots. As James Kobielus notes in InfoWorld, the approach “stands at the forefront of the data science community’s efforts to invent ‘master learning algorithms’ that automatically gain and apply fresh contextual knowledge through deep neural networks and other forms of AI.”

If you believe those who say we’re headed towards a technological singularity, you can bet transfer learning will be an important part of that process.

Image Credit: MITCSAIL/YouTube