Picture this: you’re at a boisterous party, trying to listen in on a group conversation. People are talking over each other and going a mile a minute, but you can only pick up snippets from one person at a time.

Confusing? Sure! Frustrating? Absolutely!

Yet this is how neuroscientists eavesdrop on all the electrical chatter going on in our heads. So much depends on understanding these neuronal conversations: deciphering their secret language is key to understanding—and manipulating—the memories, habits, and other cognitive processes that define us.

To monitor the signals zipping through a network of neurons, scientists often stick a tiny electrode into individual neurons and track their activity one cell at a time. It’s not easy to piece together an entire conversation that way: the process is tedious and prone to serious misunderstandings.

“If you put an electrode in the brain, it’s like trying to understand a phone conversation by hearing only one person talk,” said Dr. Ed Boyden at MIT. A pioneer of optogenetics and of expansion microscopy (the so-called “inflatable brain”), the neuroscience wunderkind has spent the past decade developing creative neurotechnology toolkits that have sparked excitement and garnered praise.

Now Boyden may have a way to tap into an entire neuronal group chat.

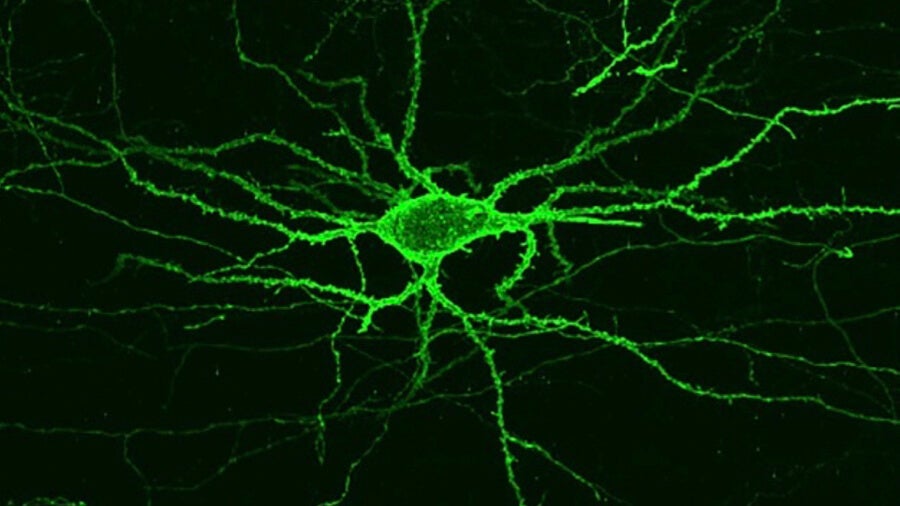

With the help of a robot, the team designed a protein that tunnels into the outer shell, or membrane, of a neuron. If there’s a slight change in the voltage, as when the neuron fires, the protein immediately transforms into a fluorescent torch that’s easy to spot under a microscope.

Across a whole network of neurons, the embedded sensors spark like fireworks.

“Now we can record the neural activity of many cells in a neural circuit and hear them as they talk to each other,” said Boyden.

But the new sensor isn’t even the big advance. The robotic system, pieced together from easily available components, allows other neuroscientists to develop their own sensors.

By releasing the blueprint in Nature Chemical Biology, Boyden and his team hope the community will rapidly evolve stronger and more sensitive activity probes for the brain, lighting the way to finally figuring out what exactly a thought, a decision, or a feeling is.

The Neural Lighthouse

To be fair, Boyden is far from the first to come up with these so-called “voltage sensors.”

But finding the perfect one has eluded neuroscientists for two decades. To precisely report neuronal firing, these proteins need to turn on their light beams almost as soon as the neuron fires, with a reaction time of around a thousandth of a second (a millisecond) or faster, because a single neuronal spike is over in roughly that time.

What’s more, they also need to find the best seat in the house: smack on the neuronal membrane, where the voltage change happens, rather than inside the cell.

Finally, they need to shine long and bright. Lots of sensors lose their glow rapidly after exposure to light—dubbed “photobleaching,” the bane of neural cartographers. To match neuronal activity to behaviors, the indicators need to stay bright for at least several seconds.

Developing these sensors has traditionally been an extremely tedious affair. Scientists often start with a known sensor, swap some of its constituent molecules with others like Lego pieces, test the resulting new sensor in cells, and hope for the best. The process can take weeks, if not months.

Boyden’s robotic system, on the other hand, screens hundreds of thousands of potential new sensors in a few hours.

It works like this:

In a process that resembles accelerated evolution, the team started with a known light-sensitive sensor and randomly introduced mutations into it, making 1.5 million (!!) versions in total.
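For readers who want to picture what “random mutations” means, here is a toy Python sketch of random point mutagenesis. It assumes each variant differs from its parent by one to three single amino acid swaps; the parent sequence, the mutation counts, the library size, and the helper names (`mutate`, `make_library`) are all hypothetical, and in a real lab the changes are made to the DNA that encodes the protein, not typed into software.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard amino acids

def mutate(parent: str, n_mutations: int, rng: random.Random) -> str:
    """Return a copy of `parent` with `n_mutations` random point substitutions."""
    seq = list(parent)
    positions = rng.sample(range(len(seq)), n_mutations)  # distinct sites
    for pos in positions:
        # Swap in any amino acid other than the one already there.
        seq[pos] = rng.choice([aa for aa in AMINO_ACIDS if aa != seq[pos]])
    return "".join(seq)

def make_library(parent: str, size: int, max_mutations: int = 3, seed: int = 0) -> list[str]:
    """Build `size` variants, each carrying 1..max_mutations substitutions (assumed range)."""
    rng = random.Random(seed)
    return [mutate(parent, rng.randint(1, max_mutations), rng) for _ in range(size)]

# Hypothetical toy parent sequence: NOT the real QuasAr2 sequence.
parent = "MSGLVEKAWRLTPYDNQHFICG"
library = make_library(parent, size=1000)
print(len(library), library[0])
```

Scaling `size` up toward the study’s 1.5 million is just a bigger number here; the hard part in the lab is building and testing that many proteins.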

They then inserted all of the variants into mammalian cells—one variant per cell—and waited for the sensors to reach the cell’s membrane. Next, they programmed a microscope to automatically take photos of the cells.

The software that steers the microscope is also flexible. “This version was modified from previous versions to be compatible with any microscope…camera and/or other optional hardware,” the authors said.

Once the microscope identified each individual cell, the system checked whether its sensor variant satisfied the requirements, and a robotically controlled glass tube sucked up the cells that passed, one at a time. Here, the team specifically focused on two criteria: the protein’s location and its brightness.
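The two criteria can be expressed as a simple per-cell filter. The sketch below is an illustration, not the team’s image-analysis software: it assumes the microscope reports a mean fluorescence for each cell’s edge and for its interior, and the class, the thresholds (`min_brightness`, `min_ratio`), and the example numbers are all made up.

```python
from dataclasses import dataclass

@dataclass
class Cell:
    variant_id: str
    membrane_intensity: float  # mean fluorescence along the cell's edge (arbitrary units)
    interior_intensity: float  # mean fluorescence inside the cell (arbitrary units)

def localization_ratio(cell: Cell) -> float:
    """Membrane-to-interior ratio: higher means more sensor sits on the membrane."""
    return cell.membrane_intensity / max(cell.interior_intensity, 1e-9)

def passes_screen(cell: Cell, min_brightness: float, min_ratio: float) -> bool:
    """Hypothetical rule: keep a cell only if its sensor is bright AND membrane-bound."""
    return (cell.membrane_intensity >= min_brightness
            and localization_ratio(cell) >= min_ratio)

# Made-up measurements for three cells, each carrying a different variant.
cells = [
    Cell("v1", membrane_intensity=900.0, interior_intensity=200.0),  # bright, on the membrane
    Cell("v2", membrane_intensity=950.0, interior_intensity=900.0),  # bright, but stuck inside
    Cell("v3", membrane_intensity=150.0, interior_intensity=30.0),   # on the membrane, too dim
]
picked = [c.variant_id for c in cells
          if passes_screen(c, min_brightness=500.0, min_ratio=2.0)]
print(picked)  # ['v1']
```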

In this way, the team rapidly identified the top five candidates, and then subjected them to another round of mutations generating eight million (!!!) new variants. With help from their trusty robot cell picker, they narrowed the best performers down to seven proteins, which they then characterized using good old electrical recordings to see how fast the sensors responded to voltage fluctuations.

In the end, only two sensors met all criteria, which the authors named Archon1 and Archon2.

Normally it’s excruciatingly hard to find sensors that excel in multiple domains, the authors say. The robotic screen works so well because it acts like a multi-round game show. To remain a candidate, each variant has to stand out in each round of testing, whether for its brightness, location, or speed.
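The game-show logic boils down to a chain of filters in which a variant is eliminated the moment it fails any round. This Python sketch assumes made-up scores and cutoffs for each variant; in the study, speed was measured afterwards with electrical recordings rather than by the robot, so the “speed” round here simply stands in for that final check.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Variant:
    name: str
    brightness: float      # relative to the parent sensor (1.0 = same); hypothetical
    membrane_ratio: float  # membrane vs. interior fluorescence; hypothetical
    response_ms: float     # time to report a voltage step, in milliseconds; hypothetical

Round = tuple[str, Callable[[Variant], bool]]

def run_funnel(variants: list[Variant], rounds: list[Round]) -> list[Variant]:
    """Apply each test in order; a variant must pass every round to survive."""
    survivors = variants
    for round_name, test in rounds:
        survivors = [v for v in survivors if test(v)]
        print(f"after {round_name}: {len(survivors)} left")
    return survivors

# Arbitrary cutoffs chosen for illustration only.
rounds: list[Round] = [
    ("brightness", lambda v: v.brightness >= 2.0),
    ("location", lambda v: v.membrane_ratio >= 3.0),
    ("speed", lambda v: v.response_ms <= 1.0),
]

variants = [
    Variant("A", brightness=3.1, membrane_ratio=4.0, response_ms=0.9),  # good at everything
    Variant("B", brightness=5.0, membrane_ratio=1.2, response_ms=0.5),  # bright but mislocalized
    Variant("C", brightness=2.5, membrane_ratio=3.5, response_ms=4.0),  # too slow
    Variant("D", brightness=0.8, membrane_ratio=6.0, response_ms=0.3),  # too dim
]
winners = run_funnel(variants, rounds)
print([v.name for v in winners])  # ['A']
```

Putting the cheap, high-throughput tests (brightness, location) first and the slow, labor-intensive one (speed) last means the expensive measurement only has to run on a handful of survivors.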

“(It’s) a very clever high-throughput screening approach,” said Harvard professor Dr. Adam Cohen, who was not involved in this study. Cohen previously developed a sensor called QuasAr2 (get it?) that Boyden used here as a starting point to generate his mutant forms.

Brain Fireworks

Putting Archon1 to the test, the team had cortical neurons in mice produce the protein in their membranes. These cells come from the outermost region of the brain, the cortex, which is often considered the seat of higher cognitive functions.

Archon1 performed fabulously in brain slices from these mice. When illuminated with reddish-orange light, the protein glowed at a longer, redder wavelength, and the intensity of that glow tracked the neuron’s voltage swings: each brightness level corresponds to a particular voltage.

The sensor was extremely quick on its feet, capable of reporting each time a neuron fired in near real time.
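One common way to turn a sensor’s glow into spike times is to express the fluorescence as a fractional change from baseline (ΔF/F) and flag each upward crossing of a threshold. The sketch below is a simplified illustration under stated assumptions, not the authors’ analysis pipeline: it takes the median of the trace as the baseline, uses an arbitrary threshold of 0.2, and runs on a made-up trace containing two spikes.

```python
from statistics import median

def delta_f_over_f(trace: list[float]) -> list[float]:
    """Fractional change from baseline; the baseline is ASSUMED to be the trace median."""
    baseline = median(trace)
    return [(f - baseline) / baseline for f in trace]

def detect_spikes(dff: list[float], threshold: float) -> list[int]:
    """Return frame indices where the signal crosses upward through `threshold`."""
    return [i for i in range(1, len(dff))
            if dff[i] >= threshold and dff[i - 1] < threshold]

# Made-up fluorescence trace (arbitrary units), one value per camera frame.
trace = [100.0, 101.0, 99.0, 100.0, 140.0, 118.0, 100.0, 99.0, 100.0, 145.0, 102.0]
dff = delta_f_over_f(trace)
spikes = detect_spikes(dff, threshold=0.2)  # threshold is an arbitrary choice
print(spikes)  # [4, 9]
```

If the camera captured, say, 1,000 frames per second (another assumption), those indices would correspond to spikes at 4 ms and 9 ms.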

The team also tested Archon1 in two of neuroscience’s darling translucent animal models: the zebrafish and a tiny worm called C. elegans. Don’t underestimate these critters: zebrafish are often used to study how the brain encodes vision, hearing, movement, or fear, whereas C. elegans has shed light on the circuits that drive eating, socializing, and even sex.

Their see-through bodies make it particularly easy to watch neurons light up in action, thanks to a higher signal-to-noise ratio. As in the mouse brain, Archon1 performed beautifully, rapidly emitting light that lasted at least eight minutes.

“(This) supports recordings of neural activity over behaviorally relevant timescales,” the authors said.

Even cooler, Archon1 can be used in conjunction with optogenetic tools, since it is read out with reddish-orange light rather than the blue light that switches on many optogenetic proteins. In a proof-of-concept, the team used blue light to activate a neuron in C. elegans and watched Archon1 light up in response. That’s handy visual feedback, because neuroscientists often have to rely on electrical recordings to see whether their optogenetic tricks worked.

Brighter Future

The team is now looking to test their sensor in living mice as the animals perform behaviors and tasks.

The sensor “opens up the exciting possibility of simultaneous recordings of large populations of neurons” and of capturing each individual firing from every single neuron, the authors said. We may soon be watching neural computations unfold in real time under the microscope.

And the best may be yet to come. Scientific-grade cameras are increasingly capable of capturing images at faster speeds, at higher resolutions, and across a broader field of view. Mapping the brain with Archon1 and future-generation sensors is likely to yield buckets of new findings and theories about how the brain works.

“Over the next five years or so we’re going to try to solve some small brain circuits completely,” said Boyden.

Image Credit: Rost9 / Shutterstock.com