This New Chip Design Could Make Neural Nets More Efficient and a Lot Faster

Neural networks running on GPUs have achieved some amazing advances in artificial intelligence, but the two are accidental bedfellows. IBM researchers hope a new chip design tailored specifically to run neural nets could provide a faster and more efficient alternative.

It wasn’t until the turn of this decade that researchers realized GPUs (graphics processing units) designed for video games could be used as hardware accelerators to run much bigger neural networks than previously possible.

That was thanks to these chips’ ability to carry out lots of computations in parallel rather than having to work through them sequentially like a traditional CPU. That’s particularly useful for simultaneously calculating the weights of the hundreds of neurons that make up today’s deep learning networks.

While the introduction of GPUs saw progress in the field explode, these chips still separate processing and memory, which means a lot of time and energy is spent shuttling data between the two. That has prompted research into new memory technologies that can both store and process this weight data at the same location, providing a speed and energy efficiency boost.

This new class of memory devices relies on adjusting their resistance levels to store data in analog, that is, on a continuous scale rather than as the binary 1s and 0s of digital memory. And because information is stored in the conductance of the memory units, it’s possible to carry out calculations by simply applying a voltage across all of them and letting the physics do the work.
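To make the idea of computing with physics concrete, here is a minimal sketch in plain Python. It assumes an idealized grid in which each cell's conductance stands in for one weight and each row voltage stands in for one input. The function name `crossbar_currents` and all the numbers are illustrative and not taken from the IBM work, and the sketch ignores device noise and how negative weights would be represented.

```python
# Hypothetical sketch of an analog multiply-accumulate on a grid of memory cells.
# conductances[i][j] (siemens) stands in for the weight linking input i to output j;
# voltages[i] (volts) stands in for input i. Values are illustrative only.

def crossbar_currents(conductances, voltages):
    """Return the current (amperes) collected on each column wire.

    Ohm's law gives the current through a single cell: I = G * V.
    Kirchhoff's current law adds up the cell currents on a shared column.
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for row, v in zip(conductances, voltages):
        for j, g in enumerate(row):
            currents[j] += g * v
    return currents


# Three input rows feeding two output columns.
G = [
    [1.0e-6, 2.0e-6],
    [3.0e-6, 0.5e-6],
    [2.0e-6, 1.0e-6],
]
V = [0.2, 0.1, 0.3]

I = crossbar_currents(G, V)
print(I)  # one weighted sum per column, all produced in a single step
```

In hardware the two loops would not exist: every cell would contribute its current at the same moment, which is where the hoped-for speed and energy savings come from.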

But inherent physical imperfections in these devices make their behavior inconsistent, so attempts to use them to train neural networks have so far resulted in markedly lower classification accuracies than training on GPUs.

“We can perform training on a system that goes faster than GPUs, but if it is not as accurate in the training operation that’s no use,” said Stefano Ambrogio, a postdoctoral researcher at IBM Research who led the project, in an interview with Singularity Hub. “Up to now there was no demonstration of the possibility of using these novel devices and being as accurate as GPUs.”

That was until now. In a paper published in the journal Nature last week, Ambrogio and colleagues describe how they used a combination of emerging analog memory and more traditional electronic components to create a chip that matches the accuracy of GPUs while running faster and on a fraction of the energy.

The reason these new memory technologies struggle to train deep neural networks is that the process involves nudging the weight of each neuron up and down thousands of times until the network is perfectly aligned. Altering the resistance of these devices requires their atomic structure to be reconfigured, and this process isn’t identical each time, says Ambrogio. The nudges aren’t always exactly the same strength, which results in imprecise adjustment of the neurons’ weights.
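A toy simulation can show why inconsistent nudges matter. The sketch below assumes each nudge lands at its nominal size multiplied by a random factor drawn from a Gaussian. That noise model, the `spread` parameter, and the function name are assumptions made for illustration, not measurements of real PCM devices.

```python
import random


def nudge_up_and_down(n_pairs, step, spread, rng):
    """Nudge a weight up and then down by the same nominal step, n_pairs times.

    Each nudge is scaled by a factor drawn from a Gaussian with mean 1 and
    standard deviation `spread` (an assumed noise model); spread=0 is an
    ideal device whose nudges cancel exactly.
    """
    weight = 0.0
    for _ in range(n_pairs):
        weight += step * rng.gauss(1.0, spread)  # nudge up
        weight -= step * rng.gauss(1.0, spread)  # nudge down
    return weight


rng = random.Random(0)  # fixed seed so the run is repeatable
ideal = nudge_up_and_down(1000, 0.001, 0.0, rng)
noisy = nudge_up_and_down(1000, 0.001, 0.5, rng)

print("ideal device:", ideal)  # the ups and downs cancel
print("noisy device:", noisy)  # the weight drifts away from zero
```

On the ideal device the weight returns to where it started. On the noisy one the errors accumulate, and the weight ends up somewhere the training algorithm never asked for.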

The researchers got around this problem by creating “synaptic cells,” each corresponding to an individual neuron in the network and featuring both long- and short-term memory. Each cell consisted of a pair of Phase Change Memory (PCM) units, which store weight data in their resistance, and a combination of three transistors and a capacitor, which stores weight data as an electrical charge.

PCM is a form of “non-volatile memory,” which means it retains stored information even when there is no external power source, while the capacitor is “volatile,” so it can only hold its electrical charge for a few milliseconds. But the capacitor has none of the variability of the PCM devices, and so can be programmed quickly and accurately.

When the network is trained on images to complete a classification task, only the capacitor’s weights are updated. After several thousand images have been seen, the weight data is transferred to the PCM unit for long-term storage.
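The division of labor between the two kinds of memory can be sketched as follows. This is a simplified illustration rather than the researchers' implementation: the `SynapticCell` class, the Gaussian write noise, and the `TRANSFER_EVERY` interval are all hypothetical, and the sketch ignores the capacitor's charge leakage.

```python
import random


class SynapticCell:
    """Toy model of one cell with a short-term and a long-term store."""

    def __init__(self, rng, pcm_spread=0.3):
        self.rng = rng
        self.pcm_spread = pcm_spread  # assumed write variability of the PCM
        self.pcm = 0.0                # long-term store: noisy to write
        self.capacitor = 0.0          # short-term store: accurate to write

    @property
    def weight(self):
        # The effective weight is the sum of both stores.
        return self.pcm + self.capacitor

    def update(self, delta):
        # Training nudges only ever touch the capacitor.
        self.capacitor += delta

    def transfer(self):
        # Move the accumulated change into PCM with an imprecise write,
        # then clear the capacitor.
        self.pcm += self.capacitor * self.rng.gauss(1.0, self.pcm_spread)
        self.capacitor = 0.0


TRANSFER_EVERY = 4000  # hypothetical stand-in for "several thousand images"

cell = SynapticCell(random.Random(1))
transfers = 0
for image in range(1, 12001):
    cell.update(1e-4)  # illustrative weight nudge for this image
    if image % TRANSFER_EVERY == 0:
        cell.transfer()
        transfers += 1

print(transfers, cell.weight)
```

The point of the arrangement is that the imprecise PCM write happens only three times in this run, while the 12,000 small nudges all land on the accurate capacitor.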

The variability of PCM means there’s still a chance the transfer of the weight data could contain errors, but because the unit is only updated occasionally, it’s possible to double-check the conductance without adding too much complexity to the system. This is not feasible when training directly on PCM units, said Ambrogio.
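One common way to double-check a noisy write is to read the value back and correct whatever error remains. The loop below sketches that general write-and-verify idea. It is not the procedure described in the paper, and the function name, noise model, tolerance, and retry limit are all assumptions.

```python
import random


def write_with_verify(target, rng, spread=0.3, tolerance=0.01, max_tries=20):
    """Program a value with noisy writes, reading back after each one.

    Every write attempts to close the remaining gap to `target`, but lands
    at that correction scaled by a Gaussian factor (an assumed noise model).
    Returns the final stored value and the number of writes used.
    """
    value = 0.0
    tries = 0
    while abs(target - value) > tolerance and tries < max_tries:
        error = target - value                    # read back and compare
        value += error * rng.gauss(1.0, spread)   # imprecise corrective write
        tries += 1
    return value, tries


value, tries = write_with_verify(1.0, random.Random(2))
print(value, tries)
```

Each extra check costs time, which is affordable for an occasional transfer but would add up quickly if it were needed after every single training nudge.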

To test their device, the researchers trained their network on a series of popular image recognition benchmarks, achieving accuracy comparable to Google’s leading neural network software TensorFlow. But importantly, they predict that a fully built-out chip would be 280 times more energy-efficient than a GPU and would be able to carry out 100 times as many operations per square millimeter.

It’s worth noting that the researchers haven’t fully built out the chip. While real PCM units were used in the tests, the other components were simulated on a computer. Ambrogio said they wanted to check that the approach was viable before dedicating time and effort to building a full chip.

They decided to use real PCM devices because simulations of these are not yet very reliable, he said. Simulations of the other components are, and he’s highly confident they will be able to build a complete chip based on this design.

The design is also only able to compete with GPUs on fully connected neural networks, where each neuron is connected to every neuron in the previous layer, Ambrogio said. Many neural networks are not fully connected or only have certain layers fully connected.

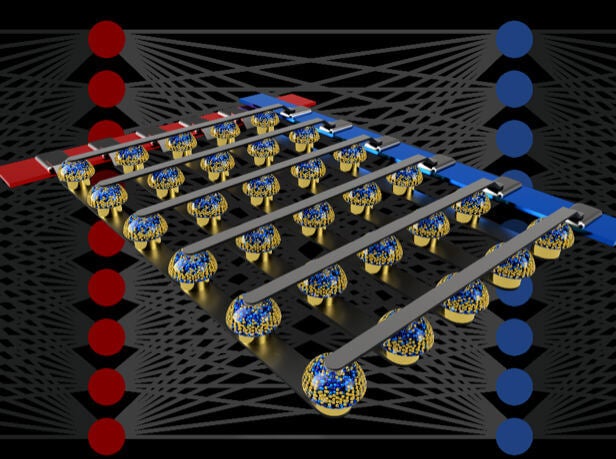

"Crossbar arrays of non-volatile memories can accelerate the training of fully connected neural networks by performing computation at the location of the data." Credit: IBM Research

But Ambrogio said the final chip would be designed so it could collaborate with GPUs, processing fully connected layers while the GPUs tackle the others. He also thinks a more efficient way of processing fully connected layers could lead to them being used more widely.

What could such a specialized chip make possible?

Ambrogio said there are two main applications: bringing AI to personal devices and making datacenters far more efficient. The latter is a major concern for big tech companies, whose servers burn through huge sums in electricity bills.

Implementing AI directly in personal devices would spare users from having to share their data over the cloud, boosting privacy, but Ambrogio said the more exciting prospect is the personalization of AI.

“This neural network implemented in your car or smartphone is then also continuously learning from your experience,” he said. “If you have a phone you talk to, it specializes to your voice, or your car can specialize to your particular way of driving.”

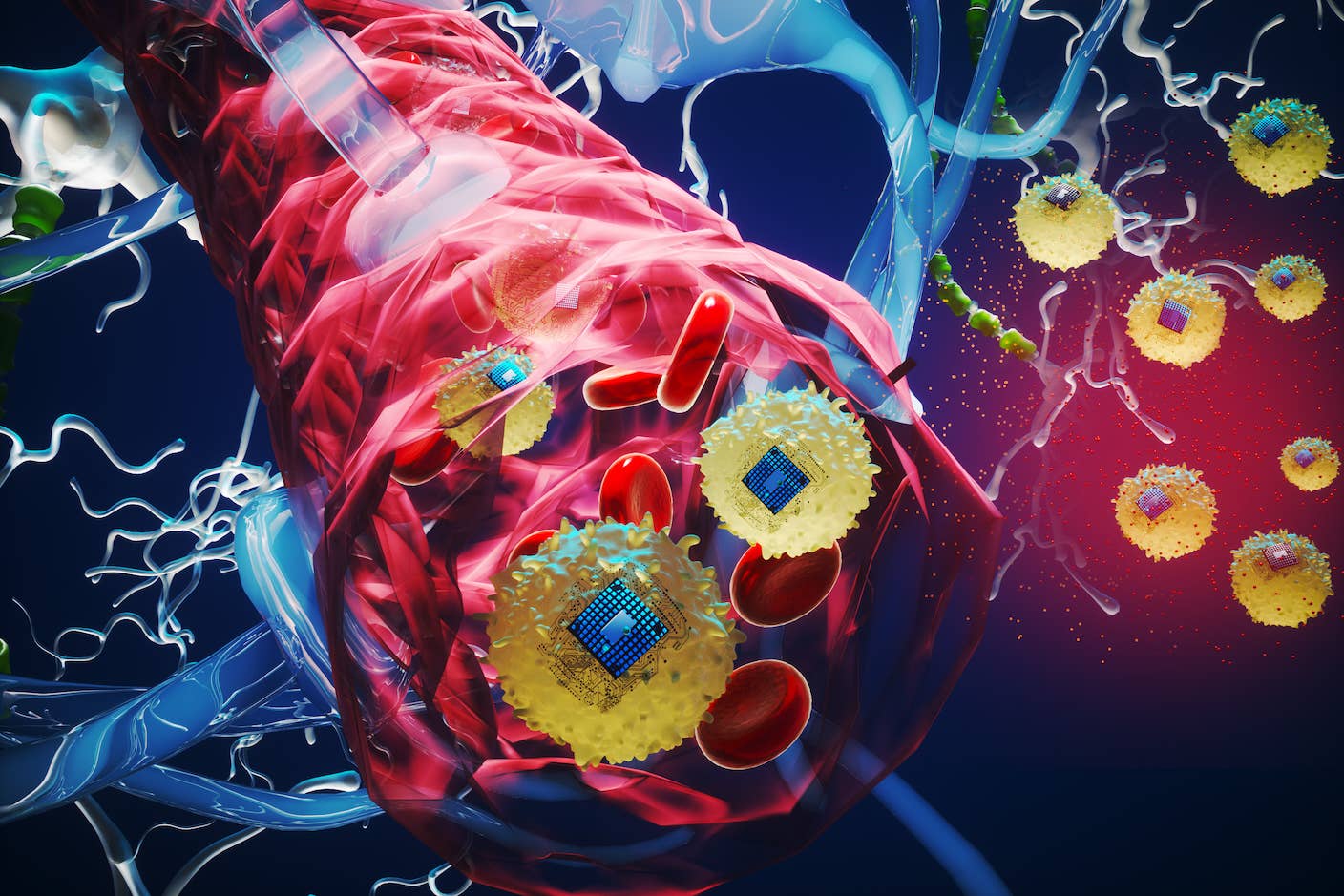

Image Credit: spainter_vfx / Shutterstock.com
