DeepMind’s New Research on Linking Memories, and How It Applies to AI

There’s a cognitive quirk humans have that seems deceptively elementary. For example: every morning, you see a man in his 30s walking a boisterous collie. Then one day, a white-haired lady who bears a striking resemblance to him comes down the street with the same dog.

Subconsciously we immediately make a series of deductions: the man and woman might be from the same household. The lady may be the man’s mother, or some other close relative. Perhaps she’s taking over his role because he’s sick, or busy. We weave an intricate story of those strangers, pulling material from our memories to make it coherent.

This ability—to link one past memory with another—is nothing but pure genius, and scientists don’t yet understand how we do it. It’s not just an academic curiosity: our ability to integrate multiple memories is the first cognitive step that lets us gain new insight into experiences, and generalize patterns across those encounters. Without this step, we’d forever live in a disjointed world.

It’s a cognitive superpower. And DeepMind wants it to power AI.

"While there are many domains where AI is superior, humans still have an advantage when tasks depend on the flexible use of episodic memory," the kind of memory that lets you remember your life events or where you parked your car, said Martin Chadwick, a researcher at DeepMind.

"If we can understand the mechanisms that allow people to do this, the hope is that we can replicate them within our AI systems, providing them with a much greater capacity for rapidly solving novel problems," said Chadwick. His sentiment echoes DeepMind CEO Demis Hassabis’s prioritization of neuroscience as a fundamental driver of machine learning.

This week, in collaboration with an international team of neuroscientists from the UK and Germany, DeepMind forayed into the messy world of biological wetware. Using state-of-the-art fMRI, which measures blood flow to different brain regions, the team teased out a neural circuit responsible for linking together memories in humans.

Then they distilled an algorithm from the biological data: a first, but large, step toward infusing AI with the same cognitive prowess.

“This work elegantly…sets a standard for future work,” said Dr. Jozien Goense at the University of Glasgow, who was not involved in the study.

A Memory Conundrum

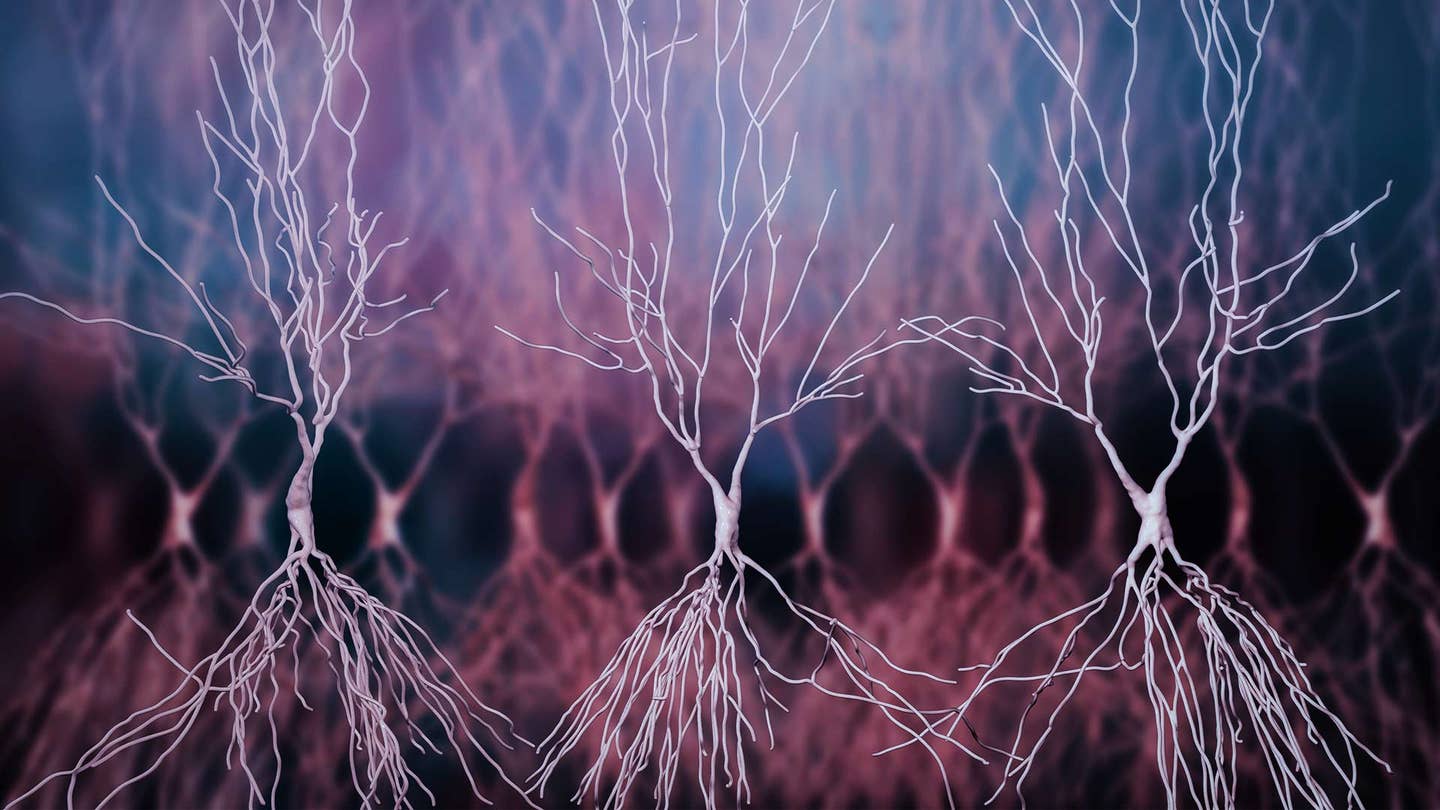

Before we get into the meat of the study, we need to (re)acquaint ourselves with the hippocampus, a seahorse-shaped structure buried deep inside the brain.

Way back in the 1950s, a patient named Henry Molaison (better known as H.M.) blew the neuroscience of memory wide open when he went under the knife to treat his epilepsy. Surgeons removed much of his hippocampus and surrounding regions, and Henry developed amnesia, specifically the type that prevented him from making new memories. It was an important clue that the hippocampus plays a critical role in episodic memories: like RAM, it rapidly writes down the events of our lives, and eventually transfers those memories to the outer layer of the brain, the cortex, for long-term storage.

Subsequent analyses slowly unveiled that the hippocampus does much more in the memory domain. For one, it’s one of the few regions in the brain that pump out new neurons, and these baby neurons seem to help keep similar memory episodes separate. This ability, called “pattern separation,” lets you remember two visits to your favorite coffee shop as distinct memories. Without pattern separation, these episodes would blend together into a jumble outside of time.

That’s not all. By carefully tracing the various neural circuits within the hippocampus, scientists began noticing that it also seemed to integrate memories into experiences in a useful way—an ability that’s fundamentally at odds with its function of keeping memories separate.

Most of these studies were done in animals, which can have electrodes directly implanted into various neural circuits for easy eavesdropping. But animals can’t explain what they’re thinking, and the duality of hippocampal powers remained a heatedly-debated theory.

Looping Far and Wide

DeepMind and collaborators waded into this neuroscience problem with a new toy: an ultra-powerful fMRI scanner.

The “f” in fMRI stands for “functional,” and it’s been a popular tool to study brain functions for decades. The machine measures blood flow to a brain region, which loosely (but controversially) correlates with how much activity is going on at any moment. Sophisticated algorithms can then tease out activated brain regions and color them in brilliant pseudo-technicolor based on their activity level. In other words, they “light up.”

But traditional fMRIs have an Achilles’ heel: they have terrible resolution, meaning that they can only look at large chunks of brain tissue—much larger than single neural circuits—at a time.

In this study, published in the prestigious journal Neuron, DeepMind took advantage of new developments in fMRI hardware and developed analysis algorithms that allowed them to look at hippocampal circuits in fine detail during a memory association task.

The team showed a volunteer group of 26 people pairs of photographs. One depicted a realistic-looking face, whereas the other showed a scene (for example, a highway through dense forests after the rain) or an object (a globe).

The pairs weren’t unique: each scene or object was paired with two faces. In this way, the experiment established an indirect link between sets of two unique faces.

In the test phase, the team asked the volunteers to pick out the faces that were linked through an object or a scene while scanning their brain activity. This essentially gave the team insight into what was going on with the hippocampus while the volunteers mentally established indirect links from memory.
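The pairing scheme can be sketched in a few lines of Python. This is only an illustration of the logic, not the study's stimulus code: the face and item labels and the `linked_faces` helper are hypothetical names invented here.

```python
from collections import defaultdict

# Hypothetical study pairs: each scene or object appears with two different faces.
PAIRS = [
    ("face_A", "globe"),
    ("face_B", "globe"),
    ("face_C", "forest_highway"),
    ("face_D", "forest_highway"),
]


def linked_faces(pairs):
    """Map each face to the faces it is indirectly linked to via a shared item."""
    faces_by_item = defaultdict(list)
    for face, item in pairs:
        faces_by_item[item].append(face)

    links = {}
    for faces in faces_by_item.values():
        for face in faces:
            # A face is linked to every other face that shared its item.
            links[face] = [other for other in faces if other != face]
    return links


LINKS = linked_faces(PAIRS)
```

Note that no pair in `PAIRS` contains two faces. The link between `face_A` and `face_B` exists only through the shared globe, which is what volunteers had to work out from memory during the test phase.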

Remarkably, the hippocampus reached outward, toward a multi-layered cortical area called the entorhinal cortex (EC). Rather than processing all the information in house, the hippocampus uses its extensive connections with the EC to “circulate” distinct memories. Signals entering the EC differed from those it sent back to the hippocampus, forming a “big loop” of neural connections that seems to underlie our ability to integrate memories. The team dubbed this theory “big-loop recurrence.”

Training a sophisticated classifier on the biological data, the team found that the hippocampus’s connections with the input and output layers of the EC differed significantly. In other words, something magical seemed to be going on throughout the layers of the EC that helps integrate memories.

What’s more, the strength of EC input correlated with how well the volunteer did on the memory association task. We know that the hippocampus keeps memories separate within its structure, explained Goense. By outsourcing memory integration to a related but distinct region, the hippocampus could support both opposing tasks.

"Our data showed that when the hippocampus retrieves a memory, it doesn't just pass it to the rest of the brain," said study author Dharshan Kumaran at DeepMind. "Instead, it recirculates the activation back into the hippocampus, triggering the retrieval of other related memories."

"The results could be thought of as the best of both worlds: you preserve the ability to remember individual experiences by keeping them separate, while at the same time allowing related memories to be combined on the fly at the point of retrieval," he added. "This ability is useful for understanding how the different parts of a story fit together, for example—something not possible if you just retrieve a single memory."
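The recirculation idea Kumaran describes can be illustrated with a toy sketch. It assumes memories are stored as separate pairs and that each retrieval's output is fed back in as a new cue. The `MEMORIES` store and the `retrieve` and `big_loop_retrieve` functions are hypothetical names invented here, and this is not the algorithm from the paper.

```python
# Hypothetical episodic store: each memory is kept as its own separate pair.
MEMORIES = [
    ("face_A", "globe"),
    ("face_B", "globe"),
]


def retrieve(cue, memories):
    """One retrieval pass: return everything stored alongside the cue."""
    found = set()
    for left, right in memories:
        if cue == left:
            found.add(right)
        elif cue == right:
            found.add(left)
    return found


def big_loop_retrieve(cue, memories, loops=2):
    """Feed each pass's output back in as new cues for a fixed number of loops."""
    active = {cue}
    recalled = set()
    for _ in range(loops):
        next_active = set()
        for item in active:
            next_active |= retrieve(item, memories)
        # Drop the original cue and anything already recalled.
        next_active -= recalled | {cue}
        recalled |= next_active
        active = next_active
    return recalled
```

With `loops=1`, cueing `face_A` recalls only the globe it was directly paired with. With `loops=2`, the globe is recirculated as a cue and `face_B` is recalled as well, even though the two faces were never stored together.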

From Brain to AI

The study may seem a radical departure from DeepMind’s other ventures, but it’s in line with the company’s deep-seated belief that neuroscience can inspire more sophisticated AI.

“The algorithm realized through big-loop recurrence has striking parallels with cutting-edge machine learning neural network architectures that utilize external memory to solve problems of real world relevance,” the authors wrote in their conclusion.

Going forward, the team said, they need to figure out how much big-loop recurrence contributes to the type of information integration that helps living beings adapt to an ever-changing environment.

And maybe one day, the fruits will also apply to non-living intelligence.

Image Credit: Kateryna Kon / Shutterstock.com

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.
