Facebook Wants to Make Smart Robots to Explore Every Nook and Cranny of Your Home

“Hey Alexa, turn on the kitchen light.”

“Hey Alexa, play soothing music at volume three.”

“Hey Alexa, tell me where to find my keys.”

You can ask an Alexa or Google home assistant questions about facts, news, or the weather, and give commands to whatever you’ve synced it to (lights, alarms, TVs, etc.). But helping you find things is a capability that hasn’t quite come to pass yet; smart home assistants are essentially very rudimentary, auditory-only “brains” with limited functions.

But what if home assistants had a “body” too? How much more would they be able to do for us? (And what if the answer is “more than we want”?)

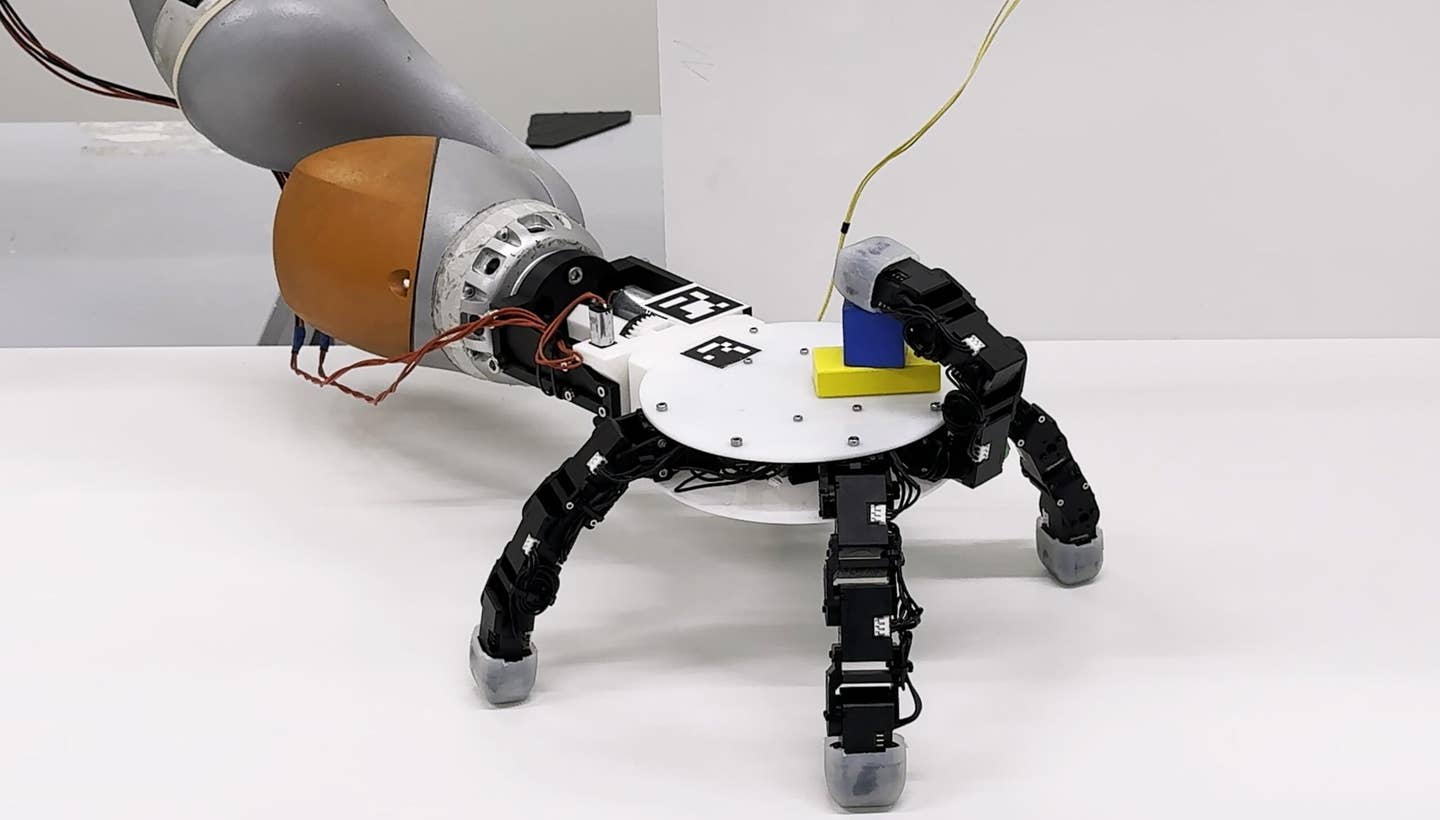

If Facebook’s AI research succeeds, it may not be long before home assistants take on a whole new range of capabilities. Last week the company announced new work focused on advancing what it calls “embodied AI”: basically, a smart robot that will be able to move around your house to help you remember things, find things, and maybe even do things.

Robots That Hear, Home Assistants That See

In Facebook’s blog post about audio-visual navigation for embodied AI, the authors point out that most of today’s robots are “deaf”; they move through spaces based purely on visual perception. The company’s new research aims to train AI using both visual and audio data, letting smart robots detect and follow objects that make noise as well as use sounds to understand a physical space.

The company is using a dataset called SoundSpaces to train AI. SoundSpaces simulates sounds you might hear in an indoor environment, like doors opening and closing, water running, a TV show playing, or a phone ringing. What’s more, the nature of these sounds varies based on where they’re coming from; the center of a room versus a corner of it, or a large, open room versus a small, enclosed one. SoundSpaces incorporates geometric details of spaces so that its AI can learn to navigate based on audio.

This means, the paper explains, that an AI “can now act upon ‘go find the ringing phone’ rather than ‘go to the phone that is 25 feet southwest of your current position.’ It can discover the goal position on its own using multimodal sensing.”

The company also introduced SemanticMapnet, a mapping tool that creates pixel-level maps of indoor spaces to help robots understand and navigate them. As a person, you can easily answer questions about your home or office space like “How many pieces of furniture are in the living room?” or “Which wall of the kitchen is the stove against?” The goal with SemanticMapnet is for smart robots to be able to do the same, and to help us find and remember things in the process.

These tools expand on Facebook’s Replica dataset and Habitat simulator platform, released in mid-2019.

The company envisions its new tools eventually being integrated into augmented reality glasses, which would take in all kinds of details about the wearer’s environment and be able to remember those details and recall them on demand. Facebook's chief technology officer, Mike Schroepfer, told CNN Business, “If you can build these systems, they can help you remember the important parts of your life.”

Smart Assistants, Dumb People?

But before embracing these tools, we should consider their deeper implications. Don’t we want to be able to remember the important parts of our lives without help from digital assistants?

Take GPS. Before it came along, we were perfectly capable of getting from point A to point B using paper maps, written instructions, and good old-fashioned brain power (and maybe occasionally stopping to ask another human for directions). But now we blindly rely on our phones to guide us through every block of our journeys. Ever notice how much harder it seems to learn your way around a new place or remember the way to a new part of town than it used to?

The seemingly all-encompassing wisdom of digital tools can lead us to trust them unquestioningly, sometimes to our detriment (both in indirect ways—using our brains less—and direct ways, like driving a car into the ocean or nearly off a cliff because the GPS said to).

It seems like the more of our thinking we outsource to machines, the less we’re able to think on our own. Is that a trend we’d be wise to continue? Do we really need or want smart robots to tell us where our keys are or whether we forgot to add the salt while we’re cooking?

While allowing AI to take on more of our cognitive tasks and functions—to become our memory, which is essentially what Facebook’s new tech is building towards—will make our lives easier in some ways, it will also come with hidden costs or unintended consequences, as most technologies do. We must not only be aware of these consequences, but carefully weigh them against a technology’s benefits before integrating it into our lives—and our homes.

Image Credit: snake3d / Shutterstock.com

Vanessa has been writing about science and technology for eight years and was senior editor at SingularityHub. She's interested in biotechnology and genetic engineering, the nitty-gritty of the renewable energy transition, the roles technology and science play in geopolitics and international development, and countless other topics.
