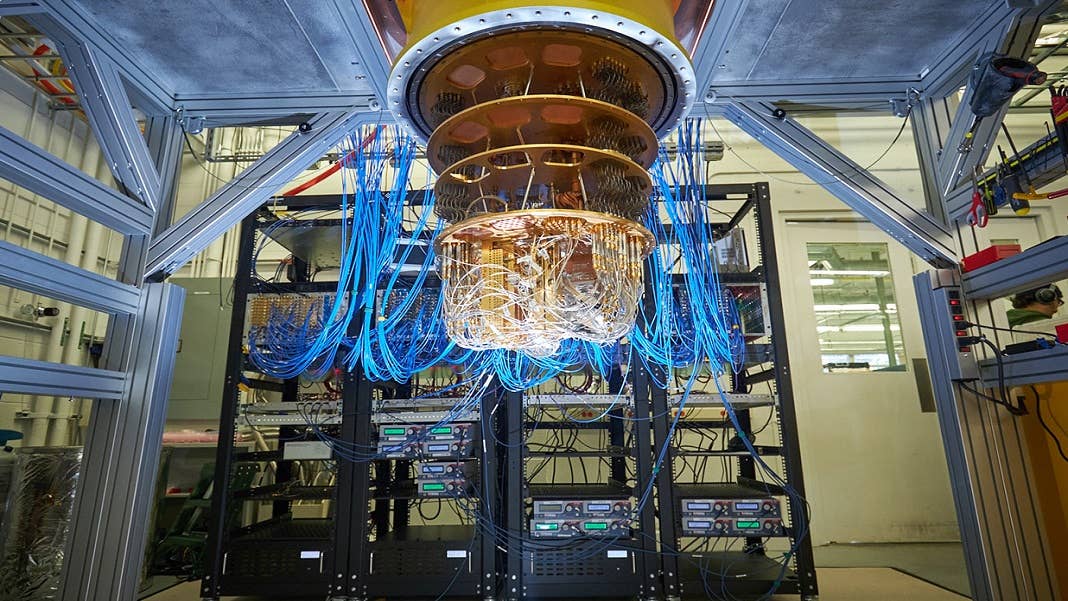

Google Gets One Step Closer to Error-Corrected Quantum Computing

One of the biggest barriers standing in the way of useful quantum computers is how error-prone today's devices are. Now, Google has experimentally demonstrated an approach to the problem that should scale up to much larger devices.

The power of quantum computers comes from their ability to manipulate exotic quantum states, but these states are very fragile and easily perturbed by sources of noise, like heat or electromagnetic fields. This can introduce errors into calculations, and it’s widely accepted that error correction will need to be built into these devices before they’re able to carry out any serious work.

The problem is that the most obvious way of checking for errors is out of bounds for a quantum computer. Unlike normal binary bits, the qubits at the heart of a quantum computer can exist in a state known as superposition, where their value can be 0 and 1 simultaneously. Any attempt to measure the qubit causes this state to collapse to a 0 or 1, derailing whatever calculation it was involved in.

To get around this problem, scientists have turned to another quantum phenomenon called entanglement, which intrinsically links the state of two or more qubits. This can be used to lump together many qubits to create one “logical qubit” that encodes a single superposition. In theory, this makes it possible to detect and correct errors in individual physical qubits without the overall value of the logical qubit becoming corrupted.

To detect these errors, the so-called “data qubits” that encode the superposition are also entangled with others known as “measure qubits.” By measuring these qubits it’s possible to work out if the adjacent data qubits have experienced an error, what kind of error it is, and in theory correct it, all without actually reading their state and disturbing the logical qubit’s superposition.
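The measure-qubit idea can be illustrated with a purely classical analogy. The sketch below is a minimal illustration, not Google's implementation. It assumes only bit flips occur and uses ordinary bits in place of qubits. It also computes each "measure qubit" directly as the parity (XOR) of its two neighboring data bits, whereas the real device obtains that parity through entangling gates and then measures the measure qubit. The function names `measure_syndrome` and `locate_single_flip` are hypothetical.

```python
from typing import List, Optional


def measure_syndrome(data: List[int]) -> List[int]:
    """Parity of each neighboring pair of data bits.

    Each entry stands in for one measure qubit sitting between two data
    qubits: 0 means the pair agrees, 1 means they disagree.
    """
    return [data[i] ^ data[i + 1] for i in range(len(data) - 1)]


def locate_single_flip(syndrome: List[int]) -> Optional[int]:
    """Index of the flipped data bit, assuming at most one flip and >= 3 data bits."""
    flagged = [i for i, s in enumerate(syndrome) if s]
    if not flagged:
        return None                      # no check fired: no error detected
    if len(flagged) == 2 and flagged[1] == flagged[0] + 1:
        return flagged[1]                # interior bit, flagged by the checks on both sides
    if flagged == [0]:
        return 0                         # first data bit has only one neighboring check
    if flagged == [len(syndrome) - 1]:
        return len(syndrome)             # last data bit has only one neighboring check
    raise ValueError("syndrome is not consistent with a single flip")


logical_zero = [0] * 5                   # 5 data bits encoding a logical 0
noisy = logical_zero.copy()
noisy[2] ^= 1                            # a single bit flip on data bit 2
where = locate_single_flip(measure_syndrome(noisy))
noisy[where] ^= 1                        # undo the flip
assert noisy == logical_zero

# The checks look identical for logical 0 and logical 1, so they reveal
# errors without revealing the encoded value.
assert measure_syndrome([1] * 5) == measure_syndrome([0] * 5)
```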

While these ideas are not new, implementing them has so far proved elusive, and there were still some question marks about how effective the scheme could be. But now Google has demonstrated the approach in its 52-qubit Sycamore quantum processor and shown it should scale up to help build the fault-tolerant quantum computers of the future.

Creating a logical qubit relies on what is known as a stabilizer code, a scheme that specifies the operations needed to link the physical qubits together and periodically check them for errors. In their paper in Nature, the Google researchers describe how they trialed two different codes: one that arranged data qubits and measure qubits in a long, alternating chain, and another that arranged the two kinds in a 2D lattice.

The team started implementing the linear code with 5 physical qubits and then gradually scaled it up to 21. Crucially, for the first time they demonstrated that adding more qubits suppressed errors exponentially, which suggests the length of time a logical qubit can be maintained should increase significantly as the number of available qubits grows.
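Why adding qubits can suppress errors exponentially shows up even in a simple idealized model. That model is an assumption here, not the paper's analysis: each data qubit flips independently with probability p, and the chain is decoded by majority vote. An alternating chain of n physical qubits holds d = (n + 1)/2 data qubits, so the 5- to 21-qubit chains correspond to d = 3 to 11. The decoded value is wrong only if more than half the data qubits flip, and that probability shrinks roughly like p raised to the power (d + 1)/2. The function name `logical_error_rate` is hypothetical.

```python
from math import comb


def logical_error_rate(d: int, p: float) -> float:
    """Probability that a majority vote over d data bits (d odd) is wrong,
    when each bit flips independently with probability p."""
    # Sum over every way that a majority (k >= (d + 1) / 2) of the bits can flip.
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range((d + 1) // 2, d + 1))


for n_qubits in range(5, 22, 4):        # chains of 5, 9, 13, 17 and 21 physical qubits
    d = (n_qubits + 1) // 2             # d data qubits plus (d - 1) measure qubits
    print(n_qubits, d, f"{logical_error_rate(d, 0.01):.2e}")
```

At p = 0.01, each step in the loop cuts the logical error rate by more than an order of magnitude. The real experiment also had to contend with measurement errors, repeated rounds of checks and correlated noise, so these numbers are illustrative only.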

However, there’s still a long way to go. For a start, they only detected errors and didn’t actually test out the process for correcting wayward qubits. And while the linear code can detect the two main types of error—bit flips and phase flips—it can’t do both at once.
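The limitation of the linear code can be checked with a tiny state-vector calculation. The sketch assumes a 3-qubit bit-flip repetition code (rather than the 5- to 21-qubit chains in the experiment) and stores the state as a dictionary of basis-state amplitudes. It evaluates the parity checks Z0Z1 and Z1Z2 directly as expectation values instead of via measure qubits. All function names are hypothetical. A bit flip changes the checks, while a phase flip, which only changes the sign of an amplitude, leaves them untouched.

```python
import math
from typing import Dict, Tuple

State = Dict[str, complex]  # maps basis strings such as "010" to amplitudes


def apply_x(state: State, q: int) -> State:
    """Bit flip on qubit q: flip that bit in every basis string."""
    out: State = {}
    for bits, amp in state.items():
        flipped = bits[:q] + ("1" if bits[q] == "0" else "0") + bits[q + 1:]
        out[flipped] = out.get(flipped, 0) + amp
    return out


def apply_z(state: State, q: int) -> State:
    """Phase flip on qubit q: negate amplitudes where that qubit is 1."""
    return {bits: (-amp if bits[q] == "1" else amp) for bits, amp in state.items()}


def zz_expectation(state: State, a: int, b: int) -> float:
    """<Z_a Z_b>: weights +1 where qubits a and b agree and -1 where they differ."""
    return sum((1 if bits[a] == bits[b] else -1) * abs(amp) ** 2 for bits, amp in state.items())


def syndrome(state: State) -> Tuple[int, int]:
    return (round(zz_expectation(state, 0, 1)), round(zz_expectation(state, 1, 2)))


amp = 1 / math.sqrt(2)
encoded: State = {"000": amp, "111": amp}  # logical superposition in the bit-flip code

print(syndrome(encoded))               # (1, 1): no error
print(syndrome(apply_x(encoded, 0)))   # (-1, 1): bit flip on qubit 0 is detected
print(syndrome(apply_z(encoded, 0)))   # (1, 1): phase flip on qubit 0 goes unnoticed
```

Detecting phase flips instead requires checks in a different basis, which is why the linear code can be set up to catch one kind of error or the other, but not both at once.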

The second code they tried out is capable of detecting both kinds of error, but it’s harder to map these detections to corrections. This setup is also more susceptible to errors itself, and the performance of the physical qubits will have to improve before this approach is able to demonstrate error suppression.

Still, this lattice-based approach was a small-scale trial of the “surface code” that Google believes will ultimately solve error correction in future large-scale quantum computers. And while it’s not there yet, the researchers say it’s within touching distance of the threshold where error suppression becomes possible.
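The idea of a threshold is often summarized with a rule of thumb from the error-correction literature. It is stated here as an assumption rather than a result of this paper: for a code of distance d built from physical qubits with error rate p, the logical error rate behaves roughly as follows, where p_th is the threshold and A is a fitted constant.

```latex
\varepsilon_L \approx A \left( \frac{p}{p_{\mathrm{th}}} \right)^{(d+1)/2}
```

Below threshold (p < p_th), each increase of d by 2 multiplies ε_L by p/p_th < 1, which is the kind of exponential suppression seen in the linear code. Above threshold the same ratio exceeds 1, so enlarging the code makes things worse. This is why the physical qubits must improve before the lattice code can show suppression.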

They conclude by pointing out that practical quantum computing will probably require 1,000 physical qubits for each logical qubit, so the hardware still has a long way to go. But the research makes it clear that the underlying principles of error correction are sound and will be able to support much larger quantum computers in the future.
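A simple qubit count gives a rough sense of where a figure like 1,000 can come from. The sketch assumes the rotated surface-code layout, in which a distance-d logical qubit uses d × d data qubits and d × d − 1 measure qubits. The function name is hypothetical, and the researchers' estimate also depends on error rates and target reliability that this arithmetic ignores.

```python
def surface_code_qubits(d: int) -> int:
    """Physical qubits for one distance-d logical qubit in a rotated surface code
    (assumed layout): d*d data qubits plus d*d - 1 measure qubits."""
    return d * d + (d * d - 1)


for d in (3, 5, 11, 17, 21, 23):
    print(d, surface_code_qubits(d))
```

Under this assumption, roughly 1,000 physical qubits per logical qubit corresponds to a code distance in the low 20s: 881 qubits at d = 21 and 1,057 at d = 23.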

Image Credit: Rocco Ceselin/Google
