Quantum Computing in Silicon Breaks a Crucial Threshold for the First Time

Quantum computers made from the same raw materials as standard computer chips hold obvious promise, but so far they've struggled with high error rates. That seems set to change after new research showed silicon qubits are now accurate enough to run a popular error-correcting code.

The quantum computers that garner all the headlines today tend to be made using superconducting qubits, such as those from Google and IBM, or trapped ions, such as those from IonQ and Honeywell. But despite their impressive feats, they take up entire rooms and have to be painstakingly handcrafted by some of the world’s brightest minds.

That’s why others are keen to piggyback on the miniaturization and fabrication breakthroughs we’ve made with conventional computer chips by building quantum processors out of silicon. Research has been going on in this area for years, and it’s unsurprisingly the route that Intel is taking in the quantum race. But despite progress, silicon qubits have been plagued by high error rates that have limited their usefulness.

The delicate nature of quantum states means that errors are a problem for all of these technologies, and error-correction schemes will be required for any of them to reach significant scale. But these schemes will only work if the error rates can be kept sufficiently low; essentially, you need to be able to correct errors faster than they appear.

The most promising family of error-correction schemes today is known as "surface codes," and they require operations on, or between, qubits to run with a fidelity above 99 percent. That has long eluded silicon qubits, but in the latest issue of Nature three separate groups report breaking this crucial threshold.
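To make the threshold idea concrete, here is a rough sketch of a commonly quoted rule of thumb for surface codes, in which the logical error rate scales roughly as a * (p / p_th) ** ((d + 1) / 2), where p is the physical error rate and d is the code distance. The 1 percent threshold matches the 99 percent fidelity figure above; the prefactor `a = 0.1` and the function name are illustrative assumptions, not values from the three papers.

```python
def logical_error_rate(p, d, p_th=0.01, a=0.1):
    """Heuristic surface-code scaling: p_L ~ a * (p / p_th) ** ((d + 1) / 2).

    p    -- physical error rate per operation
    d    -- code distance (positive odd integer)
    p_th -- threshold error rate (assumed 1 percent, i.e. 99 percent fidelity)
    a    -- illustrative prefactor (an assumption, not a measured value)
    """
    if d < 1 or d % 2 == 0:
        raise ValueError("code distance d should be a positive odd integer")
    return a * (p / p_th) ** ((d + 1) / 2)


# Compare one error rate below the threshold with one above it.
distances = (3, 5, 7)
for p in (0.005, 0.02):
    rates = [logical_error_rate(p, d) for d in distances]
    summary = ", ".join(f"d={d}: {r:.2e}" for d, r in zip(distances, rates))
    print(f"p={p}: {summary}")
```

Under these assumptions, a physical error rate below the threshold means that growing the code shrinks the logical error rate, while a rate above it makes a bigger code perform worse. That is why crossing 99 percent matters so much.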

The first two papers, from researchers at RIKEN in Japan and at QuTech, a collaboration between Delft University of Technology and the Netherlands Organization for Applied Scientific Research, use quantum dots as qubits. These are tiny traps made out of semiconductors that each house a single electron. Information can be encoded into the qubits by manipulating the electrons’ spin, a fundamental property of elementary particles.

Both groups’ breakthroughs came primarily down to careful engineering of the qubits and control systems. But the QuTech group also used a diagnostic tool developed by researchers at Sandia National Laboratories to debug and fine-tune their system, while the RIKEN team discovered that upping the speed of operations boosted fidelity.

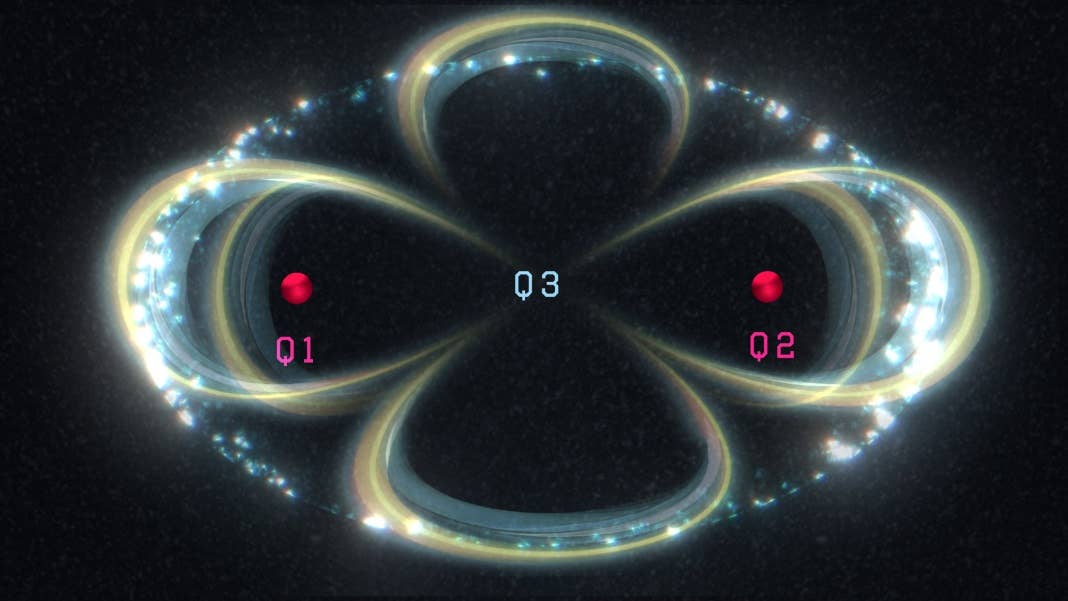

A third group from the University of New South Wales took a slightly different approach, using phosphorus atoms embedded into a silicon lattice as their qubits. These atoms can hold their quantum state for extremely long times compared to most other qubits, but the tradeoff is that it’s hard to get them to interact. The group’s solution was to entangle two of these phosphorus atoms with an electron, which enables them to talk to each other.

All three groups were able to achieve fidelities above 99 percent for both single-qubit and two-qubit operations, which crosses the error-correction threshold. They even managed to carry out some basic proof-of-principle calculations using their systems. Nonetheless, they are still a long way from making a fault-tolerant quantum processor out of silicon.

Achieving high-fidelity qubit operations is only one of the requirements for effective error correction. The other is having a large number of spare qubits that can be dedicated to this task, while the remaining ones focus on whatever problem the processor has been set.
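For a sense of how many spare qubits that might mean, here is a back-of-the-envelope sketch. It assumes the widely used "rotated" surface-code layout, in which a single logical qubit of code distance d uses d * d data qubits plus d * d - 1 helper qubits for error checks. The choice of layout and the function name are assumptions for illustration; the papers discussed here do not specify one.

```python
def surface_code_qubits(d):
    """Physical qubits for one logical qubit in a rotated surface code.

    Returns (data_qubits, check_qubits, total) for code distance d.
    The rotated layout is an assumption made for this illustration.
    """
    if d < 1 or d % 2 == 0:
        raise ValueError("code distance d should be a positive odd integer")
    data_qubits = d * d          # hold the encoded quantum information
    check_qubits = d * d - 1     # spare qubits measured to detect errors
    return data_qubits, check_qubits, data_qubits + check_qubits


for d in (3, 5, 7):
    data, checks, total = surface_code_qubits(d)
    print(f"d={d}: {data} data + {checks} check = {total} physical qubits")
```

Even the smallest useful code in this layout needs well over a dozen physical qubits per logical qubit, far more than the handful demonstrated in these silicon experiments.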

As an accompanying analysis in Nature notes, adding more qubits to these systems is certain to complicate things, and maintaining the same fidelities in larger systems will be tough. Finding ways to connect qubits across large systems will also be a challenge.

However, the promise of being able to build compact quantum computers using the same tried-and-true technology as existing computers suggests these are problems worth trying to solve.

Image Credit: UNSW/Tony Melov
