MIT’s New Robot Dog Learned to Walk and Climb in a Simulation Whipped Up by Generative AI

A big challenge when training AI models to control robots is gathering enough realistic data. Now, researchers at MIT have shown they can train a robot dog using 100 percent synthetic data.

Traditionally, robots have been hand-coded to perform particular tasks, but this approach results in brittle systems that struggle to cope with the uncertainty of the real world. Machine learning approaches that train robots on real-world examples promise to create more flexible machines, but gathering enough training data is a significant challenge.

One potential workaround is to train robots using computer simulations of the real world, which makes it far simpler to set up novel tasks or environments for them. But this approach is bedeviled by the “sim-to-real gap”—these virtual environments are still poor replicas of the real world and skills learned inside them often don’t translate.

Now, MIT CSAIL researchers have found a way to combine simulations and generative AI to enable a robot, trained on zero real-world data, to tackle a host of challenging locomotion tasks in the physical world.

“One of the main challenges in sim-to-real transfer for robotics is achieving visual realism in simulated environments,” Shuran Song from Stanford University, who wasn’t involved in the research, said in a press release from MIT.

“The LucidSim framework provides an elegant solution by using generative models to create diverse, highly realistic visual data for any simulation. This work could significantly accelerate the deployment of robots trained in virtual environments to real-world tasks.”

Leading simulators used to train robots today can realistically reproduce the kind of physics robots are likely to encounter. But they are not so good at recreating the diverse environments, textures, and lighting conditions found in the real world. This means robots relying on visual perception often struggle in less controlled environments.

To get around this, the MIT researchers used text-to-image generators to create realistic scenes and combined these with a popular simulator called MuJoCo to map geometric and physics data onto the images. To increase the diversity of images, the team also used ChatGPT to create thousands of prompts for the image generator covering a huge range of environments.
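
The article says the team had ChatGPT write the prompts. The sketch below swaps in a much simpler technique, a fixed template expanded over a few attribute lists, to show how quickly combining options multiplies into many distinct scene descriptions. The attribute lists and prompt wording are hypothetical.

```python
from itertools import product

# Hypothetical attribute lists. The article only says ChatGPT wrote thousands of prompts.
TERRAINS = ["stone staircase", "wooden staircase", "grassy slope", "concrete ramp", "stack of cardboard boxes"]
SETTINGS = ["city park", "hotel lobby", "quiet suburb", "parking garage"]
LIGHTING = ["bright noon sun", "overcast sky", "dim indoor lighting", "sunset glow"]
WEATHER = ["clear weather", "light rain", "light snow"]

def build_prompts():
    """Expand every combination of attributes into one text-to-image prompt."""
    return [
        f"A {terrain} in a {setting}, {light}, {weather}, photo from a robot dog's viewpoint"
        for terrain, setting, light, weather in product(TERRAINS, SETTINGS, LIGHTING, WEATHER)
    ]

prompts = build_prompts()
print(len(prompts))  # 5 * 4 * 4 * 3 = 240
print(prompts[0])
```

A language model can vary phrasing and invent scene details that a fixed template cannot, which is presumably part of why an LLM was used. The template simply shows the combinatorial idea.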

After generating these realistic environmental images, the researchers converted them into short videos from a robot’s perspective using another system they developed called Dreams in Motion. This computes how each pixel in the image would shift as the robot moves through an environment, creating multiple frames from a single image.
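
As a rough illustration of what "computing how each pixel would shift" can mean, here is a minimal sketch of depth-based reprojection with a pinhole camera. It assumes a per-pixel depth map (for example, taken from the simulator's geometry), a camera that only translates, and nearest-pixel forward warping with a depth buffer. The function names and parameters are hypothetical, and this is not the Dreams in Motion implementation.

```python
def reproject_pixel(u, v, depth, fx, fy, cx, cy, t):
    """Return (u', v', z') for pixel (u, v) after the camera translates by t = (tx, ty, tz)."""
    # Back-project the pixel and its depth into a 3D point in the original camera frame.
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    z = depth
    # Express the point in the moved camera's frame (pure translation, no rotation assumed).
    x, y, z = x - t[0], y - t[1], z - t[2]
    if z <= 0:
        return None  # the point is now behind the camera
    # Project back onto the image plane.
    return fx * x / z + cx, fy * y / z + cy, z

def warp_frame(image, depth, fx, fy, cx, cy, t):
    """Forward-warp one image to a new camera position; unfilled pixels stay None."""
    h, w = len(image), len(image[0])
    out = [[None] * w for _ in range(h)]
    zbuf = [[float("inf")] * w for _ in range(h)]
    for v in range(h):
        for u in range(w):
            result = reproject_pixel(u, v, depth[v][u], fx, fy, cx, cy, t)
            if result is None:
                continue
            u2, v2, z2 = result
            iu, iv = round(u2), round(v2)
            # Keep the nearest surface when several source pixels land on the same target.
            if 0 <= iu < w and 0 <= iv < h and z2 < zbuf[iv][iu]:
                zbuf[iv][iu] = z2
                out[iv][iu] = image[v][u]
    return out

def frames_from_image(image, depth, fx, fy, cx, cy, step, n_frames):
    """Make a short clip by moving the camera forward by `step` units per frame."""
    return [warp_frame(image, depth, fx, fy, cx, cy, (0.0, 0.0, step * k)) for k in range(n_frames)]

img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
depth = [[1.0] * 3 for _ in range(3)]
frames = frames_from_image(img, depth, 1.0, 1.0, 1.0, 1.0, 0.5, 2)
```

As the camera moves forward, off-center pixels slide outward and eventually leave the frame, while newly revealed regions have no source pixel. This sketch simply leaves those holes as None.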

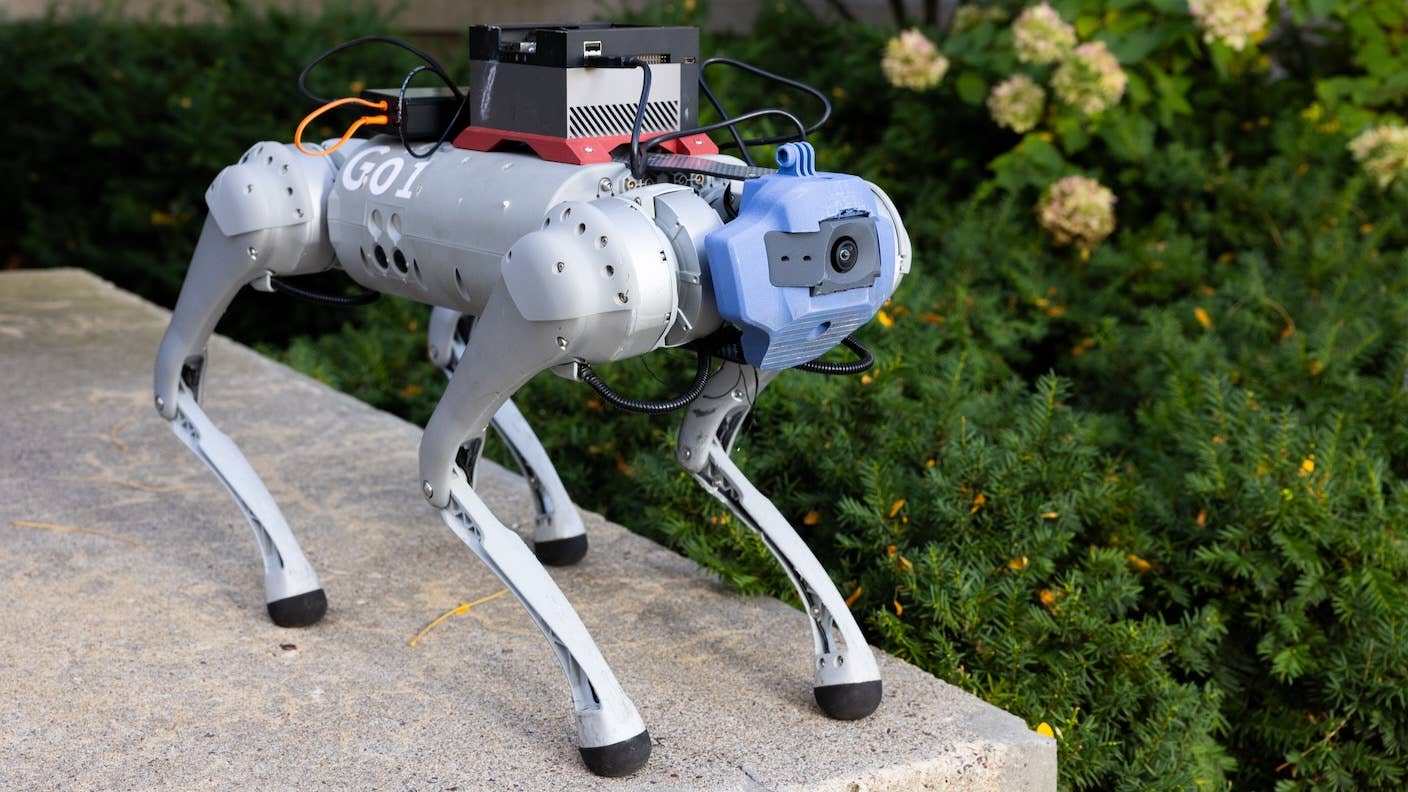

The researchers dubbed this data-generation pipeline LucidSim and used it to train an AI model to control a quadruped robot using just visual input. The robot learned a series of locomotion tasks, including going up and down stairs, climbing boxes, and chasing a soccer ball.

The training process was split into stages. First, the team trained their model on data generated by an expert AI system that had access to detailed terrain information as it attempted the same tasks. This gave the model enough understanding of the tasks to attempt them itself in simulations built from LucidSim data, which produced more training data. They then retrained the model on the combined data to create the final robotic control policy.
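
The staged recipe can be sketched as a toy imitation-learning loop. Everything here is hypothetical: a "state" is a single stair height that only the expert sees, an "observation" is a string standing in for a rendered image, and "training" just memorizes labels. The article says only that the combined data was used for retraining. Having the expert label the states the student reaches is an assumption in the style of DAgger.

```python
from typing import Callable, Dict, List, Tuple

State = int  # hypothetical privileged terrain info: the height of one stair
Obs = str    # hypothetical stand-in for a LucidSim-style rendered image

def render(state: State) -> Obs:
    # The student never sees the state itself, only this "image" of it.
    return f"image-of-step-{state}"

def expert(state: State) -> int:
    # Privileged expert: reads the exact step height and lifts its leg two units higher.
    return state + 2

def fit(data: List[Tuple[Obs, int]]) -> Callable[[Obs], int]:
    # Toy "training": memorize the expert's action for every observation seen.
    table: Dict[Obs, int] = {obs: action for obs, action in data}
    return lambda obs: table.get(obs, 0)  # unseen observation -> leg lift of 0

def label(states: List[State]) -> List[Tuple[Obs, int]]:
    # Pair what the student would see with what the expert would do.
    return [(render(s), expert(s)) for s in states]

def rollout(policy: Callable[[Obs], int], staircase: List[State]) -> List[State]:
    # Climb until the first step where the policy lifts its leg too little.
    visited = []
    for step in staircase:
        visited.append(step)
        if policy(render(step)) < expert(step):
            break
    return visited

def train_pipeline(expert_states: List[State], staircase: List[State]):
    # Stage 1: imitate the expert on states from its own rollouts.
    data = label(expert_states)
    stage1 = fit(data)
    # Stage 2: the stage-1 student acts in a new scene. Keep the states it reaches
    # and have the expert label them (assumed DAgger-style relabelling).
    data = data + label(rollout(stage1, staircase))
    # Stage 3: retrain on the combined data to get the final policy.
    return stage1, fit(data)

stage1, final = train_pipeline([0, 1, 2], [1, 2, 3, 4])
print(rollout(stage1, [1, 2, 3, 4]))  # [1, 2, 3]
print(rollout(final, [1, 2, 3, 4]))   # [1, 2, 3, 4]
```

The student's own attempts expose situations the expert's rollouts never covered. Adding those situations to the dataset is what lets the retrained policy get further than the first-stage one.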

The approach matched or outperformed the expert AI system on four out of the five tasks in real-world tests, despite relying on just visual input. And on all the tasks, it significantly outperformed a model trained using “domain randomization”—a leading simulation approach that increases data diversity by applying random colors and patterns to objects in the environment.
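
For contrast, domain randomization as described above can be sketched in a few lines. Each episode, every object keeps its geometry but gets a random color and texture pattern. The object fields, pattern names, and function name are hypothetical, and real simulators expose this through their own APIs.

```python
import random
from dataclasses import dataclass, replace
from typing import List, Tuple

# Hypothetical texture set. Real domain-randomization setups choose their own.
PATTERNS = ["solid", "checker", "stripes", "noise"]

@dataclass(frozen=True)
class SceneObject:
    name: str
    position: Tuple[float, float, float]  # geometry: left untouched by randomization
    color: Tuple[int, int, int]           # appearance: resampled every episode
    pattern: str

def randomize_appearance(objects: List[SceneObject], rng: random.Random) -> List[SceneObject]:
    """Give every object a random RGB color and texture pattern, keeping its geometry."""
    return [
        replace(
            obj,
            color=(rng.randrange(256), rng.randrange(256), rng.randrange(256)),
            pattern=rng.choice(PATTERNS),
        )
        for obj in objects
    ]

scene = [
    SceneObject("stair_1", (0.0, 0.0, 0.1), (128, 128, 128), "solid"),
    SceneObject("box", (1.0, 0.5, 0.3), (200, 150, 100), "solid"),
]
episode_scene = randomize_appearance(scene, random.Random(42))
```

The randomized colors and patterns add variety but rarely resemble real surfaces. LucidSim instead draws its variety from images produced by a generative model.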

The researchers told MIT Technology Review their next goal is to train a humanoid robot on purely synthetic data generated by LucidSim. They also hope to use the approach to improve the training of robotic arms on tasks requiring dexterity.

Given the insatiable appetite for robot training data, methods like this that can provide high-quality synthetic alternatives are likely to become increasingly important in the coming years.

Image Credit: MIT CSAIL