Minority Report Interface Is Real, Hitting Mainstream Soon (Video)

Underkoffler designed the interfaces for Minority Report and then made them real. While g-speak has been around for a few years, the real debut was at TED 2010 earlier this month.

Steven Spielberg asked John Underkoffler to help design a futuristic human-computer interface for a movie that takes place in the year 2054. Little did Spielberg know that Underkoffler would adapt that concept from Minority Report and develop it into a real system more than four decades ahead of schedule. Called g-speak, the system is a 'spatial operating environment' that allows you to point and gesture to control and move files across multiple displays. It's absolutely incredible looking. Underkoffler and his fellows at Oblong have already placed several of these systems in branches of the US government and Fortune 500 companies. The real unveiling, however, came at the 2010 TED Conference earlier in February, where g-speak and the spatial operating environment blew people's minds. Underkoffler said that he thought this system could be included with new computers in just five years. We have multiple video demonstrations of g-speak below so you can see for yourself. Be warned: you're going to want one.

Gesture technology is going to be big in 2010. We've already discussed how Hitachi, Toshiba, and Microsoft are all producing breakthrough home systems that utilize your hands (and body) as controllers. That's just the first level of body-digital interaction, however. G-speak lets you go deeper. Cut and paste across multiple displays with multiple users, highlight and edit files as they play in real time, use your hands to move through space and time. Just watch:

Carlton Sparrell, Oblong's VP of Product Development, spoke at MIT's Media Lab back in October 2009. He highlighted how g-speak will allow users at multiple interfaces, in multiple locations, to share files in an intuitive manner as everyone jointly edits them. Sparrell's talk included the following demo video of g-speak that was created in 2008.

As cool as this system looks, its specifications are even more incredible. G-speak tracks hands with 0.1 mm spatial resolution at 100 Hz! Unless you're a virtuoso musician or a brain surgeon, I doubt your motor control is anywhere near so precise. In the spatial operating environment, that resolution is put to good use: every pixel has a physical space associated with it. Every single pixel! In this way, a user can interact with anything she sees, manipulating virtual objects in the same area as they appear. Oblong even says that the system can work with 3D displays without any updates to its software. This goes a long way towards directly translating the digital world into the physical one.
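To make the "every pixel has a physical space" idea concrete, here is a minimal Python sketch of what such a mapping could look like. It is not Oblong's code or the g-speak API, whose internals aren't public as far as I can tell. The `Display` class, its fields, and the two methods are all hypothetical names invented for illustration, and the sketch assumes a flat screen whose position and orientation in the room are already known in millimeters.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec = Tuple[float, float, float]

def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

@dataclass(frozen=True)
class Display:
    """Hypothetical flat screen placed in room coordinates (millimeters)."""
    origin: Vec       # physical position of the top-left corner
    right: Vec        # unit vector along a pixel row
    down: Vec         # unit vector along a pixel column
    width_mm: float
    height_mm: float
    res_x: int
    res_y: int

    def pixel_to_world(self, px: int, py: int) -> Vec:
        """Physical location of the center of pixel (px, py)."""
        u = (px + 0.5) * self.width_mm / self.res_x
        v = (py + 0.5) * self.height_mm / self.res_y
        return add(self.origin, add(scale(self.right, u), scale(self.down, v)))

    def ray_to_pixel(self, start: Vec, direction: Vec) -> Optional[Tuple[int, int]]:
        """Pixel hit by a pointing ray, or None if the ray misses the screen."""
        normal = cross(self.right, self.down)
        denom = dot(direction, normal)
        if abs(denom) < 1e-9:          # ray runs parallel to the screen
            return None
        t = dot(sub(self.origin, start), normal) / denom
        if t <= 0:                     # screen is behind the hand
            return None
        rel = sub(add(start, scale(direction, t)), self.origin)
        u, v = dot(rel, self.right), dot(rel, self.down)
        if not (0 <= u < self.width_mm and 0 <= v < self.height_mm):
            return None
        return (int(u * self.res_x / self.width_mm),
                int(v * self.res_y / self.height_mm))

# Assumed example: a 1920 x 1080 wall display with 1 mm pixels, facing +z.
wall = Display(origin=(0.0, 0.0, 0.0), right=(1.0, 0.0, 0.0), down=(0.0, -1.0, 0.0),
               width_mm=1920.0, height_mm=1080.0, res_x=1920, res_y=1080)

print(wall.pixel_to_world(100, 200))                              # (100.5, -200.5, 0.0)
print(wall.ray_to_pixel((100.5, -200.5, 500.0), (0.0, 0.0, -1.0)))  # (100, 200)
```

The point of the two-way mapping is that a hand tracked in room coordinates and a pixel on any screen live in the same space, so pointing at a screen reduces to intersecting a ray with a plane. A real system would also have to handle calibration, multiple screens, and tracking noise, none of which is covered here.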

Strangely, I can't find much information on how g-speak does this. Users must wear special gloves, which you can see in the videos, and these gloves help the system track hands and recognize gestures. Beyond that, Oblong isn't very forthcoming as to what cameras, accelerometers, or wireless communications are used. Hopefully these hardware details will become more accessible after Underkoffler's TED video is released.

While demonstration videos are great, you have to wonder how the average user will feel trying to learn to work in a spatial operating environment. Luckily, at the same time that Sparrell was discussing Oblong's ambitious plans at MIT, other members of the company were experimenting with real-world uses. Here we can see a virtual pottery application where the artist shapes the digital clay and then has a 3D frame of his work. Notice how the user is still adapting to some aspects of the gesture language but feels confident in others.

Let's take a look at Underkoffler's original concept for spatial operating environments in these clips from Minority Report. Feel free to skip around.

Watching the video above, it's clear that g-speak is an adaptation of the Minority Report idea, or vice versa. Gloves, gestures, huge shared display spaces - it's all there. We also get a good idea of how Oblong may envision future extensions of the spatial operating environment. Anyone familiar with Apple's iPad could see how tablet computing could easily attach to the g-speak concept as a means of taking one's work on the go. In the clips, a data pad is even used as a means of exchanging files between systems. You also get the sense that the environment works best when coupled with a massively powerful graphics engine so that every movement of the user can correspond to instantaneous changes in displayed digital information.

Curiously, what you don't see is any inclusion of haptics. Those special gloves could easily be fitted with vibrating rings to give users the sensation of touching what they are seeing. Also, none of these demos truly give me an idea of who the ultimate user of this product will be. Does Oblong envision g-speak as a universal replacement for mouse and keyboard? Underkoffler mentioned at TED that he thought these systems may be included with new computers in less than five years. If so, how are we going to use g-speak? Most of us aren't video editors, or responsible for coordinating military missions, or cops sifting through the visual memories of psychics - we just edit word processor files, browse the web, and watch media. Those tasks don't require advanced human-computer interfaces.

But maybe they will. Maybe developments in these gesture technologies will change the way in which we use our computers, making our browsing more multi-space, multi-level, and multi-tasking. It's hard to say. With SDKs available for Linux and Mac OS X developers, it could be that third parties take this platform and discover its ultimate end use. Maybe we'll see something closer to a personal augmented reality system like Pranav Mistry's SixthSense. In any case, g-speak and the spatial operating environment look so frakkin' cool that I can't imagine them not succeeding on at least some level. Who wouldn't want to have access to the technology of 2054, today?

Related Articles

How Scientists Are Growing Computers From Human Brain Cells—and Why They Want to Keep Doing It

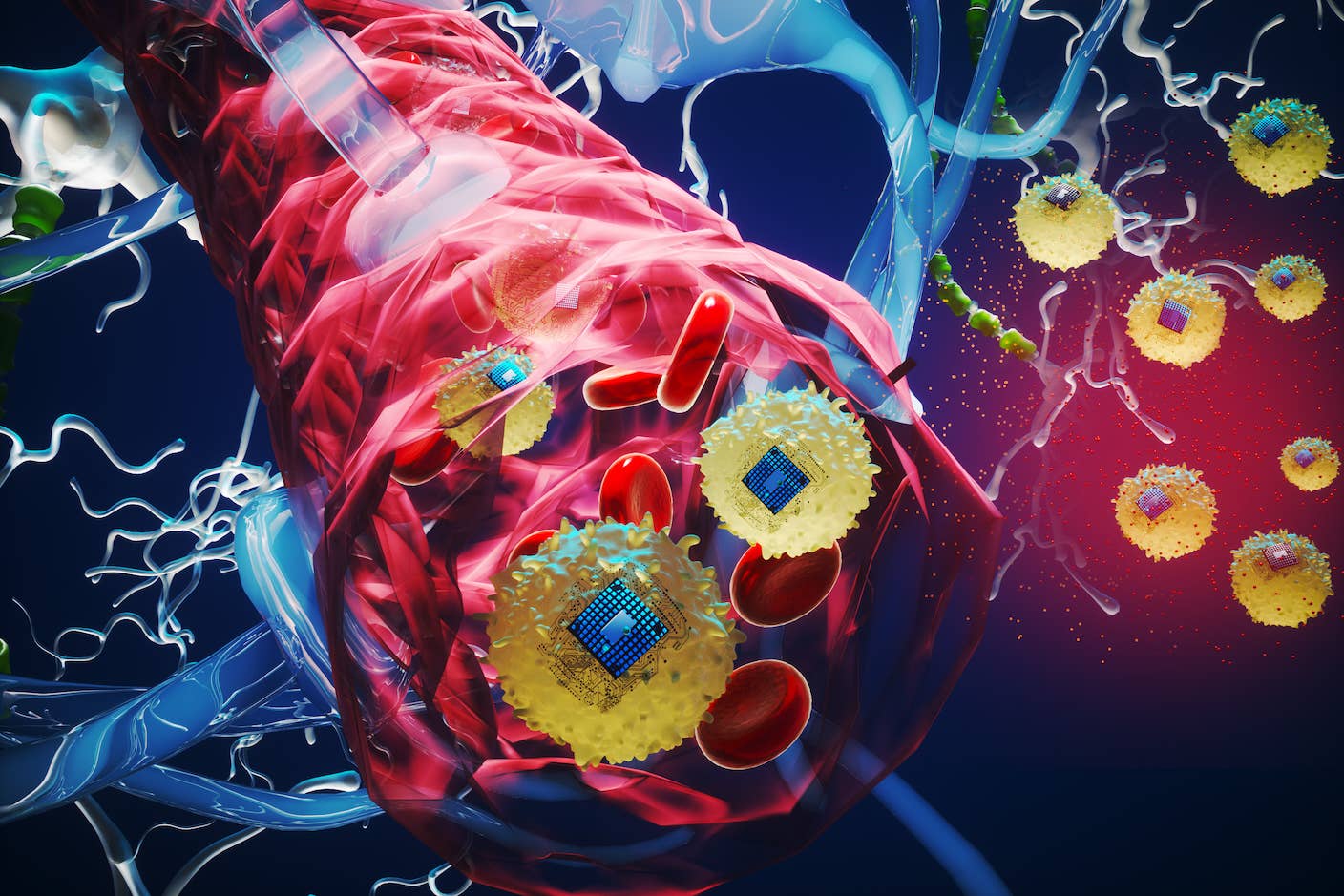

These Brain Implants Are Smaller Than Cells and Can Be Injected Into Veins

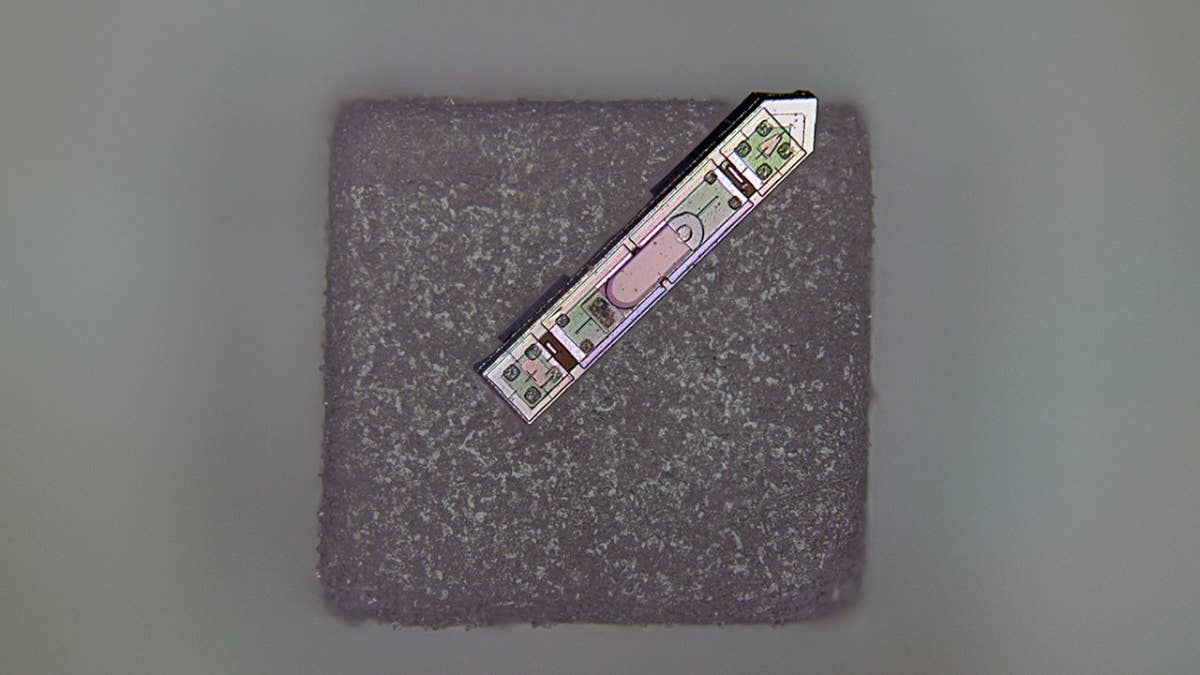

This Wireless Brain Implant Is Smaller Than a Grain of Salt

What we’re reading