Will you ever be treated by Dr. Watson? Not Sherlock Holmes’s right-hand man, but the AI Jeopardy! champion who’s poised to be a sidekick for future physicians. IBM and Nuance Healthcare have teamed up with Columbia University and the University of Maryland to build a medical Watson that’s fine-tuned to address the queries of doctors. The goal is to enhance decision-making and eventually put Watson on every medical center’s computational cloud. But is this the first sign of a shift in healthcare labor from humans to machines? Don’t hold your breath. Given the person-centered focus of treatment, we’re nowhere near having AI that can match the full capabilities of the human medical workforce. But don’t count Watson out either. As an assistant to physicians, AI could be a godsend to America’s healthcare system by facilitating accurate diagnosis.

The video above describes the natural limitations of doctors and how Watson’s powerful AI could supplement clinical cognition. Dr. Herbert Chase, a professor at Columbia University, says that diagnosis can be exceedingly complex (0:33), so pairing symptoms with a condition won’t always lead you to the right answer. Another limitation he points out is that physicians have been unable to keep up with the rapid growth of medical knowledge, which has been doubling every five to seven years (1:35). The ever-rising tide of biomedical literature is simply too much for the human brain to absorb. Dr. Chase links these limitations to the high incidence of delayed diagnosis. As Watson demonstrated on Jeopardy!, the AI could be up to the task on all these fronts. In a fraction of a second, Watson can comb through terabytes of data and formulate an answer. When lives are at stake, the speed and accuracy of a medical Watson could be an invaluable addition to patient care.

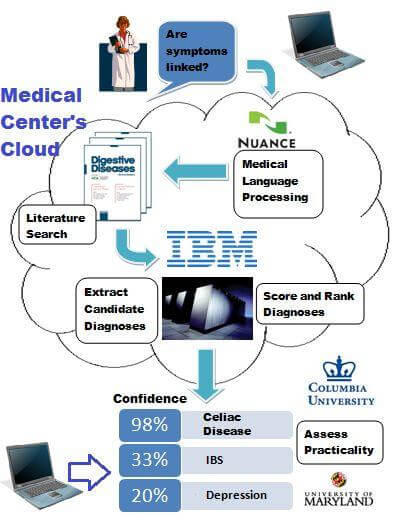

We won’t see a prototype for almost two years, but here’s how a medical Watson might work. As on Jeopardy!, Watson would masterfully dissect natural language. Unlike on the game show, however, spoken questions would first be handled by Nuance’s front-end speech recognition software, which is specifically tailored to medical jargon. The question would then be processed on the medical center’s computational cloud, so clinicians could pose questions remotely. With this approach, there’s no need to wait for laptops with the computing power of IBM’s Blue Gene.

As illustrated in the diagram, an internist could ask, “My patient, Jane, has had digestive issues and has also lost interest in bowling, her favorite hobby. Could these be linked?” Once Nuance’s software has transcribed the question, IBM’s Blue Gene supercomputers would focus on the words “lost interest.” After a cursory search of the DSM (the Diagnostic and Statistical Manual of Mental Disorders), the computer would recognize this as a symptom of depression. Watson would then scan hundreds of journals, looking for articles in which “depression” and “digestive problems” co-occur. He would eventually come across articles suggesting that coincident depressive and digestive symptoms are associated with celiac disease, an under-diagnosed autoimmune disorder. Once Watson finds enough articles supporting this hypothesis, the answer would emerge from the cloud to be read by the physician, who would follow up with lab tests for confirmation. After adopting a gluten-free diet to prevent a relapse, Jane could be back to bowling in no time, thanks to both her physician and the Watson computer. Without Watson, the doctor could have danced around the diagnosis for weeks before finally getting it right.
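To make the walkthrough concrete, here is a toy sketch of the "find articles where the symptoms co-occur" idea. It is not how IBM or Nuance actually built Watson: the phrase-to-symptom dictionary (a hypothetical stand-in for a DSM lookup), the four-article corpus, the candidate diagnoses, and the `min_support` threshold are all invented for illustration.

```python
from collections import Counter

# Hypothetical stand-in for a DSM-style lookup; a real system would use
# curated medical ontologies, not a hand-written dict.
PHRASE_TO_SYMPTOM = {
    "lost interest": "depression",
    "digestive issues": "digestive problems",
}

# Toy corpus: each "article" is reduced to a set of index terms (invented data).
ARTICLES = [
    {"depression", "digestive problems", "celiac disease"},
    {"depression", "digestive problems", "celiac disease"},
    {"depression", "digestive problems", "irritable bowel syndrome"},
    {"depression", "insomnia"},
]

CANDIDATE_DIAGNOSES = {"celiac disease", "irritable bowel syndrome"}


def extract_symptoms(question: str) -> set[str]:
    """Map phrases found in the free-text question to symptom terms."""
    text = question.lower()
    return {symptom for phrase, symptom in PHRASE_TO_SYMPTOM.items() if phrase in text}


def rank_hypotheses(symptoms: set[str], min_support: int = 2) -> list[tuple[str, int]]:
    """Count articles where all symptoms co-occur with each candidate diagnosis,
    keeping only diagnoses backed by at least `min_support` articles."""
    support = Counter()
    for terms in ARTICLES:
        if symptoms <= terms:  # every symptom appears in this article
            for dx in terms & CANDIDATE_DIAGNOSES:
                support[dx] += 1
    return [(dx, n) for dx, n in support.most_common() if n >= min_support]


question = ("My patient, Jane, has had digestive issues and has also lost "
            "interest in bowling, her favorite hobby. Could these be linked?")
print(rank_hypotheses(extract_symptoms(question)))
```

The `min_support` cutoff plays the role of "once Watson finds enough articles supporting this hypothesis": weakly supported candidates are dropped rather than shown to the physician.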

This sounds like an impressive system on its own, but I think IBM and Nuance could do even better. To keep pace with the exponential growth of medical knowledge, Dr. Watson must be able to seamlessly integrate new information with existing data. Furthermore, thinking like a scientist and maintaining a computationally based skepticism would improve Watson’s accuracy. For instance, a medical Watson might weight each article by its number of citations, or ignore outdated or unsubstantiated information. The designers could also improve Watson’s accuracy by considering epidemiology: Watson could boost its confidence score for an infection if the same infection had also been reported at nearby clinics. If Watson adopts even one of these features, I will be even more impressed than I was by his Jeopardy! performance.
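The "computational skepticism" wished for above could look something like the sketch below. It is purely hypothetical, not a feature IBM has announced: the log-of-citations weighting, the 15-year cutoff for "outdated," the use of peer review as a proxy for "substantiated," and the fixed per-case boost for nearby reports are all assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Article:
    year: int
    citations: int
    peer_reviewed: bool  # assumed proxy for "substantiated"


def evidence_score(articles, current_year, max_age=15):
    """Sum article weights, skipping outdated or unsubstantiated sources.

    Each weight grows with the log of the citation count, so a few heavily
    cited papers cannot completely swamp the rest of the evidence.
    """
    score = 0.0
    for a in articles:
        if not a.peer_reviewed or current_year - a.year > max_age:
            continue  # ignore unsubstantiated or outdated evidence
        score += 1.0 + math.log1p(a.citations)
    return score


def confidence(score, nearby_cases=0, boost_per_case=0.05, cap=0.99):
    """Squash evidence into [0, 1), then add a small epidemiological boost
    for each matching case reported at nearby clinics (capped)."""
    base = 1.0 - math.exp(-score / 10.0)
    return min(cap, base + boost_per_case * nearby_cases)


articles = [
    Article(year=2009, citations=120, peer_reviewed=True),
    Article(year=1990, citations=500, peer_reviewed=True),  # outdated
    Article(year=2010, citations=3, peer_reviewed=False),   # unsubstantiated
]
score = evidence_score(articles, current_year=2011)
print(confidence(score), confidence(score, nearby_cases=3))
```

Only the first article counts toward the score here, and three reports from surrounding clinics nudge the confidence upward without ever letting it reach certainty.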

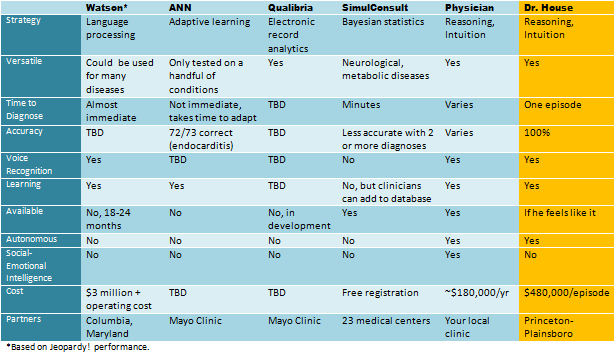

If IBM and Nuance successfully deploy a practical Watson, how might it stack up against other clinical decision support systems? The major competitor will be Qualibria, a joint venture between GE and Intermountain Healthcare. This clinical software is the final product of a prototype we’ve covered before. The purpose of Qualibria is to turn a hospital’s existing health information (i.e., electronic health records, or EHRs) into an ongoing clinical trial. In a recent ComputerWorld article, the CIO of Intermountain Healthcare questioned Watson’s usefulness in the clinic. He suggested that Watson’s analytics could be incompatible with the unstructured health information found in EHRs and other hospital data sources. We’ll see if Watson proves him wrong.

Watson and Qualibria aren’t the only players in town. We’ve also seen an artificial neural network (ANN) for diagnosis, which is still in the experimental stages. The advantage of this method is that it can adapt to new problems through trial and error, much like the brains of human doctors. Unfortunately, the system must be “trained” before it performs well, and the ANN has only been tested on a handful of conditions, such as endocarditis and heart murmurs. There’s also SimulConsult, a system that registered physicians can update, which makes it a bit like clinical crowdsourcing. However, it’s limited to certain kinds of disorders. With Qualibria and the other players in the field, Watson has his work cut out for him. See how Watson could match up to his competitors (and human doctors) in the table below.

Even though Watson packs a computational punch, there’s no reason to suspect AI will replace doctors in the near term. And IBM agrees. In the video at the beginning of this article, the IBMers make it perfectly clear that Watson is intended to be only an assistant. However, that hasn’t stopped people from speculating. After his historic loss to Watson on Jeopardy!, Ken Jennings made a bold prediction about AI replacing human workers:

> Just as factory jobs were eliminated in the 20th century by new assembly-line robots, Brad [Rutter] and I were the first knowledge-industry workers put out of work by the new generation of “thinking” machines. “Quiz show contestant” may be the first job made redundant by Watson, but I’m sure it won’t be the last.

The implications of this statement parallel issues we’ve covered before, like Martin Ford’s hypothesis that advanced AI will cause structural unemployment for even the most highly paid, cognitively demanding jobs. If machines have a better price-performance ratio than people, there’s nothing keeping the higher-ups from adopting automation. But actually building an autonomous AI physician responsible for human life? Easier said than done.

Let’s first identify hospital tasks that are within reach of state-of-the-art AI. Systems that automatically triage patients, or robots that roam hospital hallways to collect vital signs, seem attainable. And if Watson becomes a capable question generator (not just an answerer), machines could even perform the initial clinical interview for some patients. It would be relatively uncomplicated to generate standard questions about diet, family history, and health behaviors. With a little algorithmic ingenuity, AI interviewers could pursue more in-depth lines of questioning when patients give particular answers, or alert a doctor or nurse when a patient requires human attention.
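The adaptive interview described above can be sketched as a small question tree: each standard question may trigger follow-ups for certain answers, and some answers flag the patient for a human. This is a hypothetical illustration only; the questions, the trigger answers, and the escalation rule are made up and carry no clinical meaning.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    text: str
    # Maps a normalized answer to the follow-up questions it triggers.
    follow_ups: dict = field(default_factory=dict)
    # Answers that should immediately alert a doctor or nurse.
    escalate_on: set = field(default_factory=set)


# Hypothetical intake script (illustrative questions, not clinical guidance).
INTAKE = [
    Question("Do you smoke?",
             follow_ups={"yes": [Question("How many packs per day?")]}),
    Question("Does anyone in your family have heart disease?",
             follow_ups={"yes": [Question("Have you had chest pain recently?",
                                          escalate_on={"yes"})]}),
    Question("How many servings of vegetables do you eat per day?"),
]


def run_interview(questions, answer_fn):
    """Ask questions depth-first so follow-ups come right after their trigger.

    Returns the (question, answer) transcript and whether a human is needed.
    """
    transcript = []
    needs_human = False
    stack = list(reversed(questions))
    while stack:
        q = stack.pop()
        answer = answer_fn(q.text).strip().lower()
        transcript.append((q.text, answer))
        if answer in q.escalate_on:
            needs_human = True
        # Push triggered follow-ups so they are asked next, in order.
        stack.extend(reversed(q.follow_ups.get(answer, [])))
    return transcript, needs_human


# Scripted answers standing in for a real patient.
scripted = {
    "Do you smoke?": "No",
    "Does anyone in your family have heart disease?": "Yes",
    "Have you had chest pain recently?": "Yes",
    "How many servings of vegetables do you eat per day?": "2",
}
transcript, alert = run_interview(INTAKE, lambda q: scripted[q])
for q, a in transcript:
    print(q, "->", a)
print("Alert clinician:", alert)
```

A depth-first stack keeps each follow-up next to the question that prompted it, which mirrors how a human interviewer would probe an answer before moving on.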

But let’s not get ahead of ourselves, techno-optimists. This is only a fraction of the physician’s skill set, and there are certain clinical competencies far beyond any current AI. Think of situations where a patient presents with symptoms undocumented in the medical literature. Physicians rely on intuition, experience, and imagination to guide them in these cases, and so far, fluid intelligence for AI is only theoretical. Sorry, Watson, but “What is Toronto?” is not an acceptable response when lives are at stake. Furthermore, good physicians also have keen emotional intelligence. Imagine a robot performing the most challenging task in any doctor’s career: informing a patient that they have a terminal illness. It’s not as simple as just saying the words. The exchange must sound sincere and sensitive to the emotional needs of the patient and family. If this emotionally intelligent human-machine dialogue ever passes a Turing Test, it will likely be the zenith of AI. I think this achievement is so far off that the most common illnesses afflicting patients will be cured by the time it comes to pass. For the foreseeable future, people will be running the healthcare show.

At the dawn of my own medical career, I’m not worried about AI in the clinic at all. In fact, I find it to be a rather exciting prospect, and I hope most doctors will similarly view AI as a partner, not a competitor. I imagine pacing the halls of the hospital, stroking my chin with one arm behind my back, while a mobile AI unit follows closely behind. I’m working on a difficult case and bouncing ideas off Dr. Watson, much like Sherlock Holmes solving a mystery. But I’m jumping the gun. My very own Watson will have to wait. Eight years of medical training, here I come.

<Image credits: IBM (modified), Nuance Communications, University of Maryland, Columbia University, Microsoft Clip Art>

<Video credits: IBM>

<Sources: IBM, ComputerWorld>