Robotic Quintet Composes And Plays Its Own Music

The German engineering firm Festo has developed a self-playing robotic string quintet that listens to a piece of music and generates new compositions in various musical styles.

Dubbed Sound Machines 2.0, the acoustic ensemble is made up of two violins, a viola, a cello, and a double bass. Each instrument consists of a single string whose pitch is set by an electric actuator, a pneumatic cylinder that acts as a hammer to vibrate the string, and a 40-watt speaker. A new composition is generated in a two-stage process. First, a melody played on a keyboard or xylophone is broken down into the pitch, duration, and intensity of each note, and software applying various algorithms and compositional rules derived from Conway's "Game of Life" generates a new composition of a set length. Then, the five robotic instruments receive this new composition, reinterpret it, and listen to one another as they produce an improvisational performance.
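To make the first stage more concrete, here is a minimal Python sketch of the general idea: a melody is stored as notes with pitch, duration, and intensity, and a Game of Life grid seeded from those notes is stepped forward to produce new pitches until a set length is reached. This is not Festo's software. The `Note` class, the `compose` function, the MIDI-style pitch numbers, and the way grid cells are mapped to pitches are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    pitch: int       # assumed MIDI note number (60 = middle C)
    duration: float  # length in beats
    intensity: int   # assumed MIDI-style velocity, 0-127


def life_step(grid):
    """Advance a wrap-around grid by one Game of Life generation."""
    rows, cols = len(grid), len(grid[0])
    new = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            neighbors = sum(
                grid[(r + dr) % rows][(c + dc) % cols]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            # Standard rules: birth on 3 neighbors, survival on 2 or 3.
            alive = neighbors == 3 or (grid[r][c] == 1 and neighbors == 2)
            new[r][c] = 1 if alive else 0
    return new


def compose(motif, length, rows=12):
    """Hypothetical mapping: rows are semitones above the motif's lowest
    pitch, columns are note positions. Expects a non-empty motif; three
    or more notes keep the wrap-around neighborhood sensible."""
    low = min(note.pitch for note in motif)
    grid = [[0] * len(motif) for _ in range(rows)]
    for col, note in enumerate(motif):
        grid[(note.pitch - low) % rows][col] = 1

    piece = []
    while len(piece) < length:
        grid = life_step(grid)
        for col, source in enumerate(motif):
            live_rows = [r for r in range(rows) if grid[r][col]]
            # Fall back to the original pitch when the column is empty.
            pitch = low + live_rows[0] if live_rows else source.pitch
            piece.append(Note(pitch, source.duration, source.intensity))
    return piece[:length]


motif = [Note(60, 1.0, 80), Note(62, 0.5, 70), Note(64, 0.5, 90), Note(67, 1.0, 100)]
for note in compose(motif, length=8):
    print(note)
```

In this sketch the rhythm and dynamics of the input melody are reused unchanged and only the pitches evolve. A real system would presumably vary all three and add stylistic rules on top.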

Here's an example of what the robots can produce:

Festo is better known for projects from its Bionic Learning Network, which include amazing animal-inspired robots:

- SmartBird

- The elephant trunk-inspired Bionic Handling Assistant

- Robotino

- AirPenguin

- AquaPenguin

- AirJelly and AquaJelly

- AquaRay

- Airacuda (these are just too cool not to mention).

But in 2007, the company also experimented with blending technology and art when it developed the first Sound Machines, composed of a string quartet and a drum.

It's worth taking a look at the Sound Machines 1.0 too:

Essentially, the system's software is mimicking what composers have done for centuries. In music theory, a motif is a short sequence that becomes the theme of a composition, such as a concerto or opera. A composer starts with a motif and evolves it, morphing and changing it throughout the course of the piece. Part of what makes composers like Mozart, for example, great is their ability to take a simple motif and make magic out of it. But a musical piece is not only about how a motif is handled by a composer. Musicians in a quartet, for instance, also work together to bring the motif in a composition to life, interpreting each instrument's role in the performance. On a much larger scale, conductors work to bring all of the instruments of an orchestra together into a cohesive whole to emphasize that motif in their vision.

What's amazing is that the algorithms of Sound Machines 2.0 are accomplishing many of these tasks in real time to create the same kind of magic.

The team at Festo isn't the only one interested in robot musicians. Expressive Machines Musical Instruments is an organization that develops machines that play instruments and ran a Kickstarter campaign at the beginning of last year for a band of robots called MARIE. California Institute of the Arts has also employed bots in a human-robot concert that was performed last year. Others are experimenting with robots and music, including jazz legend Pat Metheny and composer David Cope.

The fact that robots can create classical music shouldn't be too surprising, as Western music's 12-note system is highly mathematical, lending itself to complex algorithmic analysis. Coupled with all the research going into deconstructing the human brain, including how music changes the brain, robotic music seems logical (pun intended). While these early efforts may not sound pleasing to everyone's ears, it will only be a matter of time before robot-produced music becomes popular. Why? Because software developers have also figured out what makes music popular, as evidenced by the Hit Potential Equation that can predict whether a song will be a hit.
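As a small illustration of how mathematical the 12-note system is: in twelve-tone equal temperament, each semitone multiplies a note's frequency by the twelfth root of two, so twelve semitones exactly double it. The sketch below assumes the common conventions of MIDI note numbers and A4 tuned to 440 Hz, neither of which comes from Festo; `midi_to_hz` is a name chosen here for illustration.

```python
def midi_to_hz(note, a4=440.0):
    """Frequency of a MIDI note number in twelve-tone equal temperament.

    Assumes MIDI note 69 is A4, tuned to `a4` Hz.
    """
    return a4 * 2 ** ((note - 69) / 12)


# One octave (12 semitones) above A4 doubles the frequency.
print(midi_to_hz(69))            # A4
print(midi_to_hz(81))            # A5
print(round(midi_to_hz(60), 2))  # middle C
```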

In the not-so-distant future, a system like Sound Machines 2.0 could also turn anyone into a composer. A commercial Orchestra-In-A-Box product may allow a person to play a simple melody and have the robotic instruments create hours of live music. So rather than ripping through your music collection to put together music for a party, you could set the mood for the entire night in under a minute in whatever style you wanted.

In light of this, the true power of this technology may be in the way it places an emphasis on human creativity instead of merely replacing it. Festo could have easily produced a robot that makes music on its own, allowing people just to marvel at what it is capable of. However, the developers specifically chose to have the system respond to direct human input, thereby allowing people to connect to the composition as co-creators with the robots.

Whether Sound Machines 2.0 is merely a cool project or a window into the future of live music remains to be seen, but Festo's efforts clearly demonstrate that robots aren't just about replacing people's jobs. In an increasing number of ways, they are empowering human creativity.

[Media: Festo]

David started writing for Singularity Hub in 2011 and served as editor-in-chief of the site from 2014 to 2017 and SU vice president of faculty, content, and curriculum from 2017 to 2019. His interests cover digital education, publishing, and media, but he'll always be a chemist at heart.

Related Articles

Scientists Want to Give ChatGPT an Inner Monologue to Improve Its ‘Thinking’

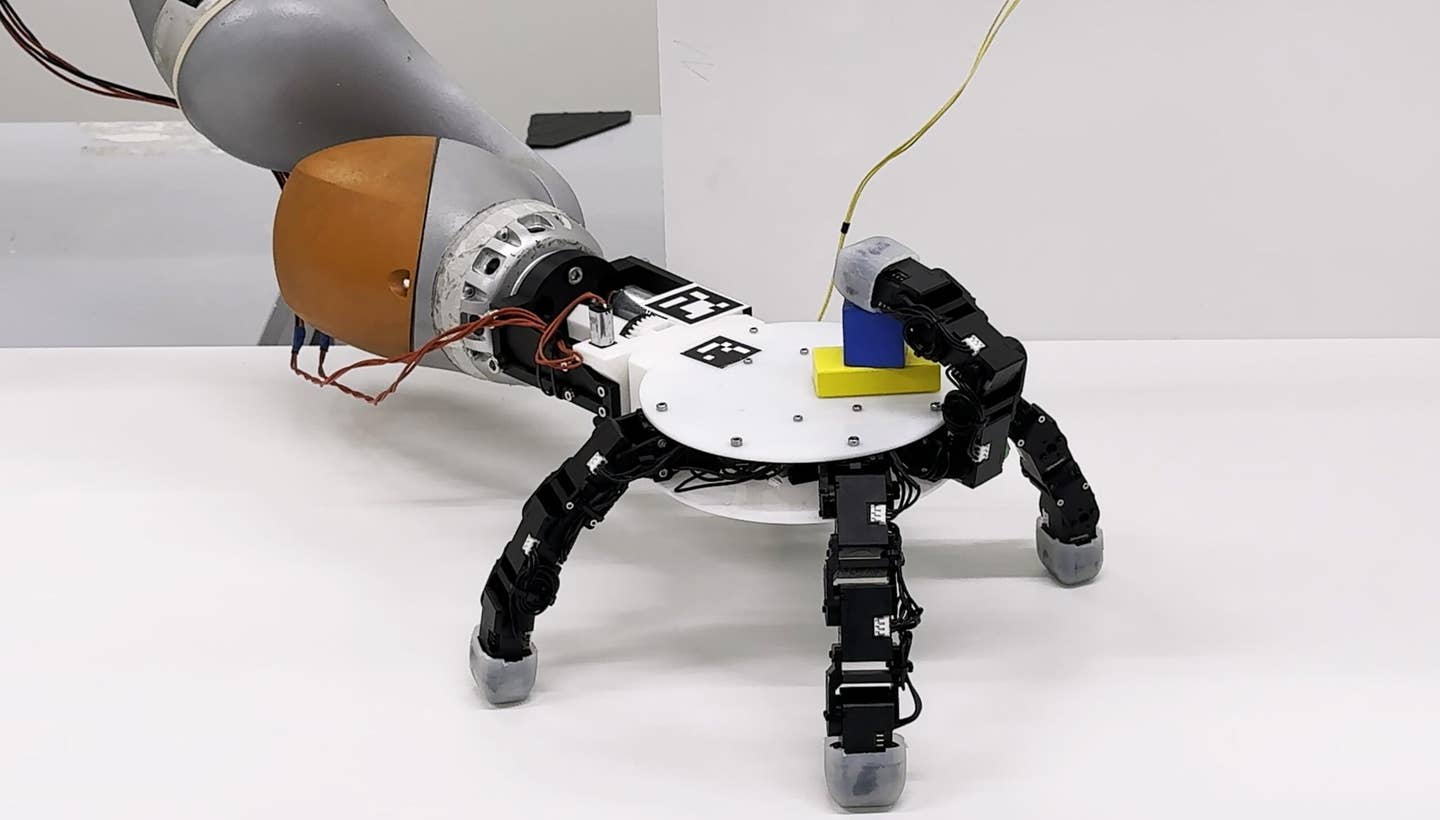

This Robotic Hand Detaches and Skitters About Like Thing From ‘The Addams Family’

Humanity’s Last Exam Stumps Top AI Models—and That’s a Good Thing

What we’re reading