In recent years, the falling cost of 3-D printers has been a major driver of technical innovation and job growth. The gradual entry of individuals and small businesses into 3-D printing accounts for much of the excitement about the industry — but only a small fraction of its $2.2 billion market footprint.

One of the major barriers to entry is the difficulty of generating the 3-D computer models that serve as the instruction sheets for printers.

In a paper they will present at Siggraph Asia in December, Ariel Shamir, of the Interdisciplinary Center at Herzliya, and Daniel Cohen-Or and Tao Chen of Tel Aviv University hope to knock that barrier down with software they’ve developed that allows the user to extract the beginnings of a 3-D model of an object from a single photograph.

“The key idea is that you could create 3D objects based only on single images,” Shamir told Singularity Hub. “We wanted a model that would be simple for almost anyone to use.”

In other words, someone who saw an object only once and took a decent photograph of it could print up a prototype of it later that day.

Users can already produce 3-D models from photographs, but the new software ostensibly makes it faster and easier by eliminating the need for expensive, labor-intensive CAD software.

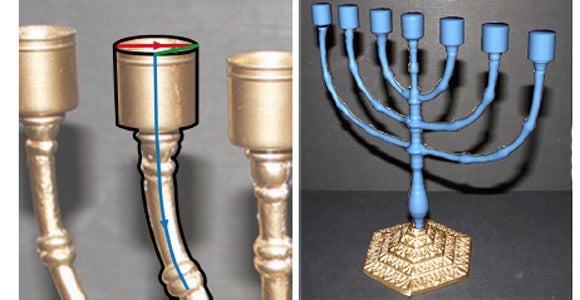

The trouble is one that most users of Photoshop have encountered: Computers are not very good at identifying where one object in an image ends and another begins. But humans are. The software, called 3-Sweep, invites the user to identify each simple three-dimensional object for the computer by drawing a line across each of its three basic axes.

The computer then highlights the object and allows the user to rotate, resize and move it around in three dimensions, offering what amounts to power-editing in two dimensions and a bridge to 3-D modeling. When moving and resizing objects, the software preserves parallelism, concentricity and orthogonality. It can also smooth edits: when the user signals that a part brought in from a different image belongs to a single whole, the software replicates the lighting and texture of one part of the object and applies them to the other.
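For readers curious about why three lines are enough, here is a minimal Python sketch of the simplest case, a box. It rests on a large assumption: that the user's three strokes have already been turned into 3-D edge vectors meeting at one corner, which is the genuinely hard step this sketch skips. The function name `cuboid_from_axes` is hypothetical and is not taken from 3-Sweep; the sketch only shows that three axes fully determine a cuboid, and it rejects non-orthogonal axes, echoing the orthogonality the software preserves.

```python
from itertools import product

Vec = tuple[float, float, float]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(v: Vec, s: float) -> Vec:
    return (v[0] * s, v[1] * s, v[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cuboid_from_axes(origin: Vec, u: Vec, v: Vec, w: Vec,
                     tol: float = 1e-9) -> list[Vec]:
    """Return the 8 corners of a box spanned by three edge vectors.

    Hypothetical illustration: u, v and w stand in for the user's three
    strokes *after* they have been lifted into 3-D; recovering them from
    a flat photograph is not attempted here.
    """
    # A cuboid's three edge directions must be mutually orthogonal.
    for a, b in ((u, v), (v, w), (u, w)):
        if abs(dot(a, b)) > tol:
            raise ValueError("axes must be mutually orthogonal")

    # Every corner is the origin plus 0 or 1 times each edge vector.
    corners = []
    for i, j, k in product((0, 1), repeat=3):
        corners.append(add(add(add(origin, scale(u, i)), scale(v, j)),
                           scale(w, k)))
    return corners
```

For example, `cuboid_from_axes((0, 0, 0), (2, 0, 0), (0, 1, 0), (0, 0, 3))` yields the eight corners of a 2 × 1 × 3 box, the last of which is `(2, 1, 3)`. A real tool would then use those corners to cut the matching texture out of the photo; the sketch stops at the geometry.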

The software works with cuboids and cylinders, even if they are bent. But it doesn’t yet work for something like an engine or anything with a lot of small, intricate parts.

“It’s more for general simple objects, which are still a large part of what people are needing,” Shamir said.

Shamir and his colleagues haven’t decided yet whether to pursue 3-Sweep as a commercial product, but they are continuing to improve the software and have applied for a patent on what they’ve accomplished so far, Shamir told Singularity Hub.

Photos courtesy Ariel Shamir and Daniel Cohen-Or