How One Researcher Is Using VR to Help Our Eyes Adapt to Seeing in Space

It’s not like moon-walking astronauts don’t already have plenty of hazards to deal with. There’s less gravity, extreme temperatures, radiation—and the whole place is aggressively dusty. If that weren’t enough, it also turns out that the visual-sensory cues we use to perceive depth and distance don’t work as expected—on the moon, human eyeballs can turn into scam artists.

During the Apollo missions, astronauts routinely underestimated the size of craters, the slopes of hills, and the distance to certain objects, a well-documented phenomenon. Objects appeared much closer than they were, which created headaches for mission control. Astronauts sometimes overexerted themselves and depleted their oxygen supplies trying to reach objects that were farther away than expected.

This phenomenon has also become a topic of study for researchers trying to explain why human vision functions differently in space, why so many visual errors occurred, and what, if anything, we can do to prepare the next generation of space travelers.

According to Katherine Rahill, a PhD candidate at the Catholic University of America who studies psychophysics—a field that looks at human sensory perceptions and responses to physical stimuli like light, sound, touch, and even gravity—the visual distortions that occurred on the moon can be explained by the lack of atmosphere. On the moon, light scatters across the lunar terrain differently than on Earth.

“What I’m proposing in my research is that atmospheric light scattering on the moon resulted in the washing away of certain textures and other ecological features that are inherent to human perception,” she told me.

To test her theories, Rahill is using virtual reality (VR) to simulate the way objects appear on the surface of the moon, and to measure the way people perceive things like slope angles and distance.

“VR is great because in the last five or so years it has increased dramatically in terms of its rendering capabilities and visual acuity,” she said.

Rahill explained that developers working in game engines like Unity, which she uses to build her simulations, have to understand and implement very real physics equations that describe the way light disperses.

“In a gaming environment, if you’re on a planet with any kind of atmosphere, then there’s an individual or team that actually took apart an incredibly long atmospheric equation to recreate the way light would scatter on that hypothetical planet. What I’m doing with my research is controlling for the physics of light, looking at complex particle light scattering, and replicating the visual effects that occur on the moon,” she said.

In one of her experiments, Rahill has student test subjects come to the lab and spend time in three different simulated worlds: an Earth environment, a lunar environment, and a hypothetical environment that places an Earth-like atmosphere on the surface of the moon.

Each test subject is asked to estimate things like distance and the slope of hills inside all three environments, using both a large curved screen display and a fully immersive VR headset like the Oculus Rift.

Rahill uses a large screen in addition to the VR headsets because it is not yet clear whether a fully immersive display offers any advantage over a conventional screen when measuring depth perception.

“These headsets have come a long way, but I want to make an additional contribution to the literature given there’s a lot of conflicting evidence in terms of what is actually most beneficial if you’re examining depth perception,” she told me. “I’m working to see what we can do, if anything, to improve their usefulness.”

Understanding the way humans see in space will be increasingly important, especially as NASA recently announced plans to return to the moon, and perhaps Mars, in the next decade.

“If we’re going to be sending these astronauts on long-duration space missions, we’ll need to find the tools that will help prevent them from underestimating distance to ensure they don’t deplete essential resources like oxygen during navigation,” Rahill said.

She’s hopeful that VR can help us prepare for that.

Image Credit: Gorodenkoff / Shutterstock.com

Aaron Frank is a researcher, writer, and consultant who has spent over a decade in Silicon Valley, where he most recently served as principal faculty at Singularity University. Over the past ten years he has built, deployed, researched, and written about technologies relating to augmented and virtual reality and virtual environments. As a writer, his articles have appeared in Vice, Wired UK, Forbes, and VentureBeat. He routinely advises companies, startups, and government organizations with clients including Ernst & Young, Sony, Honeywell, and many others. He is based in San Francisco, California.

Related Articles

Elon Musk Says SpaceX Is Pivoting From Mars to the Moon

What If We’re All Martians? The Intriguing Idea That Life on Earth Began on the Red Planet

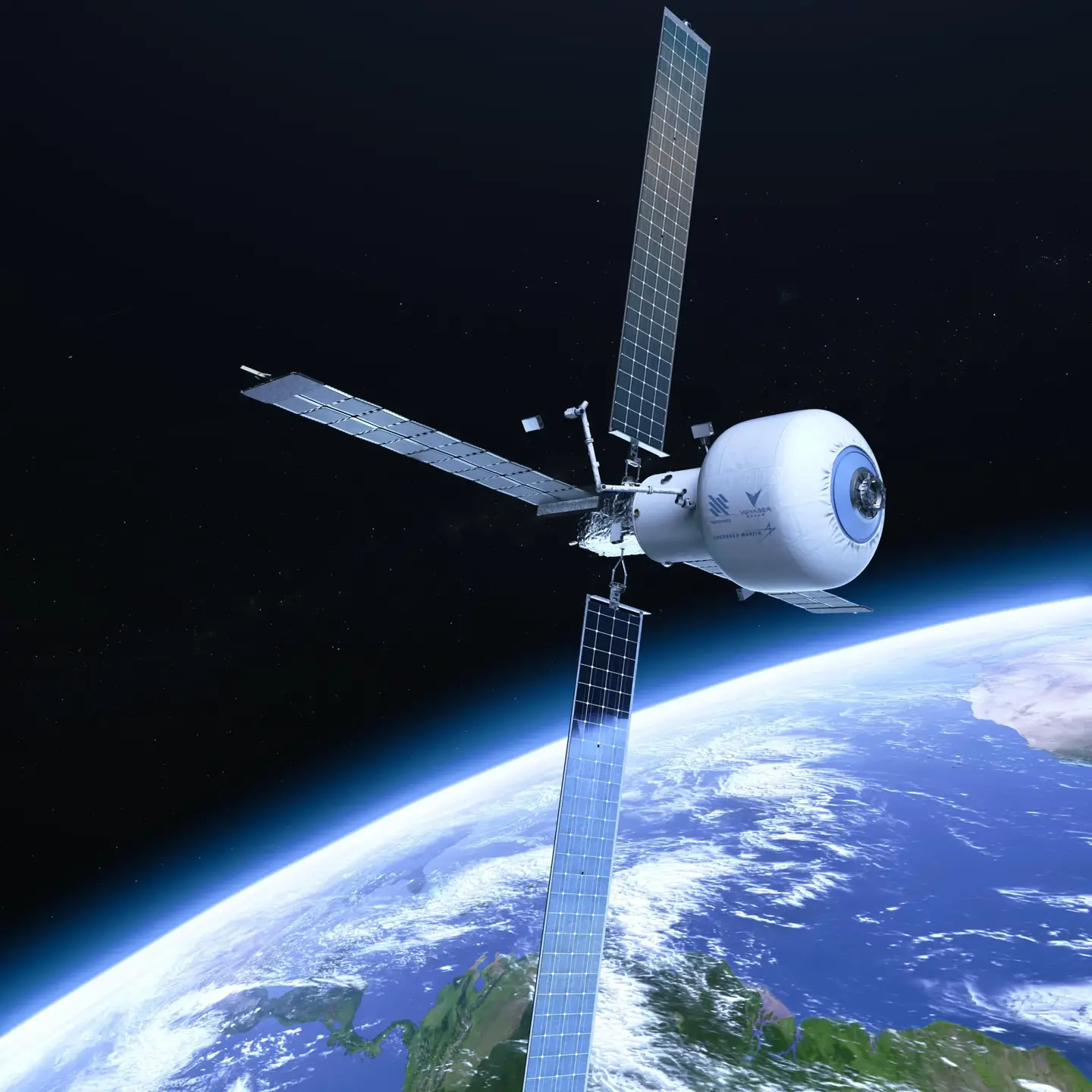

The Era of Private Space Stations Launches in 2026

What we’re reading