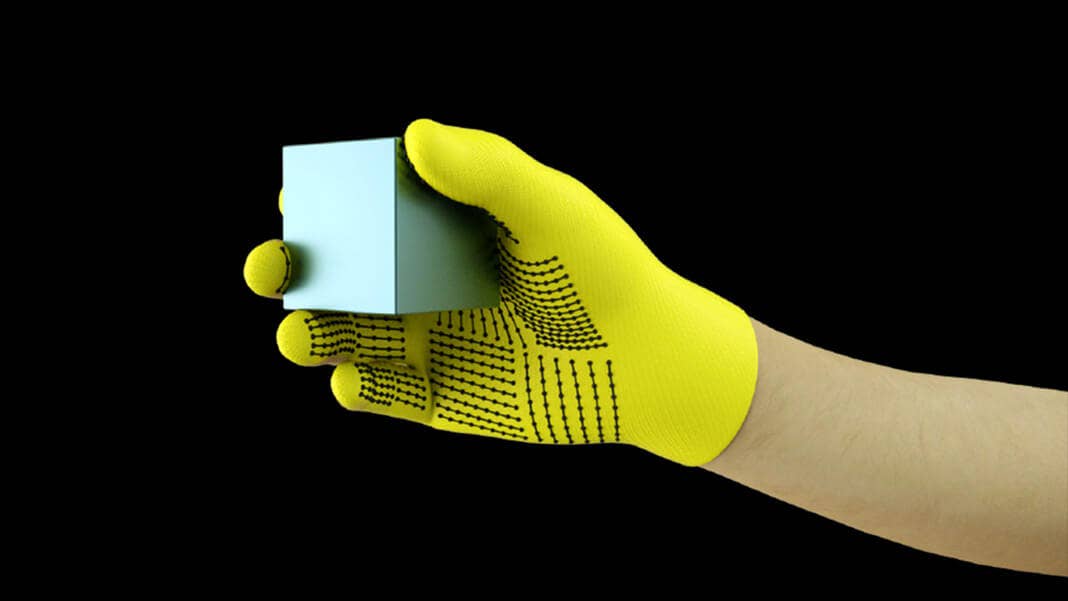

These $10 Sensor-Packed Gloves Could Give Robots a Sense of Touch


Machines are mastering vision and language, but one sense they're lagging behind on is touch. Now researchers have created a sensor-laden glove for just $10 and recorded the most comprehensive tactile dataset to date, which can be used to train machine learning algorithms to feel the world around them.

Dexterity would be an incredibly useful skill for robots to master, opening up new applications everywhere from hospitals to our homes. And robots have been making great strides in their ability to manipulate objects, with OpenAI’s cube-manipulating robotic hand being a particularly impressive example.

So far, though, they’ve had one hand tied behind their backs. Most approaches have relied on using either visual data or demonstrations to show machines how they should grasp objects. But if you look at how humans learn to manipulate objects, you realize that’s just one part of the puzzle.

Our sense of touch gives us constant feedback as we handle objects. This is how we learn to handle soft and rigid objects differently, know when something is slipping out of our hands, and are even able to identify things in the dark.

“Humans can identify and handle objects well because we have tactile feedback,” Subramanian Sundaram, a former graduate student at MIT now working at Boston University and Harvard University, said in a press release. “As we touch objects, we feel around and realize what they are. Robots don’t have that rich feedback.”

That’s why Sundaram and colleagues at MIT designed a sensor-packed glove that they hope could eventually give robots that same sense of touch. In a recent paper in Nature they describe how they used the glove to compile the largest-ever collection of tactile data, which can be used to train machine learning algorithms to identify objects from touch alone.

The device costs just $10 and is surprisingly simple. A flexible, force-sensitive film is attached to the outside of a standard knitted glove and interwoven with a 32 by 32 grid of conducting threads. The 548 points on the hand where horizontal and vertical threads overlap act as pressure sensors, because the force-sensitive film at each crossing changes its electrical resistance when pressed.
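To make the sensing principle concrete, here is a minimal Python sketch of how readings from a grid of resistive crossings might be turned into pressure estimates. It assumes each crossing's resistance has already been measured, that pressure rises as resistance falls (so the extra conductance, 1/R, over an unloaded baseline serves as a rough pressure proxy), and that a hypothetical boolean mask marks which of the 32 × 32 crossings are actual sensors on the hand. The function and parameter names are illustrative only, not the researchers' code.

```python
from typing import List

Grid = List[List[float]]
Mask = List[List[bool]]


def pressure_from_resistance(resistance: Grid, on_hand: Mask,
                             baseline_ohms: float) -> Grid:
    """Return a rough, unitless pressure estimate for each thread crossing.

    Assumption: pressing the force-sensitive film lowers its resistance, so the
    conductance gained over the unloaded baseline is used as a pressure proxy.
    Crossings outside the hypothetical `on_hand` mask always read 0.0.
    """
    baseline_conductance = 1.0 / baseline_ohms
    pressure: Grid = []
    for r_row, m_row in zip(resistance, on_hand):
        row = []
        for r, active in zip(r_row, m_row):
            if not active:
                row.append(0.0)
            else:
                # Clamp at zero so readings at or above baseline count as no pressure.
                row.append(max(0.0, 1.0 / r - baseline_conductance))
        pressure.append(row)
    return pressure
```

On a 32 × 32 readout, `on_hand` would be `True` at the 548 sensing crossings and `False` elsewhere. A real system would also need per-crossing calibration, which this sketch ignores.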

These electrical signals are converted into a “tactile map”: essentially a video of a hand-shaped graphic in which each of the 548 pressure points is a pixel ranging in color from black to white. The lighter a point, the higher the pressure.
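The mapping from pressure to pixel brightness can be sketched as a simple min-max normalization onto 8-bit grayscale, where 0 is black (lowest pressure in the frame) and 255 is white (highest). Per-frame normalization and the 8-bit range are assumptions chosen for illustration; the researchers' own rendering may scale values differently.

```python
from typing import List


def to_grayscale_frame(pressure: List[List[float]]) -> List[List[int]]:
    """Map one frame of pressure values to 8-bit grayscale pixels.

    0 (black) is the lowest pressure in the frame and 255 (white) the highest,
    so lighter pixels mean higher pressure. This per-frame min-max scaling is
    an illustrative assumption, not the published pipeline.
    """
    flat = [v for row in pressure for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        # A perfectly uniform frame has no contrast, so it renders as all black.
        return [[0 for _ in row] for row in pressure]
    return [[round(255 * (v - lo) / span) for v in row] for row in pressure]


def frames_to_video(frames: List[List[List[float]]]) -> List[List[List[int]]]:
    """A tactile 'video' is simply a sequence of grayscale frames."""
    return [to_grayscale_frame(f) for f in frames]
```

Normalizing each frame independently maximizes contrast within a frame but hides absolute differences between frames; a fixed global scale would preserve them.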

The researchers then used the glove to create a dataset by handling 26 everyday objects like batteries, scissors, mugs, and chains. The output was a series of tactile maps made up of a total of 135,000 video frames. To demonstrate the usefulness of this kind of data, they then used it to train a convolutional neural network (CNN), a type of deep learning algorithm typically used for visual tasks, to identify objects from their tactile maps.
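For readers unfamiliar with CNNs, the following pure-Python sketch shows the forward pass of a deliberately tiny one applied to a single tactile frame: a few 3 × 3 convolutions, a ReLU, global average pooling, and a linear layer producing one score per object class, converted to probabilities with softmax. The weights are random placeholders and the architecture is an illustrative assumption; the network in the paper is larger and is trained on the recorded frames.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution (cross-correlation, as in deep learning libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(x: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in x]


def global_avg_pool(x: Matrix) -> float:
    return sum(sum(row) for row in x) / (len(x) * len(x[0]))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def tiny_cnn_forward(frame: Matrix, kernels: List[Matrix],
                     weights: List[List[float]], biases: List[float]) -> List[float]:
    """Return class probabilities for one tactile frame.

    Each kernel yields one feature map, which is passed through ReLU and pooled
    to a single feature; weights[c][k] links pooled feature k to class c.
    """
    features = [global_avg_pool(relu(conv2d_valid(frame, k))) for k in kernels]
    scores = [sum(w * f for w, f in zip(w_row, features)) + b
              for w_row, b in zip(weights, biases)]
    return softmax(scores)


def random_params(n_kernels: int, n_classes: int, seed: int = 0):
    """Hypothetical untrained parameters; a real model would learn these."""
    rng = random.Random(seed)
    kernels = [[[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
               for _ in range(n_kernels)]
    weights = [[rng.uniform(-1, 1) for _ in range(n_kernels)]
               for _ in range(n_classes)]
    biases = [0.0] * n_classes
    return kernels, weights, biases
```

With 26 classes (one per object) and a 32 × 32 frame, `tiny_cnn_forward(frame, *random_params(4, 26))` returns 26 probabilities; training would adjust the kernels and weights so the correct object's probability rises.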


To prepare the training data, the researchers first identified the frames in which the objects were actually in contact with the glove. Then, because an object can feel very different depending on how it is grasped, the first step of the algorithm was to split the frames into clusters based on how similar their tactile maps are.

This groups together frames generated using the same grasp. One frame is then selected from each cluster, giving the CNN up to eight different frames as input. After training on this data, the system was able to identify objects from their tactile maps 76 percent of the time. A separate dataset, created by picking up and dropping objects, was used to train another CNN to predict their weight.
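The frame-selection step can be illustrated with plain k-means clustering over flattened frames, keeping the frame closest to each cluster's centre as that grasp's representative. The article only says frames are grouped by similarity, so k-means, Euclidean distance, the nearest-to-centre rule, and the helper names below are assumptions; only the cap of eight frames comes from the text.

```python
import random
from typing import List, Sequence

Vector = List[float]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Vector], k: int, iters: int = 20, seed: int = 0) -> List[int]:
    """Plain k-means; returns the cluster index assigned to each point."""
    rng = random.Random(seed)
    centres = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each frame joins its nearest centre.
        labels = [min(range(k), key=lambda c: sq_dist(p, centres[c])) for p in points]
        # Update step: move each centre to the mean of its member frames.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centres[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def select_representatives(frames: List[Vector], max_frames: int = 8) -> List[int]:
    """Cluster flattened frames and return one frame index per non-empty cluster."""
    k = min(max_frames, len(frames))
    labels = kmeans(frames, k)
    chosen = []
    for c in range(k):
        members = [i for i, lab in enumerate(labels) if lab == c]
        if not members:
            continue  # an empty cluster simply contributes no frame
        centre = [sum(col) / len(members)
                  for col in zip(*(frames[i] for i in members))]
        chosen.append(min(members, key=lambda i: sq_dist(frames[i], centre)))
    return chosen
```

For a 32 × 32 tactile map, each frame would be flattened to a 1,024-element vector before clustering. Picking the member nearest the centre, rather than the centre itself, guarantees the CNN sees a real recorded frame.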

Prior to this, say the researchers, the only way to collect this kind of tactile data across the entire hand was with the Tekscan Grip system, which is much more expensive and has only 349 sensors. The new low-cost device could make it feasible for far more researchers to collect their own tactile datasets and use them to push forward tactile sensing in machines.

The main limitations at present are the glove's dense sensor array and the correspondingly large number of wires it requires, as well as the relatively slow rate (seven frames per second) at which the tactile maps are recorded, writes Giulia Pasquale, a computer scientist at the Italian Institute of Technology, in an accompanying review of the research in Nature.

But it should be relatively simple to adapt the technology so it can be added to both robotic and prosthetic hands, she adds. In both cases that sense of touch could lead to breakthroughs in dexterity, and for prosthetic patients the tactile feedback could also help reduce phantom-limb pain and increase their sense of ownership over their prostheses.

Image courtesy of the researchers Subramanian Sundaram, Petr Kellnhofer, Yunzhu Li, Jun-Yan Zhu, Antonio Torralba & Wojciech Matusik, MIT / CC BY-NC-ND 3.0
