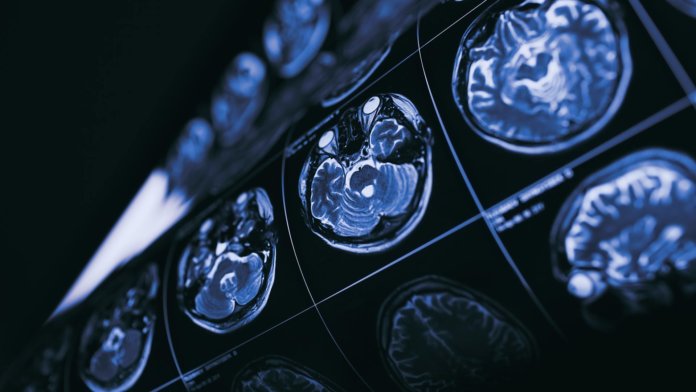

Medicine is one of the hottest fields for applying AI to real-world problems, particularly the use of deep learning systems to detect disease in medical imagery. There have been promising early results, notably DeepMind’s work on eye disease, but there has been widespread skepticism about whether these results would translate to the clinic.

Now the authors of a paper in The Lancet Digital Health have carried out the first systematic review and meta-analysis of all studies published between January 2012 and June 2019 that compared the ability of deep learning models to detect disease in medical images with that of health professionals.

The trawl found 20,500 articles tackling the topic, but, shockingly, fewer than 1 percent of them were scientifically robust enough for their claims to be trusted, say the authors. Of those, only 25 tested their deep learning models on unseen data, and only 14 actually compared performance with health professionals on the same test sample.

Nonetheless, when the researchers pooled the data from the 14 most rigorous studies, they found the deep learning systems correctly detected disease in 87 percent of cases, compared to 86 percent for health professionals, a measure known as sensitivity. They also did well on the equally important metric of correctly ruling out disease in patients who don’t have it, known as specificity, getting it right 93 percent of the time compared to 91 percent for humans.
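For readers unfamiliar with the two headline metrics, the short sketch below shows how sensitivity and specificity are calculated from a single study's counts of true positives (TP), false negatives (FN), true negatives (TN) and false positives (FP). The counts are hypothetical, chosen only for illustration, and are not data from the review; pooling results across many studies, as a meta-analysis does, relies on more involved statistical models than this simple per-study arithmetic.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Share of patients WITH the disease who are correctly flagged: TP / (TP + FN)."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Share of patients WITHOUT the disease who are correctly cleared: TN / (TN + FP)."""
    return tn / (tn + fp)


# Hypothetical counts for illustration only; not figures from the review.
tp, fn = 87, 13  # 100 patients who have the disease
tn, fp = 93, 7   # 100 patients who do not

print(f"sensitivity = {sensitivity(tp, fn):.2f}")
print(f"specificity = {specificity(tn, fp):.2f}")
```

A model can score well on one metric and badly on the other (flagging every patient as diseased gives perfect sensitivity but zero specificity), which is why the review reports both.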

Ultimately, then, the results of the review are broadly positive for AI, but damning of both the hype that has built up around the technology and the research practices of most of those trying to apply it to medical diagnosis.

“A key lesson from our work is that in AI, as with any other part of healthcare, good study design matters,” first author Xiaoxuan Liu, from the University of Birmingham, UK, said in a press release. “Without it, you can easily introduce bias which skews your results. These biases can lead to exaggerated claims of good performance for AI tools which do not translate into the real world,” Liu added.

The authors also note that even in the better-designed studies, the comparison was still not entirely realistic. Very few were carried out in real clinical environments, and only four gave health professionals the kind of clinical information that would be available in a real-world setting to help them make a diagnosis.

Perhaps more significantly, the studies did not measure what matters most in medical research: patient outcomes.

“Evidence on how AI algorithms will change patient outcomes needs to come from comparisons with alternative diagnostic tests in randomized controlled trials,” co-first author Livia Faes, from Moorfields Eye Hospital, London, said in the press release.

“So far, there are hardly any such trials where diagnostic decisions made by an AI algorithm are acted upon to see what then happens to outcomes which really matter to patients, like timely treatment, time to discharge from hospital, or even survival rates,” she said.

Despite the limitations, though, the authors say there’s cause for cautious optimism. For a start, research standards already seem to be improving; the majority of the studies that met the review’s minimum inclusion criteria were identified in the last year.

The early results suggest that deep learning holds huge potential in medical diagnosis, but the authors say there need to be more standardized and rigorous approaches to testing these systems. Otherwise, that promise will be undermined by over-the-top claims from dubious studies.

Image Credit: MriMan/Shutterstock.com