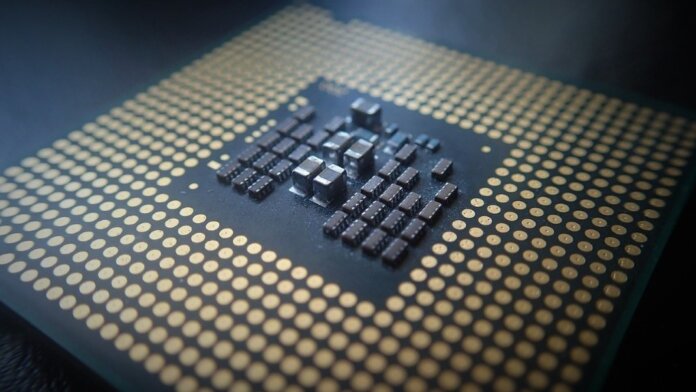

For decades, the computer chips that run everything from PCs to spaceships have looked remarkably similar. But as Moore’s Law slows, industry leaders are moving towards specialized chips, which experts say threatens to undermine the economic forces fueling our rapid technological growth.

The earliest computers were often designed to carry out very specific tasks, and even if they could be reprogrammed it would often require laborious physical rewiring. But in 1945, computer scientist John von Neumann proposed a new architecture that allowed a computer to store and execute many different programs on the same underlying hardware.

The idea was rapidly adopted, and the “von Neumann architecture” has underpinned the overwhelming majority of processors made since. That’s why, despite vastly different processing speeds, the chip in your laptop and one in a supercomputer operate in more or less the same way and are based on very similar design principles.

This made computers what is known as a “general-purpose technology.” These are innovations that can be applied to broad swathes of the economy and can have profound impacts on society. And one of the characteristics of these technologies is that they typically benefit from a virtuous economic cycle that boosts the pace of their development.

As early adopters start purchasing a technology, it generates revenue that can be ploughed back into further developing products. This boosts the capabilities of the product and reduces prices, which means more people can adopt the technology, fueling the next round of progress.

With a broadly applicable technology like computers, this cycle can repeat for decades, and indeed has. This has been the economic force that has powered the rapid improvement in computers over the last 50 years and their integration into almost every industry imaginable.

But in a new article in Communications of the ACM, computer scientists Neil Thompson and Svenja Spanuth argue that this positive feedback loop is now coming to an end, which could soon lead to a fragmented computing industry where some applications continue to see improvements but others get stuck in a technological slow lane.

The reason for this fragmentation is the slowing pace of innovation in computer chips, characterized by the slow death of Moore’s Law, they say. As we approach the physical limits of how much we can miniaturize the silicon chips that all commercial computers rely on, the time it takes for each leap in processing power has increased significantly, and the cost of reaching it has ballooned.

Slowing innovation means fewer new users adopting the technology, which in turn reduces the amount of money chipmakers have to fund new development. This creates a self-reinforcing cycle that steadily makes the economics of universal chips less attractive and further slows technical progress.
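As a rough illustration of this feedback loop, here is a toy Python sketch. It is not taken from Thompson and Spanuth's article: the function `simulate_cycle` and every parameter in it (`gain_per_dollar`, `reinvest_share`, `adoption_sensitivity`) are hypothetical, chosen only to show how the same loop compounds quickly when R&D spending buys large performance gains and stalls when it buys small ones.

```python
def simulate_cycle(years, gain_per_dollar, users=1.0, performance=1.0,
                   price=1.0, reinvest_share=0.2, adoption_sensitivity=0.5):
    """Toy model of the adoption/reinvestment loop (all values hypothetical).

    Each year: users generate revenue, a share of revenue funds R&D,
    R&D raises performance, and better performance attracts more users.
    """
    history = []
    for _ in range(years):
        revenue = users * price
        rd_budget = reinvest_share * revenue
        # Fractional performance gain bought by this year's R&D budget.
        improvement = gain_per_dollar * rd_budget
        performance *= 1 + improvement
        # Adoption grows in proportion to how much the product improved.
        users *= 1 + adoption_sensitivity * improvement
        history.append((users, performance))
    return history


# Same loop, two assumed regimes: R&D that still pays off well,
# and R&D that buys much less progress per dollar.
fast = simulate_cycle(years=20, gain_per_dollar=0.5)
slow = simulate_cycle(years=20, gain_per_dollar=0.05)

for label, run in (("fast", fast), ("slow", slow)):
    final_users, final_performance = run[-1]
    print(f"{label}: users x{final_users:.2f}, performance x{final_performance:.2f}")
```

In this sketch the only difference between the two runs is how much progress each dollar of R&D buys, yet the gap between them widens every year, because a smaller gain also means fewer new users and therefore a smaller budget for the next round.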

In parallel, the authors note, the cost of building state-of-the-art chip foundries has also risen dramatically, putting further pressure on the industry. For some time, the mismatch between market growth and rising costs has been driving consolidation, with the number of chipmakers falling from 25 in 2003 to just 3 by 2017.

As performance boosts slow, the case for specialized chips becomes increasingly compelling, say the authors. The design decisions that make chips universal can be sub-optimal for certain computing tasks, particularly those that can run many calculations in parallel, can be done at lower precision, or whose computations can be done at regular intervals.

Building chips specially designed for these kinds of tasks can often bring significant performance boosts, but if they only have small markets then they typically improve more slowly and cost more than universal chips. This is why their uptake has historically been low, but with the slowdown in universal chip progress that’s starting to change.

Today all major computing platforms, from smartphones to supercomputers and embedded chips, are becoming more specialized, say the authors. The rise of the GPU as the workhorse of machine learning, and increasingly of supercomputing, is the most obvious example. More recently, leading technology companies like Google and Amazon have even started building their own custom machine learning chips, as have bitcoin miners.

This means that niche applications that can benefit from specialization, and that have sufficient demand behind them, will see continued performance boosts. But where specialization isn’t an option, chip performance is likely to stagnate considerably, the authors say.

The shift to cloud computing could help to mitigate this process slightly by corralling demand for specialized processors, but more realistically, it will take a major breakthrough in computing technology to jolt us back into the kind of virtuous cycle we’ve enjoyed for the last 50 years.

Given the huge benefits our societies have reaped from ever-improving computing power, bringing about that kind of breakthrough should be a major priority.

Image Credit: Tobias Dahlberg from Pixabay