There was a time when converting an old photograph into a digital image impressed people. These days we can do a bit more, like bringing vintage photos to life à la Harry Potter. And this week, chipmaker NVIDIA performed another magic trick.

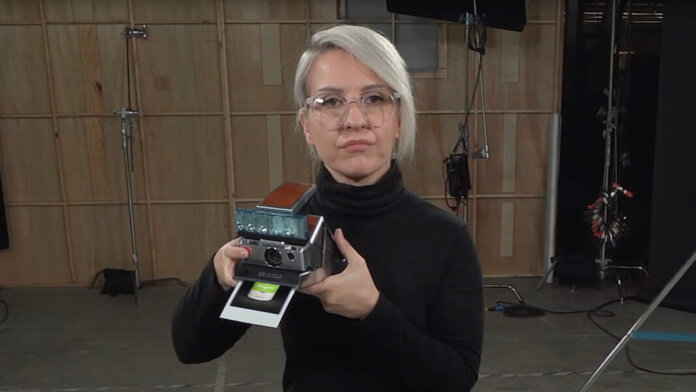

Building on previous work, NVIDIA researchers showed how a small neural network trained on a few dozen images can render the pictured scene in full 3D. As a demo, the team transformed images of a model holding a Polaroid camera—an ode to Andy Warhol—into a 3D scene.

The work stands out for a few reasons.

First, it’s very speedy. Earlier AI models took hours to train and minutes to render 3D scenes. NVIDIA’s neural network takes no more than a few minutes to train and renders the scene in tens of milliseconds. Second, the AI itself is diminutive in comparison to today’s hulking language models. Large models like GPT-3 train on hundreds or thousands of graphics processing units (GPUs). NVIDIA’s image rendering AI runs on a single GPU.

The work builds on neural radiance fields (NeRFs), a technique developed by researchers at UC Berkeley, UC San Diego, and Google Research, a couple years ago. In short, a NeRF takes a limited data set—say, 36 photographs of a subject captured from a variety of angles—and then predicts the color, intensity, and direction of light radiating from any point in the scene. That is, the neural net fills in the gaps between images with best guesses based on the training data. The result is a continuous 3D space stitched together from the original images.

NVIDIA’s recent contribution, outlined in a paper, puts NeRFs on performance enhancing drugs. According to the paper, the new method, dubbed Instant NeRF, exploits an approach known as multi-resolution hash grid encoding to simplify the algorithm’s architecture and run it in parallel on a GPU. This upped performance by a few orders of magnitude—their algorithm runs up to 1,000 times faster, according to an NVIDIA blog post—without sacrificing quality.

NVIDIA imagines the technology could find its way into robots and self-driving cars, helping them better visualize and understand the world around them. It could also be used to make high-fidelity avatars people can import into virtual worlds or to replicate real-world scenes in the digital world where designers can modify and build on them.

The speed and size of neural networks matter in such cases, as huge algorithms requiring prodigious amounts of computing power can’t be used by most people, nor are they practical for robots and cars without lightning-quick, dependable connections to the cloud.

The demo was part of NVIDIA’s developer conference this week. Other highlights included a system for self-driving cars that aims to map 300,000 miles of roads down to centimeters by 2024 and an AI supercomputer the company says will be fastest in the world upon release (a claim also made by Meta recently).

All this fits snugly into a larger narrative. The digital world is bleeding into the real world, and vice versa. And not just books, music, photos, documents, and payments—but people, places, and infrastructure. Given NVIDIA’s chips excel at AI and graphics, the company is well-positioned to have a hand in it all. Indeed, not content with creating digital replicas of individual scenes, the company has said it’s building a digital twin of the Earth too.

Granted, it’s getting increasingly difficult to draw the line between marketing and sales pitches and serious developments. It’s not uncommon to see mashups of all tech’s top buzzwords—NFTs, the metaverse, AI, blockchain—in one headline. But while vision seems to be outpacing capability, there are plenty of hints we’ll get there sooner or later.

A mini AI that can turn a pile of polaroids into a 3D scene is just one of them.

Image Credit: NVIDIA

Looking for ways to stay ahead of the pace of change? Rethink what’s possible. Join a highly curated, exclusive cohort of 80 executives for Singularity’s flagship Executive Program (EP), a five-day, fully immersive leadership transformation program that disrupts existing ways of thinking. Discover a new mindset, toolset and network of fellow futurists committed to finding solutions to the fast pace of change in the world. Click here to learn more and apply today!