AI and conventional computers are a match made in hell.

The main reason is how today’s chips are set up. Built on the traditional von Neumann architecture, a chip keeps memory storage separate from its main processors. Each computation is a nightmarish Monday morning commute, with the chip constantly shuttling data to and fro between the two compartments, forming a notorious “memory wall.”

If you’ve ever been stuck in traffic, you know the frustration: it wastes time and energy. As AI algorithms grow more complex, the problem only gets worse.

So why not design a chip based on the brain, potentially a perfect match for deep neural nets?

Enter compute-in-memory, or CIM, chips. True to their name, these chips compute and store data in the same place. Forget commuting; the chips are a highly efficient work-from-home alternative, nixing the data-traffic bottleneck and promising higher efficiency and lower energy consumption.

Or so goes the theory. Most work on CIM chips for AI has focused solely on chip design, showcasing their capabilities with simulations rather than running tasks on full-fledged hardware. The chips also struggle to adapt to multiple AI tasks, such as image recognition and voice perception, limiting their integration into smartphones and other everyday devices.

This month, a study in Nature upgraded CIM from the ground up. Rather than focusing solely on the chip’s design, the international team—led by neuromorphic hardware experts Dr. H.S. Philip Wong at Stanford and Dr. Gert Cauwenberghs at UC San Diego—optimized the entire setup, from technology to architecture to algorithms that calibrate the hardware.

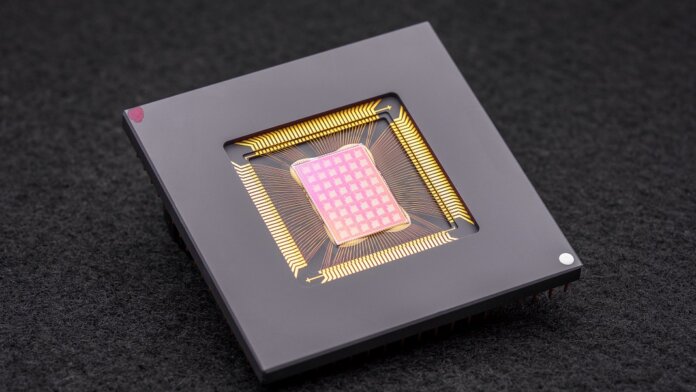

The resulting NeuRRAM chip is a powerful neuromorphic computing behemoth with 48 parallel cores and 3 million memory cells. Extremely versatile, the chip tackled multiple standard AI tasks, such as reading handwritten numbers, identifying cars and other objects in images, and decoding voice recordings, with over 84 percent accuracy.

While that success rate may seem mediocre, it rivals existing digital chips while using dramatically less energy. To the authors, it’s a step closer to bringing AI directly to our devices rather than shuttling data to the cloud for computation.

“Having those calculations done on the chip instead of sending information to and from the cloud could enable faster, more secure, cheaper, and more scalable AI going into the future, and give more people access to AI power,” said Wong.

Neural Inspiration

AI-specific chips are now an astonishing dime a dozen. From Google’s Tensor Processing Unit (TPU) and Tesla’s Dojo supercomputer architecture to Baidu and Amazon, tech giants are investing millions in the AI chip gold rush to build processors that support increasingly sophisticated deep learning algorithms. Some even tap into machine learning to design chip architectures tailored for AI software, bringing the race full circle.

One particularly intriguing concept comes straight from the brain. As data passes through our neurons, they “wire up” into networks through physical “docks” called synapses. These structures, sitting on top of neural branches like little mushrooms, are multitaskers: they both compute and store data through changes in their protein composition.

In other words, neurons, unlike classic computers, don’t need to shuttle data from memory to CPUs. This gives the brain its advantage over digital devices: it’s highly energy efficient and performs multiple computations simultaneously, all packed into a three-pound jelly stuffed inside the skull.

Why not recreate aspects of the brain?

Enter neuromorphic computing. One hack is to use RRAM, or resistive random-access memory devices (also dubbed ‘memristors’). RRAM devices retain data even when cut off from power, storing it as changes in their electrical resistance. Similar to synapses, these components can be packed into dense arrays on a tiny area, creating circuits capable of highly complex computations without bulk. When combined with CMOS, the technology used to build the circuits in today’s microprocessors and chips, the duo becomes even more powerful for running deep learning algorithms.
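To make the “compute where the data lives” idea concrete, here is a minimal Python sketch of the standard textbook picture of an RRAM crossbar: each cell’s conductance stores a weight, input voltages drive the rows, and each column’s total current is a weighted sum (Ohm’s law for each cell, Kirchhoff’s current law along the column). It is idealized, assuming linear devices with no noise or wire resistance. The function name `crossbar_mvm`, the differential-pair encoding of signed weights, and the example values are illustrative assumptions, not details from the NeuRRAM paper.

```python
from typing import List

Matrix = List[List[float]]


def crossbar_mvm(g_pos: Matrix, g_neg: Matrix, v_in: List[float]) -> List[float]:
    """Idealized analog matrix-vector multiply on a resistive crossbar (illustrative only).

    g_pos[i][j], g_neg[i][j]: conductances of the cell pair at row i, column j.
    A signed weight is assumed to be encoded as g_pos - g_neg (a hypothetical scheme here).
    v_in[i]: voltage applied to row i.
    Returns the net current collected on each column.
    """
    n_rows, n_cols = len(g_pos), len(g_pos[0])
    if len(v_in) != n_rows:
        raise ValueError("one input voltage per row is required")
    currents = []
    for j in range(n_cols):
        # Ohm's law per cell (I = G * V); Kirchhoff's current law sums the cell currents on the column.
        i_pos = sum(g_pos[i][j] * v_in[i] for i in range(n_rows))
        i_neg = sum(g_neg[i][j] * v_in[i] for i in range(n_rows))
        currents.append(i_pos - i_neg)
    return currents
```

With conductances in microsiemens and inputs in volts, `crossbar_mvm([[2.0, 0.0], [1.0, 3.0]], [[0.0, 1.0], [0.0, 0.0]], [1.0, 2.0])` returns `[4.0, 5.0]` microamps. In this model the whole matrix-vector product comes out of a single analog read, and no weight ever travels to a separate processor.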

But it comes at a cost. “The highly-parallel analogue computation within RRAM-CIM architecture brings superior efficiency, but makes it challenging to realize the same level of functional flexibility and computational accuracy as in digital circuits,” said the authors.

Optimization Genie

The new study delved into every part of an RRAM-CIM chip, redesigning it for practical use.

It starts with technology. NeuRRAM boasts 48 cores that compute in parallel, with RRAM devices physically interwoven with CMOS circuits. Like a neuron, each core can be individually turned off when not in use, saving energy while its data remains stored in the RRAM.

These RRAM cells, all three million of them, are linked so that data can flow in both directions. It’s a crucial design choice, allowing the chip to flexibly adapt to different types of AI algorithms, the authors explained. For example, one type of deep neural net, the convolutional neural network (CNN), is particularly good at computer vision but needs data to flow in a single direction. In contrast, LSTMs (long short-term memory networks), a type of deep neural net often used for audio recognition, process data recurrently to match signals over time. Like synapses, the chip encodes how strongly one RRAM “neuron” connects to another.
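A hedged sketch of what bidirectional data flow can buy: if a weight array can be driven from its rows or from its columns, a single stored copy of the weights serves both a matrix and its transpose. The class `BidirectionalArray` and its `forward`/`backward` methods are hypothetical names for illustration; they model ideal arithmetic, not NeuRRAM’s actual circuits.

```python
from typing import List

Matrix = List[List[float]]


class BidirectionalArray:
    """Hypothetical model of a weight array that can be read in either direction."""

    def __init__(self, weights: Matrix):
        # weights[i][j] is the cell at row i, column j; the array is stored only once.
        self.w = weights
        self.rows = len(weights)
        self.cols = len(weights[0])

    def forward(self, x: List[float]) -> List[float]:
        # Drive the rows, sense the columns: y[j] = sum_i w[i][j] * x[i].
        return [sum(self.w[i][j] * x[i] for i in range(self.rows)) for j in range(self.cols)]

    def backward(self, y: List[float]) -> List[float]:
        # Drive the columns, sense the rows: x[i] = sum_j w[i][j] * y[j] (the transpose direction).
        return [sum(self.w[i][j] * y[j] for j in range(self.cols)) for i in range(self.rows)]
```

For weights `[[1, 2, 3], [4, 5, 6]]`, `forward([1, 1])` gives `[5, 7, 9]` and `backward([1, 0, 1])` gives `[4, 10]`, both computed from the same stored array. A one-direction-only array would need a second, transposed copy of the weights to do the same.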

This architecture made it possible to fine-tune data flow to minimize traffic jams. Like expanding a single-lane road to multiple lanes, the chip could duplicate a network’s stored “memory” for the most computationally intensive parts of a problem, so that multiple cores work on them simultaneously.
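The lane-widening analogy can be sketched as a toy scheduling model, assuming the duplicated “memory” is a layer’s weight matrix and that the layer’s input vectors (for example, the image patches a convolution slides over) can be processed independently. The function `run_replicated` is hypothetical: it runs the cores one after another in Python and simply counts how many rounds a truly parallel chip would need.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Vector = List[float]


def run_replicated(
    layer: Callable[[Vector], Vector],
    inputs: Sequence[Vector],
    n_cores: int,
) -> Tuple[List[Vector], int]:
    """Spread inputs over n_cores cores that each hold an identical copy of the layer's weights.

    Returns the outputs (in original input order) and the number of rounds
    the cores would need if they all ran in parallel.
    """
    if n_cores < 1:
        raise ValueError("need at least one core")
    # Round-robin assignment: core c handles inputs c, c + n_cores, c + 2 * n_cores, ...
    assignments = [list(range(c, len(inputs), n_cores)) for c in range(n_cores)]
    outputs: List[Optional[Vector]] = [None] * len(inputs)
    for core_inputs in assignments:
        for idx in core_inputs:
            # Every core applies the same weights, so results match a single-core run.
            outputs[idx] = layer(inputs[idx])
    # On real hardware the cores work simultaneously, so the busiest core sets the latency.
    rounds = max(len(a) for a in assignments)
    return [out for out in outputs if out is not None], rounds
```

With eight inputs, one core needs 8 rounds while four replicated cores need 2, and both runs produce identical outputs. The cost of the speedup is storing the same weights several times, which is why, in this sketch, only the heaviest layers would be worth duplicating.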

A final touch-up to previous CIM chips was a stronger bridge between brain-like computation, which is often analogue, and digital processing. Here, the chip uses a neuron circuit that can readily convert the results of analogue computation into digital signals. It’s a step up from previous “power-hungry and area-hungry” setups, the authors explained.
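As a rough illustration of the analogue-to-digital step, here is an idealized uniform quantizer that maps an analogue reading onto an n-bit code and saturates out-of-range inputs. This is a generic converter model, not NeuRRAM’s neuron circuit, whose design differs; the function name `quantize` and its parameters are assumptions for illustration.

```python
def quantize(analog: float, v_min: float, v_max: float, bits: int) -> int:
    """Idealized uniform ADC: map an analog value in [v_min, v_max] to an unsigned n-bit code."""
    if bits < 1 or v_max <= v_min:
        raise ValueError("need bits >= 1 and v_max > v_min")
    top_code = 2 ** bits - 1  # e.g. 15 for a 4-bit converter
    # Saturate readings that fall outside the converter's input range.
    clipped = min(max(analog, v_min), v_max)
    # Scale to [0, top_code] and round to the nearest code level.
    return round((clipped - v_min) / (v_max - v_min) * top_code)
```

For a 4-bit converter spanning 0 to 1 volt, `quantize(0.2, 0.0, 1.0, 4)` returns `3`, and anything at or above 1 volt saturates at `15`. More bits give finer resolution but generally cost more area and energy per conversion in real hardware, which is the trade-off behind making this conversion step cheap.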

The optimizations paid off. Putting their theory to the test, the team manufactured the NeuRRAM chip and developed algorithms to program the hardware for different AI models, much like a PlayStation 5 running different games.

In a multitude of benchmark tests, the chip performed like a champ. Running a seven-layer CNN on the chip, NeuRRAM had less than a one percent error rate at recognizing hand-written digits using the popular MNIST database.

It also excelled at more difficult tasks. Running another popular deep neural net, an LSTM, the chip was roughly 85 percent accurate on Google speech command recognition. Using just eight cores and running yet another AI architecture, the chip was able to recover noisy images, reducing errors by roughly 70 percent.

So What?

One word: energy.

Most AI algorithms are total energy hogs. NeuRRAM operated at half the energy cost of previous state-of-the-art RRAM-CIM chips, further translating the promise of energy savings with neuromorphic computing into reality.

But the study’s standout is its strategy. Too often when designing chips, scientists need to balance efficiency, versatility, and accuracy for multiple tasks—metrics that are often at odds with one another. The problem gets even tougher when all the computing is done directly on the hardware. NeuRRAM showed that it’s possible to battle all beasts at once.

The same strategy could also be used to optimize other neuromorphic computing devices, such as phase-change memory technologies, the authors said.

For now, NeuRRAM is a proof of concept, showing that a physical chip, rather than a simulation of it, works as intended. But there’s room for improvement, including further scaling the RRAM devices and shrinking the chip so that it could one day fit into our phones.

“Maybe today it is used to do simple AI tasks such as keyword spotting or human detection, but tomorrow it could enable a whole different user experience. Imagine real-time video analytics combined with speech recognition all within a tiny device,” said study author Dr. Weier Wan. “As a researcher and an engineer, my ambition is to bring research innovations from labs into practical use.”

Image Credit: David Baillot/University of California San Diego