This Ant-Inspired AI Brain Helps Farm Robots Better Navigate Crops

Picture this: the setting sun paints a cornfield in dazzling hues of amber and gold. Thousands of corn stalks, heavy with cobs and rustling leaves, tower over everyone: kids running through corn mazes, farmers examining their crops, and robots whizzing by as they gently pluck ripe, sweet ears for the fall harvest.

Wait, robots?

Idyllic farmlands and robots may seem a strange couple. But thanks to increasingly sophisticated software allowing robots to “see” their surroundings—a technology called computer vision—they’re rapidly integrating into our food production mainline. Robots are now performing everyday chores, such as harvesting ripe fruits or destroying crop-withering weeds.

With an ongoing shortage in farmworkers, the hope is that machines could help boost crop harvests, reliably bring fresh fruits and veggies to our dinner tables, and minimize waste.

To fulfill the vision, robot farmworkers need to be able to traverse complex and confusing farmlands. Unfortunately, these machines aren’t the best navigators. They tend to get lost, especially in difficult terrain. Like kids struggling in a corn maze, robots lose track of their location so often that the problem has a name: the kidnapped robot problem.

A new study in Science Robotics aims to boost navigational skills in robots by giving them memory.

The work was led by Dr. Barbara Webb at the University of Edinburgh, and its inspiration came from a surprising source: ants. These critters are remarkably good at navigating to desired destinations after just one trip. Like seasoned hikers, they also remember familiar locations, even when moving through heavy vegetation along the way.

Using images collected from a roaming robot, the team developed an algorithm based on brain processes in ants during navigation. When it was run on hardware also mimicking the brain’s computations, the new method triumphed over a state-of-the-art computer vision system in navigation tasks.

“Insect brains in particular provide a powerful combination of efficiency and effectiveness,” said the team.

Solving the problem doesn’t just give wayward robotic farmhands an internal compass to help them get home. Tapping into the brain’s computation—a method called neuromorphic computing—could further finesse how robots, such as self-driving cars, interact with our world.

An Ant’s Life

If you’ve ever wandered around dense woods or corn mazes, you’ve probably asked your friends: Where are we?

Unlike walking along a city block—with storefronts and other buildings as landmarks—navigating a crop field is extremely difficult. A main reason is that it’s hard to tell where you are and what direction you’re facing because the surrounding environment looks so similar.

Robots face the same challenge in the wild. Currently, vision systems use multiple cameras to capture images as the robot traverses terrain, but they struggle to identify the same scene if lighting or weather conditions change. The algorithms are slow to adapt, making it difficult to guide autonomous robots in complex environments.

Here’s where ants come in.

Even with relatively limited brain resources compared to humans, ants are remarkably brilliant at learning and navigating complex new environments. They easily remember previous routes regardless of weather, mud, or lighting.

They can follow a route with “higher precision than GPS would allow for a robot,” said the team.

One quirk of an ant’s navigational prowess is that it doesn’t need to know exactly where it is during navigation. Rather, to find its target, the critter only needs to recognize whether a place is familiar.

It’s like exploring a new town from a hotel: you don’t necessarily need to know where you are on the map. You just need to remember the road to get to a café for breakfast so you can maneuver your way back home.

Using ant brains as inspiration, the team built a neuromorphic robot in three steps.

The first was software. Despite having small brains, ants are especially adept at fine-tuning their neural circuits for revisiting a familiar route. Based on their previous findings, the team homed in on “mushroom bodies,” a type of neural hub in ant brains. These hubs are critical for learning visual information from surroundings. The information then spreads across the ant’s brain to inform navigational decisions. For example, does this route look familiar, or should I try another lane?
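The familiarity idea can be illustrated with a toy memory loosely modeled on a mushroom body. This is a minimal sketch under stated assumptions, not the team's spiking network: the class name `FamiliarityMemory`, the random wiring, the top-k sparsening, and the "switch off the outputs of active cells" learning rule are all simplifications chosen for illustration.

```python
import random


class FamiliarityMemory:
    """Toy mushroom-body-style memory (hypothetical, for illustration only)."""

    def __init__(self, n_inputs, n_cells=200, fan_in=5, n_active=10, seed=0):
        rng = random.Random(seed)
        # Each memory cell listens to a small random subset of the visual input.
        self.connections = [rng.sample(range(n_inputs), fan_in)
                            for _ in range(n_cells)]
        # Every cell starts with a full-strength output connection.
        self.output_weights = [1.0] * n_cells
        self.n_active = n_active

    def _active_cells(self, view):
        # Sparse code: only the most strongly driven cells respond to a view.
        drive = [sum(view[i] for i in conn) for conn in self.connections]
        ranked = sorted(range(len(drive)), key=lambda j: drive[j], reverse=True)
        return ranked[:self.n_active]

    def learn(self, view):
        # Learning silences the outputs of the cells that this view activates.
        for j in self._active_cells(view):
            self.output_weights[j] = 0.0

    def novelty(self, view):
        # 1.0 means "never seen anything like this"; 0.0 means "familiar".
        active = self._active_cells(view)
        return sum(self.output_weights[j] for j in active) / self.n_active


rng = random.Random(1)
route_view = [rng.random() for _ in range(50)]  # stand-in for one camera view
memory = FamiliarityMemory(n_inputs=50)

before = memory.novelty(route_view)  # nothing learned yet
memory.learn(route_view)
after = memory.novelty(route_view)   # the same view is now familiar
print(before, after)
```

The memory never stores a position on a map. It only answers whether a view has been seen before, which is the question the article says an ant needs answered.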

Next came event cameras, which capture images like an animal’s eye might. Rather than recording full frames at fixed intervals, each pixel reports only changes in brightness. The resulting data are especially useful for computer vision because they mimic how the eye responds to changing light.
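To make the idea of an event concrete, here is a rough sketch that approximates event-camera output by comparing two ordinary grayscale frames. Real event cameras work per pixel and asynchronously in hardware, so this is only an approximation. The function name `frames_to_events`, the log-brightness comparison, and the threshold value are illustrative assumptions rather than details from the study.

```python
import math


def frames_to_events(prev_frame, curr_frame, threshold=0.2):
    """Return (row, col, polarity) for pixels whose log brightness changed
    by more than `threshold` between two frames (hypothetical helper)."""
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for c, (p, q) in enumerate(zip(prev_row, curr_row)):
            # Compare log brightness; +1.0 avoids log(0) for black pixels.
            delta = math.log(q + 1.0) - math.log(p + 1.0)
            if delta > threshold:
                events.append((r, c, +1))   # pixel got brighter
            elif delta < -threshold:
                events.append((r, c, -1))   # pixel got darker
    return events


prev = [[10, 10, 10],
        [10, 10, 10]]
curr = [[10, 40, 10],
        [10, 10, 2]]

events = frames_to_events(prev, curr)
print(events)  # [(0, 1, 1), (1, 2, -1)]
```

Only two of the six pixels produce output here, which hints at why event data can be cheaper to process than full frames: unchanged parts of the scene generate nothing.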

The last component is the hardware: SpiNNaker, a computer chip built to mimic brain functions. First engineered at the University of Manchester in the UK, the chip simulates the internal workings of biological neural networks to encode memory.

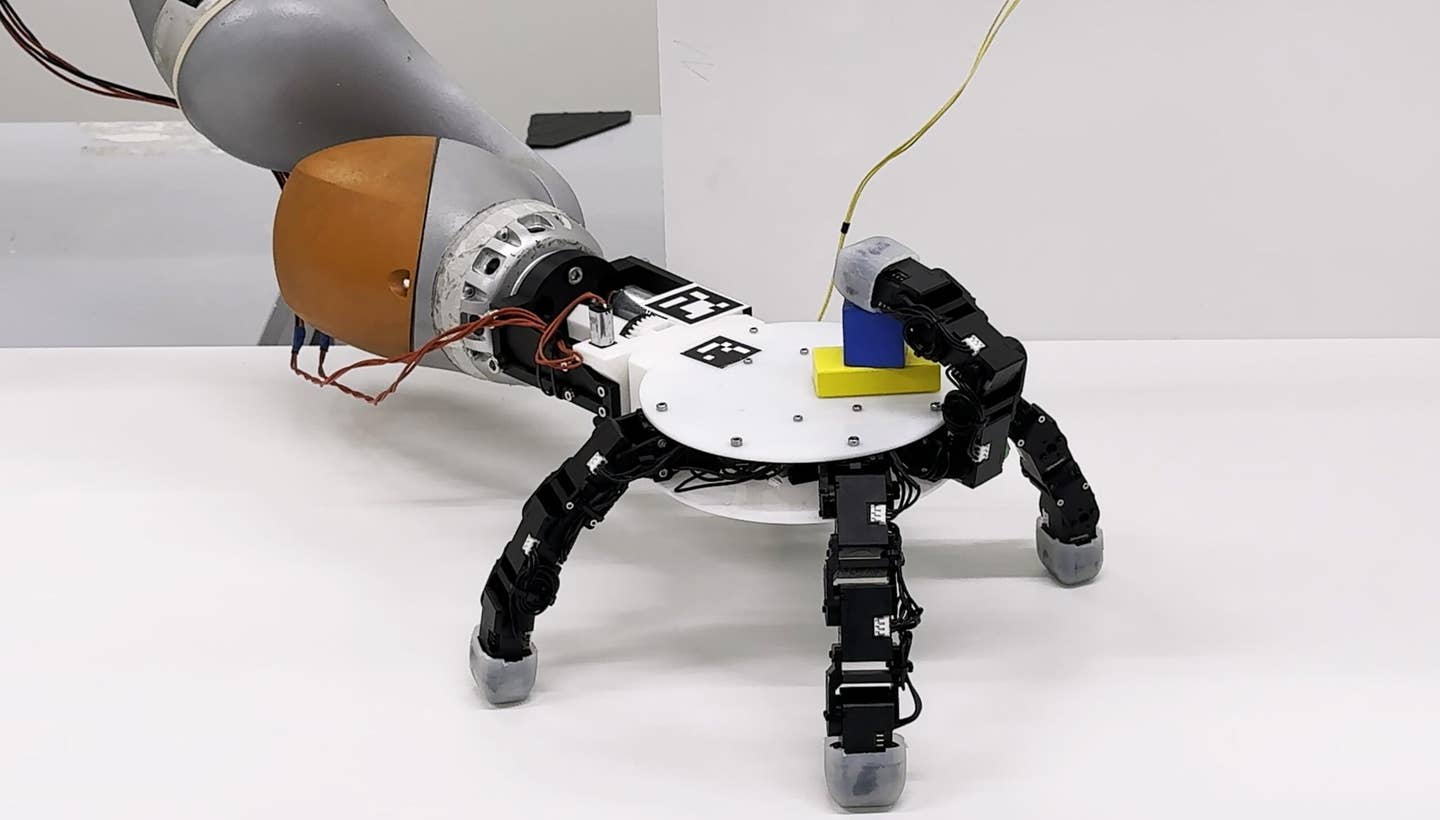

Weaving all three components together, the team built their ant-like system. As a proof of concept, they used the system to power a mobile robot as it navigated difficult terrain. The robot, roughly the size of an extra-large hamburger and aptly named the TurtleBot3 Burger, captured images with the event camera as it went on its hike.

As the robot rolled through forested lands, its neuromorphic “brain” rapidly reported “events” using pixels of its surroundings. The algorithm triggered a warning event, for example, if branches or leaves obscured the robot’s vision.

The little bot traversed roughly 20 feet in vegetation of various heights and learned from its treks. This range is typical for an ant navigating its route, said the team. In multiple tests, the AI model broke down data from the trip for more efficient analysis. When the team changed the route, the AI responded with apparent confusion (“wait, was this here before?”), showing that it had learned the usual route.

In contrast, a popular algorithm struggled to recognize the same route. The software could only follow a route if it saw the exact same video recording. In other words, unlike the ant-inspired algorithm, it couldn’t generalize.

A More Efficient Robot Brain

AI models are notoriously energy-hungry. Neuromorphic systems could slash their gluttony.

SpiNNaker, the hardware behind the system, puts the algorithm on an energy diet. Based on the brain’s neural network structures, the chip supports massively parallel computing, meaning that multiple computations can occur at the same time. This setup doesn’t just decrease data processing lag, but also boosts efficiency.

In this setup, each chip contains 18 cores, simulating roughly 250 neurons. Each core has its own instructions on data processing and stores memory accordingly. This kind of distributed computing is especially important when it comes to processing real-time feedback, such as maneuvering robots in difficult terrain.

As a next step, the team is digging deeper into ant brain circuits. Exploring neural connections between different brain regions and groups could further boost a robot’s efficiency. In the end, the team hopes to build robots that interact with the world with as much complexity as an ant.

Image Credit: Faris Mohammed / Unsplash

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.
