Google Adds Kinect-Like 3D Sensing to New Prototype Smartphones


How does a search firm keep its brand fresh and relevant? Not by talking about search. Google has mastered the art of baiting the hook with exciting moonshot projects, and whether or not they pan out, the firm remains one of the hottest tech stories out there.

The latest juicy tidbit? Project Tango. Though Google recently sold off Motorola, it kept a healthy portfolio of patents and Motorola’s innovation lab, ATAP (Advanced Technology and Projects). (Check out our coverage of ATAP’s open-source, modular smartphone, Project Ara.)

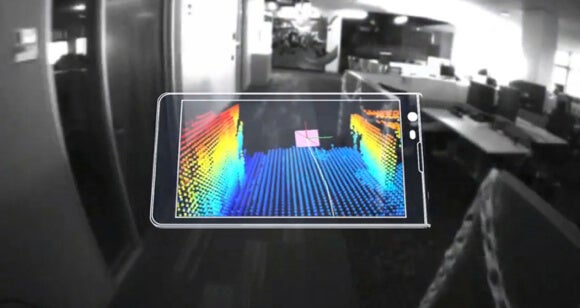

ATAP's latest smartphone project, Project Tango, is a prototype device that doesn’t just know where it is; it knows where it is in context. Using Kinect-like sensors and processors, the phone can construct a detailed 3D model of its immediate surroundings.

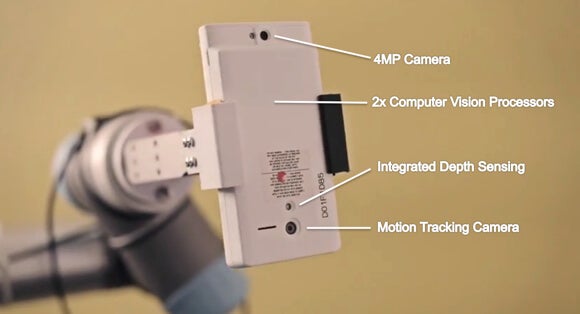

The device is a slightly chunky 5-inch Android smartphone with all the typical sensors (GPS, gyroscope, accelerometer, 4-megapixel camera) plus a few extras, such as depth-sensing and motion-sensing cameras. The phone uses two Movidius computer vision processors to stitch together a quarter million 3D measurements per second into a single 3D model of the space around it.

Folks might use such devices to map their houses. Instead of measuring every dimension before visiting the furniture store, they could pull up a website or app that places furniture in a virtual model of their living room, both in color and to scale.

Other potential applications include indoor navigation of unfamiliar buildings, augmented reality gaming, and a virtual cane for the blind that buzzes or speaks directions as obstacles approach. The tech could also serve as a 3D scanner for 3D printing, or why not build it into a drone or telepresence robot to aid autonomous navigation?

We imagine drones flying through trees in a forest (instead of bumping into them), or telepresence robots picking their way through a crowded convention hall. Some robots can already do this, but perhaps Tango would sharpen their abilities.

Truth is, no one knows what applications might grow out of Project Tango. To find out, Google is offering developers 200 Project Tango prototypes along with an API for writing apps.

Project lead Johnny Lee worked on Kinect at Microsoft and has been at Google since 2011. Lee says, “We want partners who will push the technology forward and build great user experiences on top of this platform.”


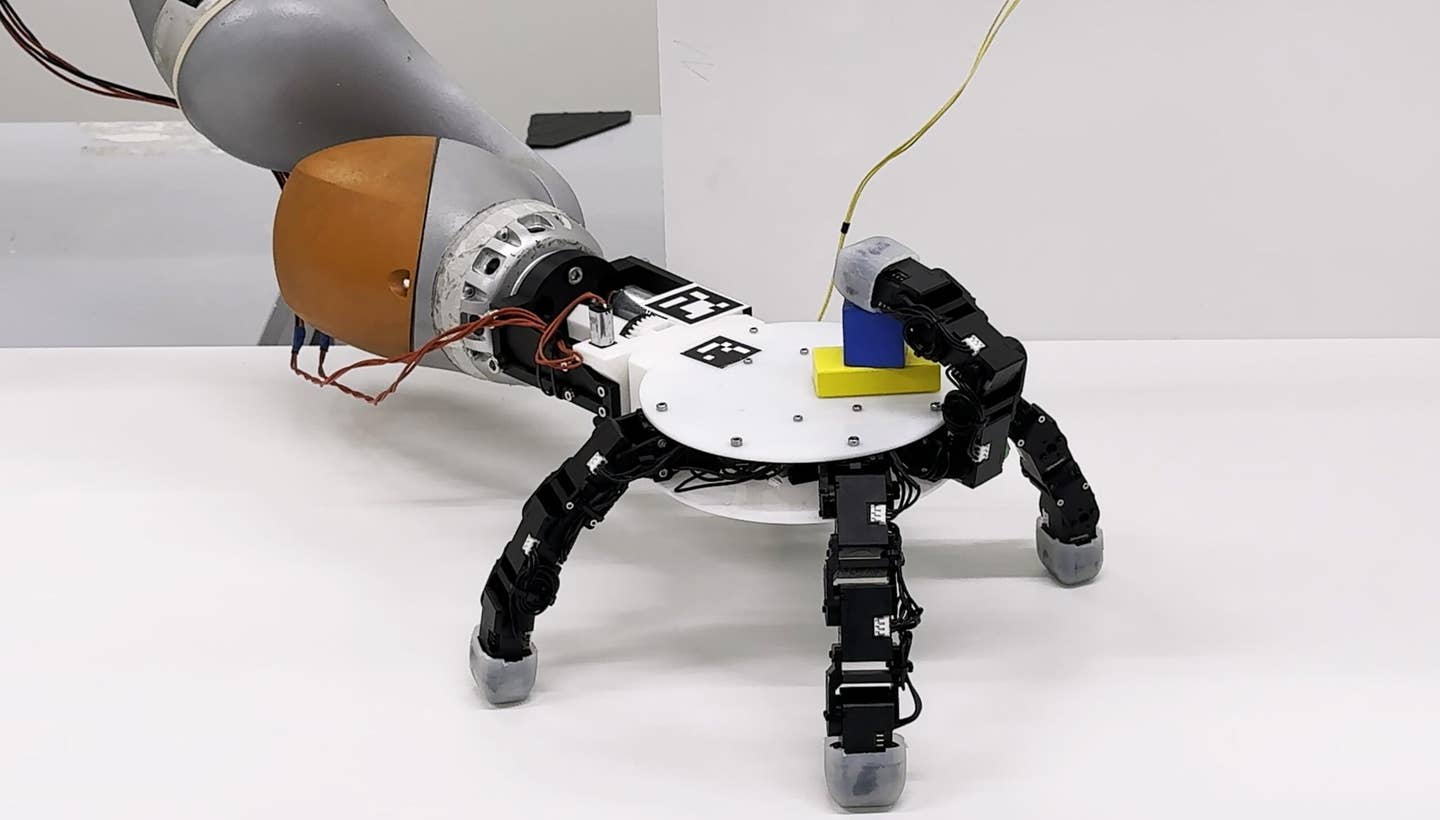

The tech would be cool in smartphones, and maybe even cooler linked up to a virtual or augmented reality interface like Glass or Oculus Rift. But similar tech is already being used in robotics, and that may be where it proves most powerful.

Computer vision, combined with rudimentary machine learning algorithms, is already giving robots more autonomy.

If Project Tango further miniaturizes computer vision sensors and makes computer vision processors more efficient, we could see improvements crossing over into the firm’s new robotics division. Indeed, one of Google's recent robotics acquisitions, Industrial Perception, already integrates similar tech into robotic arms.

It's also worth mentioning that others are working to give devices 3D awareness. Last year, for example, we wrote about Occipital's Structure sensor. Structure is like Project Tango, except that its hardware isn't integrated into the device itself.

Structure's infrared LEDs and camera are housed in an aluminum case that clips onto an iPad. In addition to its own sensors, Structure uses the iPad's camera, accelerometer, and gyroscope to create a 3D model of its environment at distances from 40 cm to 3.5 meters.

Project Tango's full integration would be amazing, but for now it adds heft to the phone, and the computer vision processors hog battery power. Occipital's device, meanwhile, runs on its own four-hour battery and can be attached or detached at will.

Of course, Google has the resources and talent to further develop the technology, and if they're successful, expect it to appear in a widening range of products and applications.

Image Credit: Google, ATAP/YouTube (middle image labels added by Singularity Hub)

Jason is editorial director at SingularityHub. He researched and wrote about finance and economics before moving on to science and technology. He's curious about pretty much everything, but especially loves learning about and sharing big ideas and advances in artificial intelligence, computing, robotics, biotech, neuroscience, and space.
