I recently attended the MIT Technology Review Digital Summit in San Francisco. The topics du jour? The disappearing computer interface, the Internet of Things, and security and privacy in our hyperconnected world.

The overriding sense was that we’re taking the next step in the evolution of computing. Over several decades, computers have progressed from feeble room-sized counting machines to desktop computers, laptops, smartphones, and tablets.

The trend? Smaller, cheaper, more powerful, and more distributed. As Jason Pontin, editor in chief and publisher of MIT Technology Review, noted, “Computing is disappearing into the things around us. Computing is becoming as invisible and ubiquitous as electricity.”

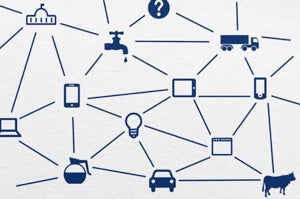

The Internet of Things is the digitization of everyday items and their connection to the existing network of devices. These new “things” are, of course, a diverse group including appliances, buildings, infrastructure, environmental features, and so on.

According to Cisco, some 50 billion “things” will come online by 2020. Gartner puts it at 26 billion. Clearly, no one knows for sure, but most think it will be a big number. And the prospect of such wide-ranging connectivity has technologists excited.

Peter Lee, who heads up Microsoft Research, said it’s the most exhilarating time in basic research since 1985. Advances in connectivity and computing combined with breakthroughs in machine learning and artificial intelligence are resulting in adaptive, anticipatory systems distributed throughout our environment.

Lee thinks that as computing becomes ubiquitous and connected, “the idea of operating a computer starts to fade away.” Digital access will expand from a few select portals to huge swathes of the physical world. We won’t run apps; apps will run themselves, anticipating our needs and responding to voice or gesture.

Tim Tuttle, CEO and founder of Expect Labs, said that in the last 18 months voice recognition accuracy improved 30%, a bigger gain than in the entire previous decade. A third of searches are now being done using voice commands.

Voice recognition uses machine learning algorithms that depend on people actually using them to get better. Tuttle believes we’re at the beginning of a virtuous cycle wherein wider adoption is yielding more data; more data translates into better performance; better performance results in wider adoption, more data, and so on.

As we rely less on traditional devices, what will take their place? It will be some combination of devices embedded in our environment and worn on our bodies. (Though the extinction of the terminal won’t likely happen for a while.)

Wearable Tech

At this point, of course, you’ve heard and read about wearables (or own a device yourself). They’ve been receiving more and more attention over the last few years.

Google Glass, the miniature computer and display worn like a pair of glasses, is perhaps the most well-known wearable device today. Georgia Tech’s Thad Starner, technical lead on Glass, has been wearing a heads-up display for over two decades.

Starner said that while attending MIT he could either pay close attention or take notes, but not both at the same time. (I was too busy typing away, Glassless, to recognize the irony.) You or I might wonder whether Glass will worsen already endemic technological distraction, but Starner said it’s supposed to do the opposite.

The idea, he said, is to reduce the time it takes to repetitively check little things like time, appointments, email, search, and maps. For example, Starner said, it takes 23 seconds on average to pull out your phone and fire up an app. With Glass, the time is just two seconds. These “microinteractions” will equate to less time staring at our phones and more time getting reacquainted with the world around us. (More on Glass, privacy, and security later.)

Other wearables include fitness trackers, those wristbands with sensors that track basic activity data like steps taken and calories burned and give users insights into their daily habits and ways to make positive changes.

Early experience has shown many of these devices to be less than accurate and easily abandoned by users. But according to Misfit cofounder Sonny Vu, it’s early days yet. Calling the wearables space crowded is like saying the internet was crowded in 1997.

First-generation wearables are plastic, bulky, and too reliant on regular charging, he said. Worse, they don’t do a lot. Version 2.0 will incorporate materials like metal, leather, and ceramic, and to encourage continuous use, they’ll hold a charge for months.

They also need to be more compelling. How will we know when we’ve hit the mark? Vu proposed the turnaround test: the tech should be so useful that if you forget it on the way to work or school, you turn around to pick it up. (Or, I think, if it’s so comfortable it becomes part of your body, the turnaround test is irrelevant.)

Besides a convenient display (heads-up or smartwatch), wearables like Vu’s Misfit Shine and other mobile devices measure useful information, like the body’s vital signs, relay the information to analytical software in the cloud, and communicate insights to people on their smartphones.

AliveCor chief medical officer Dr. David Albert, for example, explained that his company’s heart monitor is a $200 FDA-cleared medical device that delivers an ECG on par with top-of-the-line $10,000 devices, using naught but a smartphone. Albert said cardiologist Dr. Eric Topol used the device to diagnose a heart attack on a plane.

The AliveCor heart monitor isn’t wearable yet—but future wearables may be as powerful and find their best application similarly measuring a wide array of key health data.

Venture capitalist and founder of Khosla Ventures, Vinod Khosla, said he has been involved in wearables and mobile medical devices. He’s convinced that, beyond simple measurement of our bodies, we’ll also turn analysis over to machines.

Khosla said that in one study, a group of cardiologists was asked to look at patient data and decide whether the patient should have heart surgery. Opinion was split down the middle. Two years later, the same data was given to the same cardiologists and 40% changed their minds. Khosla thinks machines are better at the cognitive parts of medicine, like diagnosis and prescription. Humans aren’t equipped for big data.

The Internet of Things

Companies like Intel and Freescale are developing tiny chips that are both low-power and inexpensive. They don’t need to do much, just measure a few key statistics and communicate with a more powerful computer somewhere else.

The idea is to chip everything, measure it, and more efficiently and automatically control our homes and cities. Some chips will only collect and communicate data, like stress on key infrastructural components (e.g., a bridge strut or load bearing pillar). Others will collect data and control a system (e.g., your lights, locks, or car).

Pontin noted chipped diapers (yes, to measure local levels of pollution), connected sewers in New York City, even bees saddled with sensors. But an increasingly cited example is right out of the Jetsons or World’s Fair—the automated home.

Qualcomm’s Liat Ben-Zur sketched a vision of the future when all the devices and systems in your home talk to each other, coordinating their functions. The lights come on when you walk in the door; thermostats adjust to personal preference; or maybe all these things will be tied together to respond to a specific scenario.

Ben-Zur gave the example of a home emergency. The smoke alarm goes off—which turns on the TV to message key information and makes the lights flash red to alert the hearing impaired. The hardware and software behind all these devices might be designed by different firms—including the developer behind the emergency application.

Qualcomm’s AllJoyn hopes to be the open operating system behind it all.

Compare AllJoyn to Android. Smartphones have a variety of sensors and software, like we’d expect in an automated home. They all play nice and combine capabilities through the operating system. Give an API to developers, and they can run wild, tying the system together in different ways to create unexpected experiences.
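To make the idea concrete, here is a minimal sketch of how a shared layer might let a third-party developer wire Ben-Zur’s emergency scenario together. It is a toy, in-process publish/subscribe hub, not AllJoyn’s actual API; the names `HomeBus`, `build_emergency_app`, and the `smoke_alarm` event are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class HomeBus:
    """Toy publish/subscribe hub (hypothetical; not the AllJoyn API)."""

    def __init__(self) -> None:
        # Maps an event name to the handlers registered for it.
        self._handlers: DefaultDict[str, List[Callable[[dict], None]]] = defaultdict(list)
        # Records what each simulated device did, in order.
        self.log: List[str] = []

    def subscribe(self, event: str, handler: Callable[[dict], None]) -> None:
        self._handlers[event].append(handler)

    def publish(self, event: str, payload: dict) -> None:
        # Deliver the event to every subscriber, in registration order.
        for handler in self._handlers[event]:
            handler(payload)


def build_emergency_app(bus: HomeBus) -> None:
    """A third-party 'app' that ties devices from different makers together."""

    def tv_alert(payload: dict) -> None:
        bus.log.append(f"TV on: smoke detected in {payload['room']}")

    def lights_alert(payload: dict) -> None:
        bus.log.append("Lights: flashing red")

    bus.subscribe("smoke_alarm", tv_alert)
    bus.subscribe("smoke_alarm", lights_alert)


bus = HomeBus()
build_emergency_app(bus)
bus.publish("smoke_alarm", {"room": "kitchen"})  # the smoke alarm fires
print(bus.log)
```

The smoke alarm only announces an event; it knows nothing about the TV or the lights. That separation is what would let a developer add new reactions later without touching any of the devices.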

Of her emergency app example, Ben-Zur said, “I think that example is boring. I think it’s boring because we thought of it.”

The idea that the automated home, and in fact the Internet of Things in general, needs to be better unified was echoed by other speakers.

Currently, those Hue light bulbs you bought at the store? That Apple TV or Nest thermostat? They all have separate apps. They use different wireless protocols to communicate with the network. Their operation is splintered.

As Mike Soucie, cofounder of Revolv (another firm attempting to fuse disparate automated home hardware and systems), put it: if you’re not a tech enthusiast, the inconvenience of these supposedly convenience-enhancing gadgets will lead you to throw up your hands and ask, “Why bother?”

Privacy, Security, and a Future Without Ads

In his presentation on the upcoming Blackphone, Silent Circle cofounder Phil Zimmermann said, “If you’re not paying for the product, then you are the product.” In the age of NSA spying and networked devices collecting hoards of information (search, emails, images, videos, location, travel history, health data), privacy is a touchy topic.

One of the prime benefits of a world bristling with sensors and chips is the insight more data may yield. But we pay a price—advertisers know and exploit intimate personal data, government agencies record online activity, hackers gain control of online accounts to spam email contacts or buy big ticket items on someone else’s dime.

Google Glass has garnered criticism from privacy advocates who worry it’s too easy for the device to record images and video without the knowledge or consent of the people being recorded. Starner defended his device, saying negative reports are largely overblown. The general reaction on the street is curiosity and enthusiasm.

Many writing about Glass haven’t tried it, he said. The team purposefully embedded social cues—spoken or touch commands and looking up at the screen—to telegraph when a Glass user is taking a picture or checking the time. And in his experience, a demo wins over even the harshest critics. Or at least eases their concern.

In an interesting contrast, Genevieve Bell, an anthropologist and Intel’s director of user experience, preceded Starner’s presentation. What’s an anthropologist doing at Intel? Bell says she’s the reality check for technologists who might be out of touch with the general public. If you love tech, your sense of what the market wants may not be 20/20.

Starner (my example, not hers) has been wearing heads-up displays since 1993. While he should defend his device (I’d expect nothing less), his views are colored by long experience. Glass is cool. But are negative perceptions more widespread than he realizes, and might the device need to change to move beyond technophiles? Maybe.

Phil Libin, CEO of Evernote, gave an entertaining interview in which he questioned the use of personal information to power ad-based business. As screen real estate shrinks in smartwatches and heads-up displays, like Glass, he thinks the amount of space we’ll willingly allocate to ads will fall almost to zero.

Libin said, “I think people will pay for things they love.” And the idea that we’re happy to give away data for targeted ads in return? “I just call bullshit on that.”

Ads are annoying, but not necessarily threatening.

Zimmermann’s Blackphone is due out a few weeks from now. It’s not impervious to a targeted attack but ought to better safeguard information against broad data gathering by governments and criminals. His team built their product from the ground up (hardware and software) to leak as little data as possible.

And what about the Internet of Things? Krisztian Flautner, VP of R&D at ARM, said adoption will require trust and trust will require strong security and transparency.

Flautner noted it’s getting easier to hack connected devices. Famously, a 2008 New York Times article said programmers had successfully hacked a wirelessly connected pacemaker. It took a sustained effort by a team of specialists working near the device. Flautner said last year MIT students hacked a pacemaker from 50 feet away.

A terrifying prospect for users of connected medical devices. But scale it up to a network of 25 or 50 billion connected devices, and it’s easy to imagine a physical world as buggy as a laptop at the turn of the century.

In Mat Honan’s recent exploration of a connected world gone awry, every appliance in his house is infected with malware, paralyzed by viral attacks, and directed to run amok by hackers. Honan’s musing ends with him longing to move to a quaint old house off the grid up in Humboldt County.

A Work in Progress

Will our increasingly high-tech lives devolve into a malfunctioning mess as Honan suggests? The vision he paints effectively portrays the challenges facing a hyperconnected future.

But that these risks are known and debated may prevent some from occurring as anticipated. Anything that can’t be remedied or simply doesn’t work will be discarded. The things that work beautifully will be adopted. There won’t be anything clean or organized about the process. It may well be messy and problematic in the short run.

And that’s perfectly normal.

In his talk, Khosla discussed how regulation, laws, and social norms are colliding with and informing the development of emerging technology. Debating the pros and cons is standard practice repeated through history and critical to innovation and progress.

Ultimately, we’ll stumble into the future as trial, error, and cultural upheaval steadily sharpen the latest technologies to better suit our needs. Khosla described it beautifully, “It’s an iterative process of coming to the right answers.”

Image Credit: Keoni Cabral/Flickr; Cisco/YouTube; University of Salford/Flickr; Dan Taylor/Flickr; Freescale; Shutterstock.com; nolifebeforecoffee (stencil by banksy)/Flickr; Lawrencegs/Flickr