The first time Dr. Blake Richards heard about deep learning, he was convinced that he wasn’t just looking at a technique that would revolutionize artificial intelligence. He also knew he was looking at something fundamental about the human brain.

That was the early 2000s, and Richards was taking a course with Dr. Geoff Hinton at the University of Toronto. Hinton, a pioneer architect of the algorithm that would later take the world by storm, was offering an introductory course on his learning method inspired by the human brain.

The key words here are “inspired by.” Despite Richards’ conviction, the odds were stacked against him. The human brain, as it happens, seems to lack a critical function that’s programmed into deep learning algorithms. On the surface, the algorithms were violating basic biological facts already proven by neuroscientists.

But what if, superficial differences aside, deep learning and the brain are actually compatible?

Now, in a new study published in eLife, Richards, working with DeepMind, proposes a new algorithm based on the biological structure of neurons in the neocortex. Also known as the cortex, this outermost region of the brain is home to higher cognitive functions such as reasoning, prediction, and flexible thought.

The team networked their artificial neurons together into a multi-layered network and challenged it with a classic computer vision task—identifying hand-written numbers.

The new algorithm performed well. But the kicker is that it analyzed the learning examples in a way that’s characteristic of deep learning algorithms, even though it was completely based on the brain’s fundamental biology.

“Deep learning is possible in a biological framework,” concludes the team.

Because the model is only a computer simulation at this point, Richards hopes to pass the baton to experimental neuroscientists, who could actively test whether the algorithm operates in an actual brain.

If so, the data could then be passed back to computer scientists to work out the next generation of massively parallel and low-energy algorithms to power our machines.

It’s a first step towards merging the two fields back into a “virtuous circle” of discovery and innovation.

The Blame Game

While you’ve probably heard of deep learning’s recent wins against humans in the game of Go, you might not know the nitty-gritty behind the algorithm’s operations.

In a nutshell, deep learning relies on an artificial neural network with virtual “neurons.” Like a towering skyscraper, the network is structured into hierarchies: lower-level neurons process aspects of an input—for example, a horizontal or vertical stroke that eventually forms the number four—whereas higher-level neurons extract more abstract aspects of the number four.

To teach the network, you give it examples of what you’re looking for. The signal propagates forward in the network (like climbing up a building), where each neuron works to fish out something fundamental about the number four.

Like children trying to learn a skill for the first time, the network doesn’t do so well initially. It spits out what it thinks a universal number four should look like (think a Picasso-esque rendition).

But here’s where the learning occurs: the algorithm compares the output with the ideal output, and computes the difference between the two (dubbed “error”). This error is then “backpropagated” throughout the entire network, telling each neuron: hey, this is how far off you were, so try adjusting your computation closer to the ideal.

Millions of examples and tweakings later, the network inches closer to the desired output and becomes highly proficient at the trained task.
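To make the loop above concrete, here is a minimal sketch of backprop in plain Python. It is an illustration under stated assumptions, not the setup used in the study: the toy task (XOR rather than hand-written digits), the network size, the learning rate, and every function name are made up for this example.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, W2):
    """Forward pass: input -> hidden layer -> single output neuron."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    output = sigmoid(sum(w * h for w, h in zip(W2, hidden)))
    return hidden, output

def loss(data, W1, W2):
    """Mean squared error between the network's output and the ideal output."""
    return sum(0.5 * (forward(x, W1, W2)[1] - t) ** 2 for x, t in data) / len(data)

def backprop(data, W1, W2):
    """Return the gradients of the loss with respect to W1 and W2."""
    gW1 = [[0.0] * len(row) for row in W1]
    gW2 = [0.0] * len(W2)
    for x, t in data:
        hidden, output = forward(x, W1, W2)
        # Error at the output neuron, scaled by the slope of the sigmoid.
        delta_out = (output - t) * output * (1.0 - output)
        for j, h in enumerate(hidden):
            gW2[j] += delta_out * h / len(data)
            # Blame sent back to hidden neuron j through the same weight W2[j].
            delta_hidden = delta_out * W2[j] * h * (1.0 - h)
            for i, xi in enumerate(x):
                gW1[j][i] += delta_hidden * xi / len(data)
    return gW1, gW2

def train(data, W1, W2, lr=0.5, steps=2000):
    """Repeatedly nudge every weight against its share of the blame."""
    for _ in range(steps):
        gW1, gW2 = backprop(data, W1, W2)
        W2 = [w - lr * g for w, g in zip(W2, gW2)]
        W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
    return W1, W2

# Toy data: XOR, with a constant 1.0 appended to each input as a bias term.
DATA = [((0.0, 0.0, 1.0), 0.0), ((0.0, 1.0, 1.0), 1.0),
        ((1.0, 0.0, 1.0), 1.0), ((1.0, 1.0, 1.0), 0.0)]

random.seed(0)
W1_init = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
W2_init = [random.uniform(-1, 1) for _ in range(3)]

loss_before = loss(DATA, W1_init, W2_init)
W1_trained, W2_trained = train(DATA, W1_init, W2_init)
loss_after = loss(DATA, W1_trained, W2_trained)
print("error before training:", loss_before)
print("error after training: ", loss_after)
```

Note the line that computes `delta_hidden`: each hidden neuron's blame depends on the output error and on the exact weight that carried its signal forward.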

This error signal is crucial for learning. Without efficient “backprop,” the network doesn’t know which of its neurons are off kilter. By assigning blame, the AI can better itself.

The brain does this too. How? We have no clue.

Biological No-Go

What’s clear, though, is that the deep learning solution doesn’t work in the brain.

Backprop is a pretty needy function. It requires very specific infrastructure to work as expected.

For one, each neuron in the network has to receive the error feedback. But in the brain, neurons are only connected to a few downstream partners (if that). For backprop to work in the brain, early-level neurons need to be able to receive information from billions of connections in their downstream circuits—a biological impossibility.

And while certain deep learning algorithms adopt a more local form of backprop (essentially between neurons), this requires the forward and backward connections between those neurons to be symmetric. This hardly ever occurs in the brain’s synapses.

More recent algorithms adopt a slightly different strategy, in that they implement a separate feedback pathway that helps the neurons to figure out errors locally. While it’s more biologically plausible, the brain doesn’t have a separate computational network dedicated to the blame game.
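The difference between the symmetric and the separate-pathway strategies comes down to which weights carry the error backwards. The sketch below is only an illustration: the article does not name the specific algorithms, and the function name and all numbers are made up. The single assumption is a sigmoid hidden layer feeding one output neuron.

```python
def hidden_blame(delta_out, hidden, feedback_weights):
    """Blame for each hidden neuron, given the error signal at the output."""
    return [delta_out * b * h * (1.0 - h)
            for b, h in zip(feedback_weights, hidden)]

forward_weights = [0.8, -0.3, 0.5]    # hidden -> output synapses (made-up values)
feedback_weights = [0.2, 0.6, -0.4]   # separate, fixed feedback pathway (made-up values)
hidden = [0.7, 0.2, 0.9]              # hidden-layer activity for one example
delta_out = 0.1                       # error signal at the output neuron

# Symmetric case: the error travels back through the very same weights
# that carried the signal forward, which is what backprop requires.
symmetric = hidden_blame(delta_out, hidden, forward_weights)

# Separate-pathway case: the error travels back through its own set of weights.
separate = hidden_blame(delta_out, hidden, feedback_weights)

print("blame via forward weights: ", symmetric)
print("blame via feedback pathway:", separate)
```

In the first call the feedback weights must mirror the forward ones exactly, which is the symmetry that real synapses rarely show. The second call drops that requirement at the cost of needing a dedicated feedback route.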

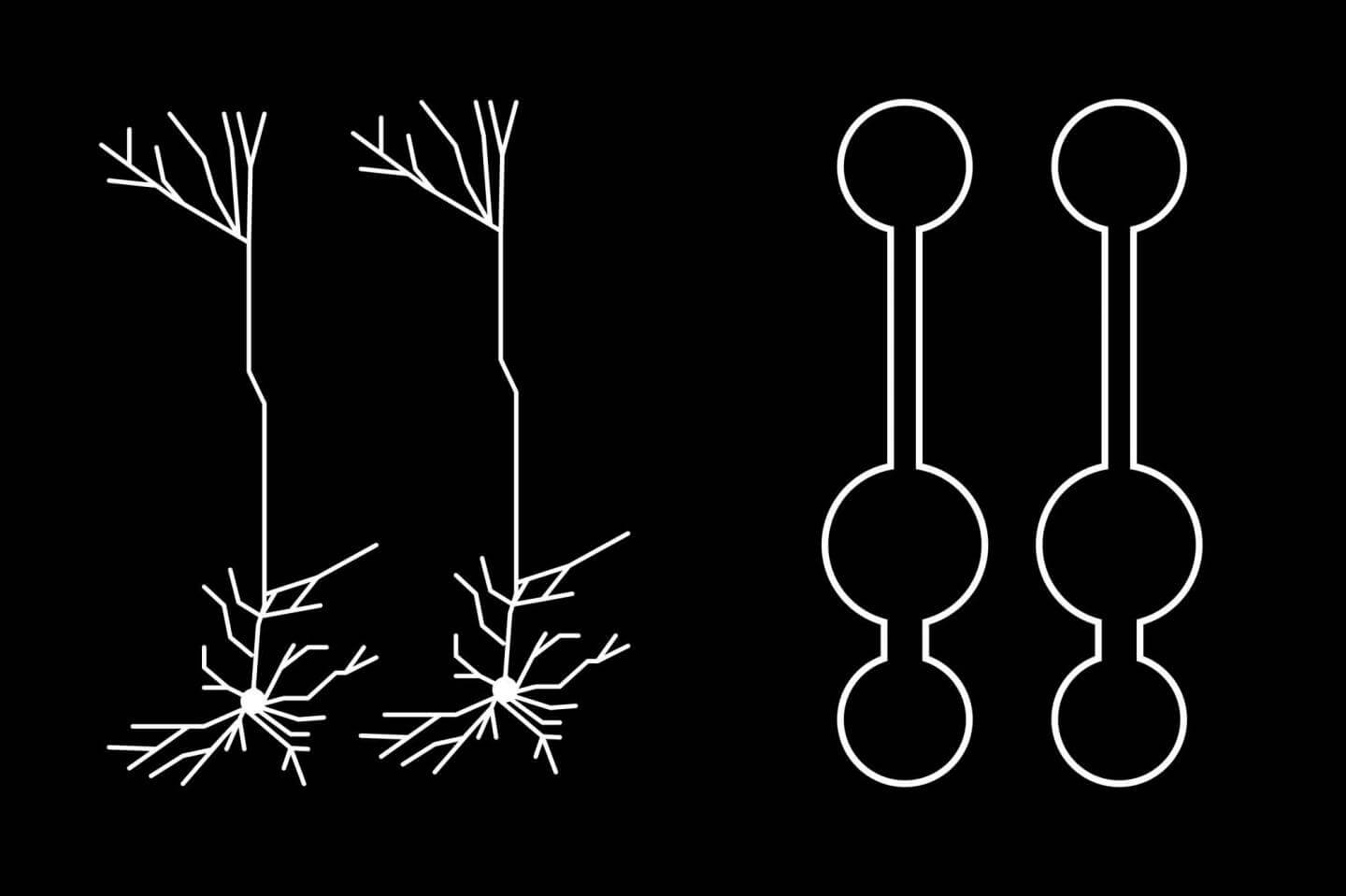

What it does have are neurons with intricate structures, unlike the uniform “balls” that are currently applied in deep learning.

Branching Networks

The team took inspiration from pyramidal cells that populate the human cortex.

“Most of these neurons are shaped like trees, with ‘roots’ deep in the brain and ‘branches’ close to the surface,” says Richards. “What’s interesting is that these roots receive a different set of inputs than the branches that are way up at the top of the tree.”

Curiously, the structure of neurons often turns out to be “just right” for efficiently cracking a computational problem. Take the processing of sensations: the bottoms of pyramidal neurons are right smack where they need to be to receive sensory input, whereas the tops are conveniently placed to transmit feedback errors.

Could this intricate structure be evolution’s solution to channeling the error signal?

The team set up a multi-layered neural network based on previous algorithms. But rather than having uniform neurons, they gave those in middle layers—sandwiched between the input and output—compartments, just like real neurons.

When trained with hand-written digits, the algorithm performed much better than a single-layered network, despite lacking a way to perform classical backprop. The cell-like structure itself was sufficient to assign error: the error signals at one end of the neuron are naturally kept separate from input at the other end.

Then, at the right moment, the neuron brings both sources of information together to find the best solution.
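A rough way to picture such a compartmentalized neuron is sketched below. This is a toy built on assumptions, not the equations from the study: the class name, the two-phase comparison of feedback with and without a teaching signal, the sigmoid functions, and all numbers are illustrative. "Basal" and "apical" are the standard anatomical names for a pyramidal cell's roots and top branches.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class TwoCompartmentNeuron:
    """Toy hidden-layer neuron with separate 'root' and 'branch' compartments."""

    def __init__(self, basal_weights, apical_weights):
        self.basal_weights = list(basal_weights)    # roots: bottom-up sensory input
        self.apical_weights = list(apical_weights)  # branches: top-down feedback

    def fire(self, inputs):
        """The neuron's output depends only on the basal compartment."""
        potential = sum(w * x for w, x in zip(self.basal_weights, inputs))
        return sigmoid(potential)

    def apical_signal(self, feedback):
        """Feedback is summed in the apical compartment, apart from the input."""
        return sigmoid(sum(w * f for w, f in zip(self.apical_weights, feedback)))

    def learn(self, inputs, feedback_forward, feedback_target, lr=0.5):
        """Bring both compartments together to update the input synapses."""
        rate = self.fire(inputs)
        # Local error: how the top-down signal changes once a teaching
        # signal is present, compared with the plain forward pass.
        local_error = (self.apical_signal(feedback_target)
                       - self.apical_signal(feedback_forward))
        slope = rate * (1.0 - rate)
        self.basal_weights = [w + lr * local_error * slope * x
                              for w, x in zip(self.basal_weights, inputs)]
        return local_error

neuron = TwoCompartmentNeuron(basal_weights=[0.1, -0.2], apical_weights=[1.0])
inputs = [1.0, 0.5]

rate_before = neuron.fire(inputs)
local_error = neuron.learn(inputs, feedback_forward=[0.0], feedback_target=[2.0])
rate_after = neuron.fire(inputs)
print("local error:", local_error)
print("firing before and after:", rate_before, rate_after)
```

Nothing in `learn` needs the weights of any other neuron: the error arrives at one compartment, the input at the other, and the update uses only what is available inside the cell.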

There’s some biological evidence for this: neuroscientists have long known that the neuron’s input branches perform local computations, which can be integrated with signals that propagate backwards from the so-called output branch.

However, we don’t yet know if this is the brain’s way of dealing blame—a question that Richards urges neuroscientists to test out.

What’s more, the network parsed the problem in a way eerily similar to traditional deep learning algorithms: it took advantage of its multi-layered structure to extract progressively more abstract “ideas” about each number.

“[This is] the hallmark of deep learning,” the authors explain.

The Deep Learning Brain

Without doubt, there will be more twists and turns to the story as computer scientists incorporate more biological details into AI algorithms.

One aspect that Richards and team are already eyeing is a top-down predictive function, in which signals from higher levels directly influence how lower levels respond to input.

Feedback from upper levels doesn’t just provide error signals; it could also be nudging lower processing neurons towards a “better” activity pattern in real-time, says Richards.

The network doesn’t yet outperform other non-biologically derived (but “brain-inspired”) deep networks. But that’s not the point.

“Deep learning has had a huge impact on AI, but, to date, its impact on neuroscience has been limited,” the authors say.

Now neuroscientists have a lead they could experimentally test: that the structure of neurons underlies nature’s own deep learning algorithm.

“What we might see in the next decade or so is a real virtuous cycle of research between neuroscience and AI, where neuroscience discoveries help us to develop new AI and AI can help us interpret and understand our experimental data in neuroscience,” says Richards.

Image Credit: christitzeimaging.com / Shutterstock.com