DNA Computing Gets a Boost With This Machine Learning Hack

As the master code of life, DNA can do a lot of things. Inheritance. Gene therapy. Wipe out an entire species. Solve logic problems. Recognize your sloppy handwriting.

Wait, What?

In a brilliant study published in Nature, a team from Caltech cleverly hacked the properties of DNA, essentially turning it into a molecular artificial neural network.

When challenged with a classic machine learning task, recognizing handwritten numbers, the DNA computer can deftly recognize digits from one through nine. The mechanism relies on a particular type of DNA molecule dubbed the “annihilator” (awesome name!), which selects the winning response from a soup of biochemical reactions.

But it’s not just sloppy handwriting. The study represents a major leap in the nascent field of DNA computing, allowing the system to recognize far more complex patterns using the same number of molecules. With more tweaking, the molecular neural network could form “memories” of past learning, allowing it to perform different tasks, for example in medical diagnosis.

“Common medical diagnostics detect the presence of a few biomolecules, for example cholesterol or blood glucose,” said study author Kevin Cherry. “Using more sophisticated biomolecular circuits like ours, diagnostic testing could one day include hundreds of biomolecules, with the analysis and response conducted directly in the molecular environment.”

Neural Networks

To senior author Dr. Lulu Qian, the idea of transforming DNA into biocomputers came from nature.

“Before neuron-based brains evolved, complex biomolecular circuits provided individual cells with the ‘intelligent behavior’ required for survival,” Qian wrote in a 2011 academic paper describing the first DNA-based artificial neural network (ANN) system. By building on the richness of DNA computing, she said, it’s possible to program these molecules with autonomous brain-like behaviors.

Qian was particularly intrigued by the idea of reconstituting ANNs in molecular form. The basis of deep learning, a type of machine learning that’s taken the world by storm, ANNs are learning algorithms inspired by the human brain. Similar to their biological inspirations, ANNs contain layers of “neurons” linked by connections of varying strength, which scientists call “weights.” Each weight depends on how likely a certain feature is to be present in the pattern the network is trying to recognize.

ANNs are prized because they are particularly flexible: in number recognition, for example, a trained ANN can generalize the gist of a handwritten digit (say, seven) and use that memory to identify new potential “sevens,” even if the handwriting is incredibly crappy.

Qian’s first attempt at making DNA-based ANNs was a qualified success. It could only recognize very simple patterns, but the system had the potential for scaling up to perform more complex computer vision-like tasks.

This is what Qian and Cherry set out to do in the new study.

Test-Tube Computers

Because DNA computers literally live inside test tubes, the first problem is how to transform images into molecules.

The team started by converting each hand-written digit into a 10-by-10 pixel grid. Each pixel is then represented using a short DNA sequence with roughly 30 letters of A, T, C, and G. In this way, each single digit has its own “mix” of DNA molecules, which can be added together into a test tube.

Because the strands float freely within the test tube, rather than aligning nicely into a grid, the team cleverly used the concentration of each strand to signify its location on the original image grid.
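To make the encoding concrete, here is a minimal sketch under the simplest reading of the description above: every grid position gets its own strand, and an “on” pixel adds that strand to the tube at some concentration. The names `strand_name` and `image_to_mix`, the label format, and the concentration value are all hypothetical; real strands are roughly 30-letter sequences, not text labels.

```python
GRID = 10  # the article describes a 10-by-10 pixel grid


def strand_name(row, col):
    """Hypothetical label standing in for a pixel's unique DNA sequence."""
    return f"pixel_{row}_{col}"


def image_to_mix(image, concentration=1.0):
    """Map a binary image to {strand label: concentration} for each 'on' pixel."""
    mix = {}
    for row in range(GRID):
        for col in range(GRID):
            if image[row][col]:
                mix[strand_name(row, col)] = concentration
    return mix


# A crude "7": a top bar plus a diagonal stroke.
seven = [[0] * GRID for _ in range(GRID)]
for col in range(2, 8):
    seven[1][col] = 1
for step in range(6):
    seven[2 + step][7 - step] = 1

mix = image_to_mix(seven)
print(len(mix), "strand species in the tube")
```

Because the label itself carries the grid position, the order in which strands are listed (or float around) does not matter, which mirrors why a well-mixed tube can still represent a picture.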

Similar to the input image, the computer itself is also made out of DNA. The team first used a standard computer to train an ANN on the task, resulting in a bunch of “weight matrices,” each representing a particular number.

“We come up with some pattern we want the network to recognize, and find an appropriate set of weights” using a normal computer, explained Cherry.

These sets of weights are then translated into specific mixes of DNA strands to build a network for recognizing a specific number.
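The article does not spell out how the weights are computed on the normal computer. As a rough illustration only, one simple assumption is that a digit's weights are the per-pixel frequencies across example images of that digit, so pixels that show up often get larger weights (and, once translated to DNA, higher concentrations). The function name `average_weights` and the toy 4-pixel images are hypothetical.

```python
def average_weights(examples):
    """Per-pixel frequency across flattened binary training images.

    Assumption for illustration: the team's actual training procedure
    is not described in the article.
    """
    n = len(examples)
    size = len(examples[0])
    return [sum(img[i] for img in examples) / n for i in range(size)]


# Three toy 4-pixel "images" of the same digit (real ones would have 100 pixels).
examples = [
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 0, 1],
]
weights = average_weights(examples)
print(weights)
```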

“The DNA [weight] molecules store the pattern we want to recognize,” essentially acting as the memory of the computer, explained Cherry. For example, one set of molecules could detect the number seven, whereas another set hunts down six.

To begin the calculations, the team adds the DNA molecules representing an input image together with the DNA computer in the same test tube. Then the magic happens: the image molecules react with the “weight” molecules (As binding to Ts, Cs pairing with Gs), eventually producing a third bunch of output DNA molecules that fluoresce.

Why does this DNA reaction work as a type of calculation? Remember: if a pixel occurs often within a number, then the DNA strand representing this pixel will be at a higher concentration in the test tube. If the same pixel is also present in the input pattern, then the result is tons of reactions, and correspondingly, lots more glow-in-the-dark output molecules.

In contrast, if the pixel has a low chance of occurring, then the chemical reactions languish too.
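In software terms, this amounts to a weighted sum: each pixel present in the input contributes the weight stored for it, and the total stands in for how much output the reactions produce. The sketch below uses made-up 4-pixel weights for a “six” and a “seven”; the function name `similarity` and all the numbers are assumptions for illustration, not values from the study.

```python
def similarity(input_pixels, weights):
    """Weighted sum: each 'on' input pixel contributes its stored weight."""
    return sum(x * w for x, w in zip(input_pixels, weights))


# Hypothetical stored patterns (higher weight = pixel occurs more often).
weights_six = [0.9, 0.1, 0.8, 0.2]
weights_seven = [0.1, 0.9, 0.2, 0.8]

# A toy input whose 'on' pixels line up with the "six" pattern.
input_image = [1, 0, 1, 0]

score_six = similarity(input_image, weights_six)
score_seven = similarity(input_image, weights_seven)
print("six:", score_six, "seven:", score_seven)
```

The input overlaps the high-weight pixels of the “six,” so that score comes out larger, just as more matching strands would mean more reactions in the tube.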

In this way, by tallying up the reactions of every pixel (i.e. every type of DNA strand), the team gets an idea of how similar the input image is to the stored number within the DNA computer. The trick here is a strategy called “winner-takes-all.” A DNA “annihilator” molecule is set free into the test tube, where it tags onto different output molecules and, in the process, turns them into unreactive blobs unable to fluoresce.

“The annihilator quickly eats up all of the competitor molecules until only a single competitor species remains,” explained Cherry. The winner then glows brightly, indicating the neural network’s decision: for example, the input was a six, not a seven.

In a first test, the team chucked roughly 36 handwritten digits, recoded into DNA, with two DNA computers into the same test tube. One computer represented the number six, the other seven. The molecular computer correctly identified all of them. In a digital simulation, the team further showed that the system could classify over 14,000 handwritten sixes and sevens with 98 percent accuracy, even when those digits looked significantly different from the memory of that number.
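The six-versus-seven contest can be mimicked with a toy simulation of the annihilator. The sketch assumes the annihilator removes one molecule from each of two different competitor species at a rate proportional to both amounts, so the gap between them is preserved while the weaker one drains away. The function `winner_take_all`, the rate, step size, and starting amounts are all hypothetical, and the real chemistry (including how the winner's signal is amplified) is more involved.

```python
def winner_take_all(amounts, rate=1.0, dt=0.01, steps=5000, floor=1e-3):
    """Simulate pairwise annihilation between competing output species.

    Assumed model: each species loses molecules in proportion to its own
    amount times the combined amount of all its competitors.
    """
    levels = dict(amounts)
    for _ in range(steps):
        total = sum(levels.values())
        levels = {
            name: max(0.0, x - rate * x * (total - x) * dt)
            for name, x in levels.items()
        }
    survivors = [name for name, x in levels.items() if x > floor]
    return levels, survivors


# Hypothetical starting amounts of output molecules for each stored digit.
levels, survivors = winner_take_all({"six": 1.7, "seven": 0.3})
print("remaining:", levels)
print("winner:", survivors)
```

Under this model the species that started ahead is the only one left above the threshold, which is the molecular equivalent of picking the largest score.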

“Some of the patterns that are visually more challenging to recognize are not necessarily more difficult for DNA circuits,” the team said.

Next, they further souped up the system by giving each number a different output color combination: green and yellow for five, or green and red for nine. This allowed the “smart soup” to simultaneously detect two numbers in a single reaction.

Forging Ahead

The team’s DNA neural net is hardly the first DNA computer, but it’s certainly the most intricate.

Until now, the most popular method has been constructing logic gates (AND, OR, NOT, and so on) using a technique called DNA strand displacement. Here, the input DNA binds to a DNA logic gate, pushing off another strand of DNA that can be read as an output.

Although many such gates can be combined into complex circuits, it’s a tedious and inefficient way of building a molecular computer. To match the performance of the team’s DNA ANN, for example, a logic gate-based DNA computer would need more than 23 different gates, making the chemical reactions more prone to errors.

What’s more, the same set of molecular ANNs can be used to compute different image recognition tasks, whereas logic-gate-based DNA computers need a special matrix of molecules for each problem.

Looking ahead, Cherry hopes to one day create a DNA computer that learns on its own, ditching the need for a normal computer to generate the weight matrix. “It’s something that I’m working on,” he said.

“Our system opens up immediate possibilities for embedding learning within autonomous molecular systems, allowing them to ‘adapt their functions on the basis of environmental signals during autonomous operations,’” the team said.

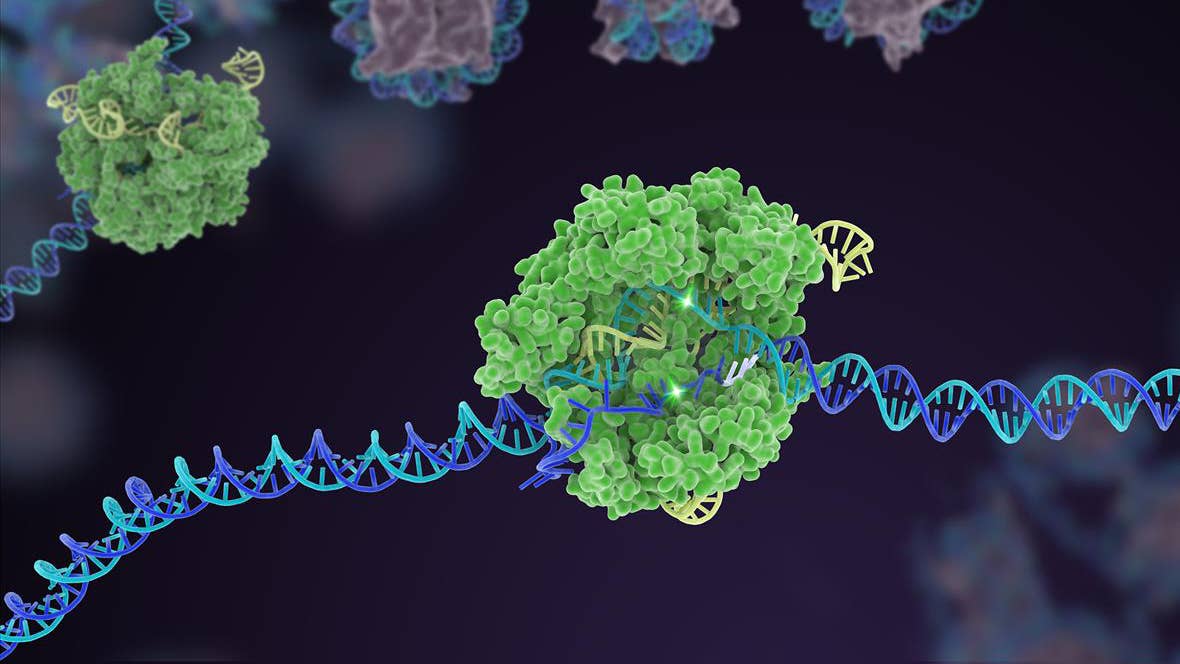

Image Credit: science photo / Shutterstock.com

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.
