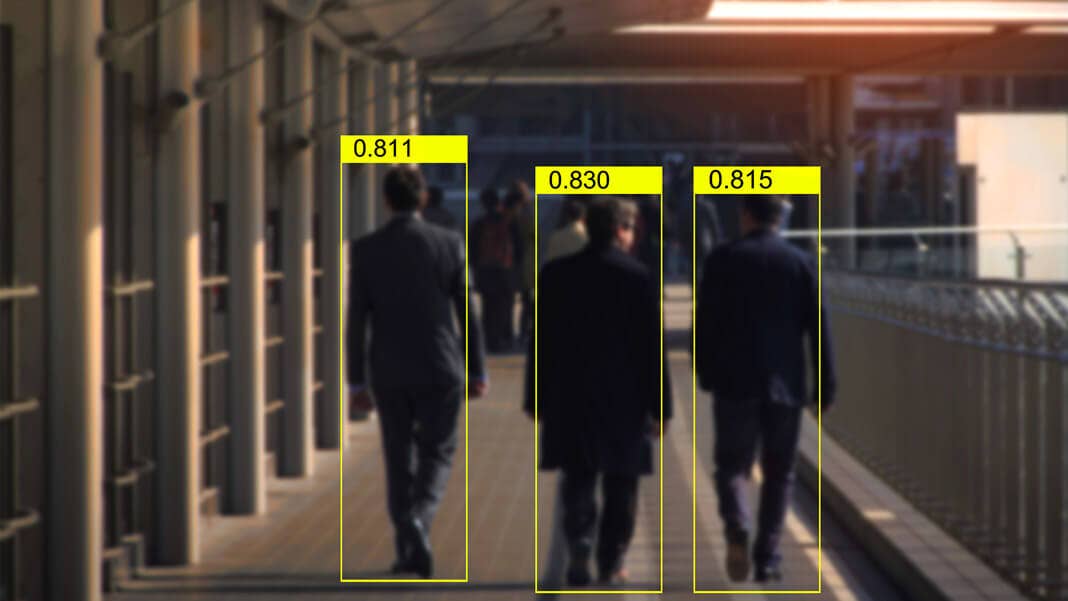

Britain Is Developing an AI-Powered Predictive Policing System

The tantalizing prospect of predicting crime before it happens has got law enforcement agencies excited about AI. Most efforts so far have focused on forecasting where and when crime will happen, but now British police want to predict who will perpetrate it.

The idea is reminiscent of sci-fi classic Minority Report, where clairvoyant “precogs” were used to predict crime before it happened and lock up the would-be criminals. The National Data Analytics Solution (NDAS) under development in the UK will instead rely on AI oracles to scour police records and statistics to find those at risk of violent crime.

Machine learning will be used to analyze a variety of local and national police databases containing information like crime logs, stop and search records, custody records, and missing person reports. In particular, it’s aimed at identifying those at risk of committing or becoming a victim of gun or knife crime, and those who could fall victim to modern slavery.

The program is being led by West Midlands Police (WMP), which covers the cities of Birmingham, Coventry, and Wolverhampton, but the aim is for it to eventually be used by every UK police force. A prototype is due by March 2019.

What police would do with the information has yet to be determined. The head of WMP told New Scientist they won’t be preemptively arresting anyone; instead, the idea would be to use the information to provide early intervention from social or health workers to help keep potential offenders on the straight and narrow or protect potential victims.

But data ethics experts have voiced concerns that the police are stepping into an ethical minefield they may not be fully prepared for. Last year, WMP asked researchers at the Alan Turing Institute’s Data Ethics Group to assess a redacted version of the proposal, and last week the researchers released an ethics advisory in conjunction with the Independent Digital Ethics Panel for Policing.

While the authors applaud the force for attempting to develop an ethically sound and legally compliant approach to predictive policing, they warn that the ethical principles in the proposal are not developed enough to deal with the broad challenges this kind of technology could throw up, and that “frequently the details are insufficiently fleshed out and important issues are not fully recognized.”

The genesis of the project appears to be a 2016 study carried out by data scientists for WMP that used statistical modeling to analyze local and national police databases to identify 32 indicators that could predict those likely to persuade others to commit crimes. These were then used to generate a list of the top 200 “influencers” in the region, which the force said could be used to identify those vulnerable to being drawn into criminality.

The ethics review notes that this kind of approach raises serious ethical questions about undoing the presumption of innocence and allowing people to be targeted even if they’ve never committed an offense and potentially never would have done so.

Similar schemes tested elsewhere in the world highlight the pitfalls of this kind of approach. Chicago police developed an algorithmically generated list of people at risk of being involved in a shooting, intended for early intervention. But a report from RAND Corporation showed that rather than using the list to provide social services, police used it to target people for arrest. Even so, it made no significant difference to the city’s murder rate.

It’s also nearly inevitable that this kind of system will be at risk of replicating the biases that exist in traditional policing. If police disproportionately stop and search young black men, any machine learning system trained on those records will reflect that bias.
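
To make that mechanism concrete, here is a minimal sketch in Python. It is not how NDAS or any real police system works: the two groups, their sizes, and every rate below are hypothetical numbers picked for illustration, and the "model" is just a naive count of recorded offences per person. The assumption doing the work is that an offence only enters the records if the person was stopped.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Group:
    name: str
    population: int
    true_offence_rate: float  # assumed identical for both groups
    stop_rate: float          # share of the group police stop and search


def recorded_offences(group: Group) -> float:
    # An offence only reaches the records if the person was stopped,
    # so the expected count scales with the stop rate.
    return group.population * group.stop_rate * group.true_offence_rate


def learned_risk(group: Group) -> float:
    # A deliberately naive "model": recorded offences per head of population.
    return recorded_offences(group) / group.population


# Hypothetical numbers chosen for illustration only.
heavily_stopped = Group("heavily stopped", 10_000, 0.05, 0.30)
rarely_stopped = Group("rarely stopped", 10_000, 0.05, 0.10)

risk_ratio = learned_risk(heavily_stopped) / learned_risk(rarely_stopped)

for g in (heavily_stopped, rarely_stopped):
    print(f"{g.name}: true rate {g.true_offence_rate:.3f}, "
          f"learned risk {learned_risk(g):.3f}")
print(f"learned risk ratio: {risk_ratio:.1f}")
```

In this toy setup both groups offend at exactly the same rate, yet the learned risk for the heavily stopped group comes out three times higher, purely because it is stopped three times as often. A real system would be far more complex, but the records it learns from are shaped by the same kind of selection.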

A major investigation by ProPublica in 2016 found that software widely used by courts in the US to predict whether someone would offend again, and therefore guide sentencing, was biased against Black defendants. It’s not a stretch to assume that AI used to profile potential criminals would face similar problems.

Bias isn’t the only problem. As the ACLU’s Ezekiel Edwards notes, the data collected by police is simply bad. It’s incomplete, inconsistent, easily manipulated, and slow, and as with all machine learning, rubbish in equals rubbish out. And if you’re using the output of such a system to intervene ahead of whatever you’re predicting, it’s incredibly hard to assess how accurate it is, because the intervention itself changes the outcome you would otherwise have measured.

All this is unlikely to stem the appetite for these kinds of systems, though. Police around the world are dealing with an ever-changing and increasingly complex criminal landscape (and in the UK, budget cuts too), so any tools that promise to make their jobs easier are going to seem attractive. Let’s just hope that agencies follow the lead of WMP and seek out independent oversight of their proposals.

Image Credit: PaO_STUDIO / Shutterstock.com
