These Breakthroughs Made the 2010s the Decade of the Brain


I rarely use the words transformative or breakthrough for neuroscience findings. The brain is complex, noisy, chaotic, and often unpredictable. One intriguing result under one condition may soon fail for a majority of others. What’s more, paradigm-shifting research trends often require revolutionary tools. When we’re lucky, those come once a decade.

But I can unabashedly say that the 2010s saw a boom in neuroscience breakthroughs that transformed the field and will resonate long into the upcoming decade.

In 2010, the idea that we’d be able to read minds, help paralyzed people walk again, incept memories, or build multi-layered brain atlases was nearly unthinkable. Few predicted that deep learning, a family of AI models loosely inspired by neural processing in the brain, would gain prominence and feed back into decoding the brain. Around 2011, I asked a now-prominent AI researcher whether we could use deep neural nets to automatically detect dying neurons in a microscope image; we couldn’t get it to work. Today, AI is readily helping read, write, and map the brain.

As we cross into the next decade, it pays to reflect on the paradigm shifts that made the 2010s the decade of the brain. Even as a bah humbug skeptic, I’m optimistic about the next decade for solving the brain’s mysteries. From genetics and epigenetics to chemical and electrical communication, networks, and cognition, we’ll only get better at understanding and carefully controlling the supercomputer inside our heads.

1. Linking Brains to Machines Goes From Fiction to Science

We’ve covered brain-computer interfaces (BCIs) so many times that even my eyes start to glaze over. Yet I still remember my jaw dropping as I watched a paralyzed man kick off the 2014 World Cup in a bulky mind-controlled exosuit straight out of Edge of Tomorrow.

Flash forward a few years, and scientists have already ditched the exosuit for an implanted neural prosthesis that replaces severed nerves to re-establish communication between the brain’s motor centers and lower limbs.

The rise in BCIs owes much to the BrainGate project, which worked tirelessly to decode movement from electrical signals in the motor cortex. That work allowed paralyzed patients to operate a tablet or robotic limbs with their minds. Today, prosthetic limbs coated with sensors can feed information back into the brain, giving patients mind-controlled movement, a sense of touch, and an awareness of where the limb is in space. Similarly, decoders can synthesize a person’s speech from brain activity. By reading signals in the auditory or visual cortex, they can also reconstruct what the person is hearing or re-create images of what they’re seeing, or even of what they’re dreaming.

For now, most BCIs, especially those that require surgical implants, are mainly used to restore speech or movement to people with disabilities or to decode visual signals. The brain regions that support these functions lie near the brain’s surface, making them relatively accessible and easier to decode.

But there’s plenty of interest in using the same technology to target less tangible brain issues. These include depression, OCD, addiction, and other psychiatric disorders that stem from circuits deep within the brain. Several trials using implanted electrodes, for example, have shown dramatic improvement in people with depression who don’t respond to pharmaceutical drugs, but the results vary significantly between individuals.

The next decade may see non-invasive ways to manipulate brain activity, such as focused ultrasound, transcranial magnetic or direct current stimulation (TMS/tDCS), and variants of optogenetics. Along with increased understanding of brain networks and dynamics, we may be able to play select neural networks like a piano and realize the dream of treating psychiatric disorders at their root.

2. The Rise of Massive National Research Programs

Rarely does one biological research field get such tremendous support from multiple governments. Yet the 2010s saw an explosion in government-backed neuroscience initiatives from the US, EU, and Japan, with China, South Korea, Canada, and Australia in the process of finalizing their plans. These multi-year, multi-million-dollar projects focus on developing new tools to suss out the brain’s inner workings, such as how it learns, how it controls behavior, and how it goes wrong. For some, the final goal is to simulate a working human brain inside a supercomputer. Such a simulation would give researchers an invaluable model for testing their hypotheses. It might also serve as a blueprint for one day reconstructing all of a person’s neural connections, collectively called the connectome.

Even as initial announcements were met with skepticism—what exactly is the project trying to achieve?—the projects allowed something previously unthinkable. The infusion of funding provided a safety blanket to develop new microscopy tools to ever-more-rapidly map the brain, resulting in a toolkit of new fluorescent indicators that track neural activation and map neural circuits. Even rudimentary simulations have generated “virtual epilepsy patients” to help more precisely pinpoint sources of seizures. A visual prosthesis to restore sight, a memory prosthesis to help those with faltering recall, and a push for non-invasive ways to manipulate human brains all stemmed from these megaprojects.

Non-profit institutions such as the Allen Institute for Brain Science have also joined the effort, producing map after map of various animal brains at different resolutions. The upcoming years will see individual brain maps pieced together into comprehensive atlases that cover everything from genetics to cognition. That shift will transform our picture of brain function from flat, paper-based 2D maps into something like a multi-layered Google Maps.

In a way, these national programs ushered in the golden age of brain science, bringing talent from other disciplines (engineers, statisticians, physicists, computer scientists) into neuroscience. Early successes will likely drive even more investment in the next decade, especially as findings begin translating into actual therapies for people who don’t respond to traditional mind-targeting drugs. The next decade will likely see innovative new tools that manipulate neural activity more precisely and less invasively than optogenetics. The rapid growth in data will also push neuroscientists to embrace cloud storage for collaborative research. They will also turn to GPUs and more powerful computing cores to process it all.

3. The Brain-AI-Brain Virtuous Cycle

First, brain to AI. The physical structure and information flow in the cortex inspired deep learning, the most prominent AI model today. Ideas such as hippocampal replay—the brain’s memory center replays critical events in fast forward during sleep to help consolidate memory—also benefit AI models.

In addition, insights into how individual neurons fire have merged with materials science to produce “neuromorphic chips,” processors that compute more like the brain than conventional chips do. Although neuromorphic chips remain mainly an academic curiosity, they could perform complicated, parallel computations using a fraction of the energy today’s processors consume. As deep neural nets grow ever more power hungry, neuromorphic chips may present a welcome alternative.

In return, AI algorithms that closely model the brain are helping solve long-standing mysteries, such as how the visual cortex processes input. In a way, these computational advances are making the complexity and unpredictability of neurobiology more tractable.

Although crossovers between biomedical research and digital software have long existed—think programs that help with drug design—the match between neuroscience and AI is far stronger and more intimate. As AI becomes more powerful and neuroscientists collaborate outside their field, computational tools will only unveil more intricacies of neural processing, including more intangible aspects such as memory, decision-making, or emotions.

4. A Mind-Boggling Array of Research Tools

I talk a bunch about the brain’s electrical activity, but supporting that activity are genes and proteins. Neurons also aren’t a uniform bunch; multiple research groups are piecing together a who’s who of the brain’s neural parts and their individual characteristics.

Although invented in the mid-to-late 2000s, technologies such as optogenetics and single-cell RNA sequencing were widely adopted by the neuroscience community in the 2010s. Optogenetics allows researchers to control neurons with light, even in freely moving animals going about their lives. Add to that a whole list of rainbow-colored proteins to tag active cells, and it’s possible to implant memories. Single-cell RNA sequencing is the queen bee of deciphering a cell’s identity, allowing scientists to read out the gene expression profile of any given neuron. This tech is instrumental in figuring out which neuron populations make up a brain at any point in life: infancy, youth, or old age.

But perhaps the crown in new tools goes to brain organoids, or mini-brains. They bear a remarkable resemblance to the brains of preterm babies, making them excellent models of the developing brain. Organoids may be our best chance of figuring out the neurobiology of autism, schizophrenia, and other developmental brain issues that are difficult to model with mice. This decade is when scientists established a cookbook for organoids of different types; the next will see far more studies that tap into their potential for modeling a growing brain. With hard work and luck, we may finally be able to tease out the root causes of these developmental issues.

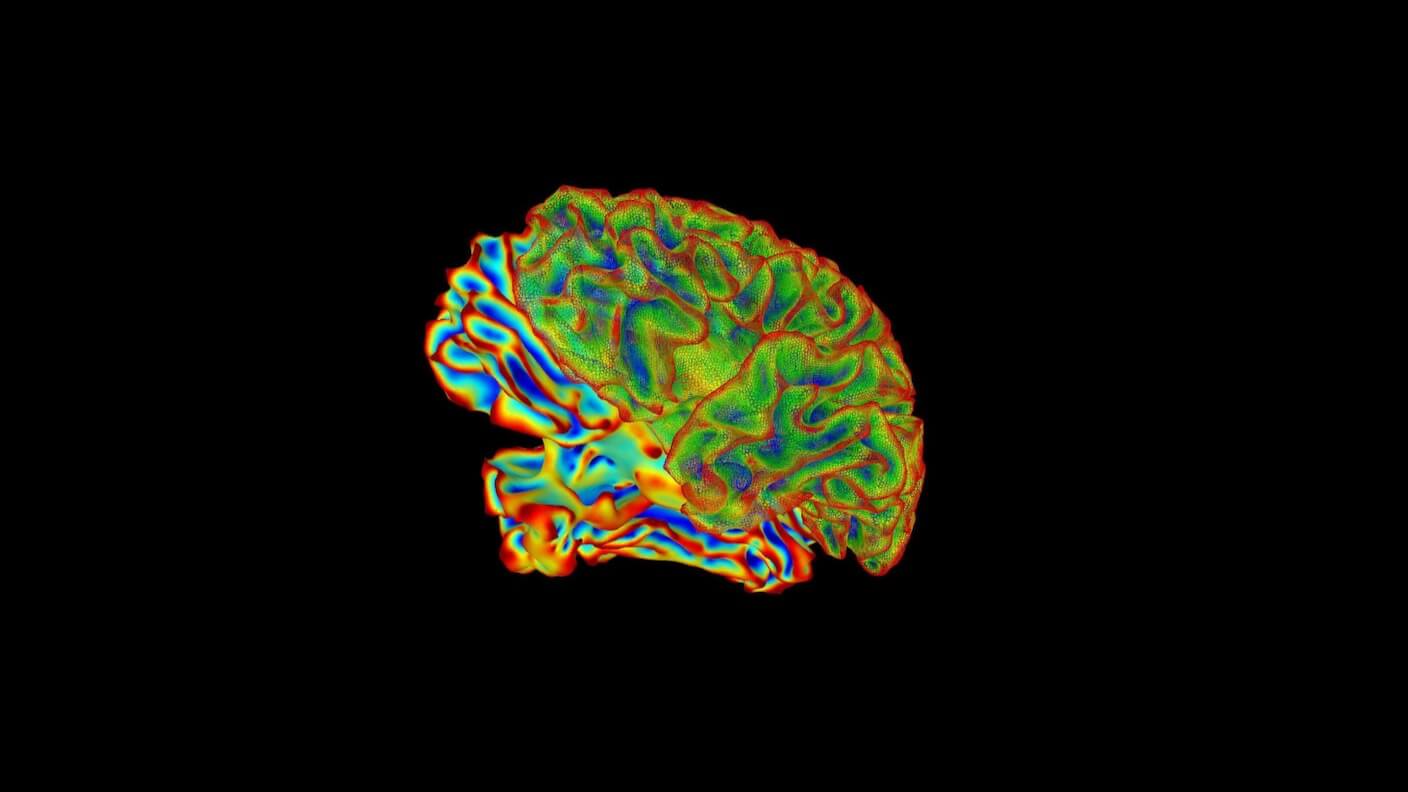

Image Credit: NIH

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.
