What ‘Chernobyl’ Can Teach Us About Failure

I’ve been watching the outstanding HBO series Chernobyl, which details the worst nuclear reactor meltdown in human history, an event that released roughly 400 times more radioactive material than the atomic bomb dropped on Hiroshima.

What occurred to me, and what I discovered over the course of the TV series, was that this was a catastrophic failure destined to happen: a slow drift into failure from the beginning, only expedited by the people executing the experiment their rules-based culture required. But why? Is failure just a matter of when?

The Mystery Is How Anything Ever Works at All

In the pursuit of success in our dynamic, ever-changing and complex business environment with limited resources and many conflicting desired outcomes, a succession of tiny decisions eventually can produce breakdowns—a domino action of latent failures—on a tremendous scale.

From news feeds to newspapers, daily debacles highlight how the systems we design—with positive intent—can create more unintended consequences and negative effects on the society those very systems are designed to support.

From Facebook hacking to Boeing 737 Max accidents, algorithmic autonomous-bot arguments to legacy top-down management structures, information flows and decision-making, we struggle to cope with much of our context, to the point it's amazing that anything ever works as intended at all.

When problems occur we hunt for a single root cause, that one broken piece or person to hold accountable. Our analyses of complex system breakdowns remain linear, componential, and reductive. In short, it’s inhumane.

The growth of complexity in society has outpaced our understanding of how complex systems succeed and fail. Or as Sidney Dekker, human factors and safety author, said, “Our technologies have gotten ahead of our theories.”

Modeling the Drift into Failure

Another pioneering safety researcher, Jens Rasmussen, identified this failure-mode phenomenon which he called “drift to danger,” or the “systemic migration of organizational behavior toward accident under the influence of pressure toward cost-effectiveness in an aggressive, competing environment.”

Any major initiative is subjected to multiple pressures, and our responsibility is to operate within the space of possibilities formed by economic, workload, and safety constraints to navigate towards the desired outcomes we hope to achieve at a given time.

Yet our capitalist landscape encourages decision-makers to focus on short-term incentives, financial success, and survival over long-term criteria such as safety, security, and scalability. Workers must be more productive to stay ahead and become “cheaper, faster, better.” Customer expectations accelerate exponentially with each compounding innovation cycle of progress. These pressures push against and migrate teams towards the limits of acceptable (safe) performance. Accidents occur when the system’s activity crosses the boundary into unacceptable safety conditions.

Rasmussen’s model helps us to map and navigate complexity toward properties for which we wish to optimize. For example, if we want to optimize for Safety, then we need to understand where our safety boundary is in his model for our work. For instance, optimizing for Safety is the primary, explicit outcome of Chaos Engineering.
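As a rough illustration of the drift described above, here is a minimal sketch in Python. It is a toy, not Rasmussen’s formal model: the `OperatingPoint` class, the integer "margin" units (0 means the boundary has been reached, 100 means far from it), and the pressure values are all assumptions invented for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class OperatingPoint:
    """Hypothetical distances (0-100) from each of the three boundaries."""
    economic_margin: int
    workload_margin: int
    safety_margin: int


def drift(point: OperatingPoint, cost_pressure: int, effort_pressure: int) -> OperatingPoint:
    """Apply one round of pressure.

    Assumption for this toy: pressure toward cost-effectiveness and least
    effort moves the team away from the economic and workload boundaries,
    and by the same amount toward the safety boundary.
    """
    return OperatingPoint(
        economic_margin=min(100, point.economic_margin + cost_pressure),
        workload_margin=min(100, point.workload_margin + effort_pressure),
        safety_margin=max(0, point.safety_margin - cost_pressure - effort_pressure),
    )


def steps_until_accident(
    point: OperatingPoint,
    cost_pressure: int,
    effort_pressure: int,
    max_steps: int = 1000,
) -> Optional[int]:
    """Count the small decisions it takes to cross the safety boundary.

    Returns None if the boundary is not reached within max_steps.
    """
    for step in range(1, max_steps + 1):
        point = drift(point, cost_pressure, effort_pressure)
        if point.safety_margin <= 0:
            return step
    return None
```

No single step in this sketch looks dangerous on its own. The accident only becomes visible when you track the distance to the safety boundary over time, which is why knowing where that boundary sits matters.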

Spoiler Alerts (Or the Lack Thereof)

The crew at Chernobyl were performing a low-power test to understand whether residual turbine spin could generate enough electric power to keep the cooling system running as the reactor was shutting down. The standard for the planning of such an experiment should have been detailed and conservative. It was not. It was a poorly designed, “see what happens” experiment, with no criteria established ahead of time for when to abort it. The test had also failed multiple times previously.

Design engineering assistance was not requested, so the crew proceeded without safety precautions and without properly coordinating or communicating the procedure with safety personnel. Chernobyl was also the award-winning, top-performance reactor site in the Soviet Union.

The experiment went out of control.

In order to keep the reactor from shutting down completely, the crew shut down several safety systems. Then, when the remaining alarm signaled, they ignored it for 20 seconds.

While the behavior of the team was questionable, there was also a deeper, unknown latent failure in waiting. The reactor at Chernobyl had a unique engineering flaw that caused it to overheat during the test, one that the government’s policy of protecting state secrets kept hidden from the scientists. The graphite components selected for the reactor design were also believed to offer similar safety standards at lower cost. Needless to say, they did not.

Under standard operating conditions, reactor No. 4’s maximum power output was 3,200 MWt (megawatts thermal). During the power surge that followed, the reactor’s output spiked to over 320,000 MWt, more than 100 times that maximum. This caused the reactor housing to rupture, resulting in a massive steam explosion and fire that demolished the reactor building and released large amounts of radiation into the atmosphere.

The first official explanation of the Chernobyl accident was quickly published in August 1986, about four months after the accident. It effectively placed the blame on the power plant operators, noting that the catastrophe was caused by gross violations of operating rules and regulations. The operator error was attributed to the operators’ lack of knowledge of nuclear reactor physics and engineering, as well as their lack of experience and training. The hunt for the single root cause and the individuals at fault was complete. Case closed.

It wasn’t until the International Atomic Energy Agency’s revised analysis in 1993 that the reactor’s design was called into question.

One reason there are such contradictory viewpoints and so much debate about the causes of the Chernobyl accident is that the primary data covering the disaster, as registered by the instruments and sensors, were not completely published in the official sources.

Much of the low-level information for how the plant was designed was also kept from operators due to secrecy and censorship by the Soviet government.

The four Chernobyl reactors were pressure-tube reactors of the Soviet RBMK design, which was very different from standard commercial designs and employed a unique combination of a graphite moderator and water coolant. This made the RBMK design very unstable at low power levels and prone to suddenly increasing energy production to a dangerous level. This behavior is counterintuitive, and it was unknown to the operating crew.

Additionally, the Chernobyl plant did not have the fortified containment structure common to most nuclear power plants elsewhere in the world. Without this protection, radioactive material escaped into the environment.

Contributing Factors to Failure to Consider

KPIs drive behavior

The crew at Chernobyl were required to complete the test to conform to standard operating rules to ‘be safe.’ They had a narrow focus on what mattered in terms of safety, that is, completing the test rather than operating the plant safely. They did not define boundaries or success and failure criteria for the experiment in advance of performing it. They didn’t have all the information to set themselves up for success. And they disregarded other indicators flagged by the system as anomalies and pushed ahead to complete the experiment within the timeline they were assigned.

Flow of information

The quality of your decisions is based on the quality of your information, supported by a good process to make decisions. The operators were following a bad process with missing information.

Often we find that people setting policy are not the people doing the actual work, and this causes breakdowns between work-as-expected and work-as-done, such as the graphite design of the reactor.

Often workers choose to violate policy because they are in a double-bind position, and so they optimize for one value (like timeliness or efficiency) at the expense of another (like verification).

Values guide behavior, limited resources put pressure on behavior

What if there's a conflict between what the company tells you are the behaviors that lead to success, and the behaviors you believe lead to success? What would you do when following the rules goes against your values?

KPIs are sometimes set in such a way that the resulting pressure distorts behavior and creates unsafe systems. The Chernobyl team that was fully prepared to run the test ultimately had to be replaced by a night shift crew when the test was delayed, and that night shift had far less preparation.

The chief engineer was thus put under further pressure to complete the test (and avoid further delay), ultimately clouding his judgment around the inherent risk of using an alternate crew.

How to Drift into Value (Over Failure)

Performance variability may introduce a drift in your situation. However, we can drift to success over failure by creating experiences and social structures for people to safely learn how to handle uncertainty, and navigate towards the desired outcomes we hope to achieve at a given time.

Here’s a set of principles and practices to consider.

- Try to encourage sharing of high-quality information as frequently and liberally as possible

- Look into the layers of your organization—where are decisions made? How can you move authority to where the information is richest, the context most current, and the employees closest to the customers or situation at hand?

- Be aware of conflicting KPIs, and compare them with the organization’s values and the behaviors they might drive

- Have explicit and communicated boundaries for economic, workload, and safety constraints. In Rasmussen’s model these boundaries exist implicitly, whether you acknowledge them or not. Make sure the people doing the work understand where all three boundaries actually are in your context

- Have fast feedback mechanisms in place to tell you when you’re hitting your pre-defined risk and experiment boundaries
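The last two practices, explicit boundaries and fast feedback, can be sketched in a few lines of Python. This is only an illustrative outline, assuming an experiment that emits a stream of readings as dictionaries. The names `AbortCriterion` and `run_experiment`, and the `error_rate` field used in the usage note, are hypothetical and not drawn from any particular tool.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class AbortCriterion:
    """A boundary agreed on BEFORE the experiment starts."""
    name: str
    breached: Callable[[dict], bool]  # returns True when a reading crosses the boundary


def run_experiment(readings: Iterable[dict], criteria: List[AbortCriterion]) -> str:
    """Check every reading against every pre-defined criterion.

    Stops at the first breach instead of pushing ahead to finish the test.
    """
    for tick, reading in enumerate(readings):
        for criterion in criteria:
            if criterion.breached(reading):
                return f"aborted at tick {tick}: {criterion.name}"
    return "completed"
```

For example, with a single criterion `AbortCriterion("error rate above 5%", lambda r: r["error_rate"] > 0.05)` and readings of 0.01, 0.02, and 0.09, the run stops on the third reading. The point of the design is that the decision to stop is made in advance, when nobody is under deadline pressure, rather than in the moment.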

Conclusion

Complex systems have emergent properties, which means explaining accidents by working backwards from the particular part of the system that has failed will never provide a full explanation of what went wrong.

There will rarely be a single source of failure, but many tiny acts and decisions along the way that eventually unearth the latent failures in your system. This is why it’s important to remember that our work doesn’t only progress through time, it progresses through new information, understanding, and knowledge.

The question is: what systems do you have in place to safely control your problem domain (not your people)?

Chernobyl, while the world’s worst nuclear accident, did lead to major changes in safety culture and in industry cooperation, particularly between East and West before the end of the Soviet Union. Former President Gorbachev said that the Chernobyl accident was a more important factor in the fall of the Soviet Union than Perestroika, his program of liberal reform.

What mental models, theories, and methods are you using that are not driving the outcomes you’re seeking, and which of them must be unlearned?

This article is republished from Barry O’Reilly with permission. Read the original article here.

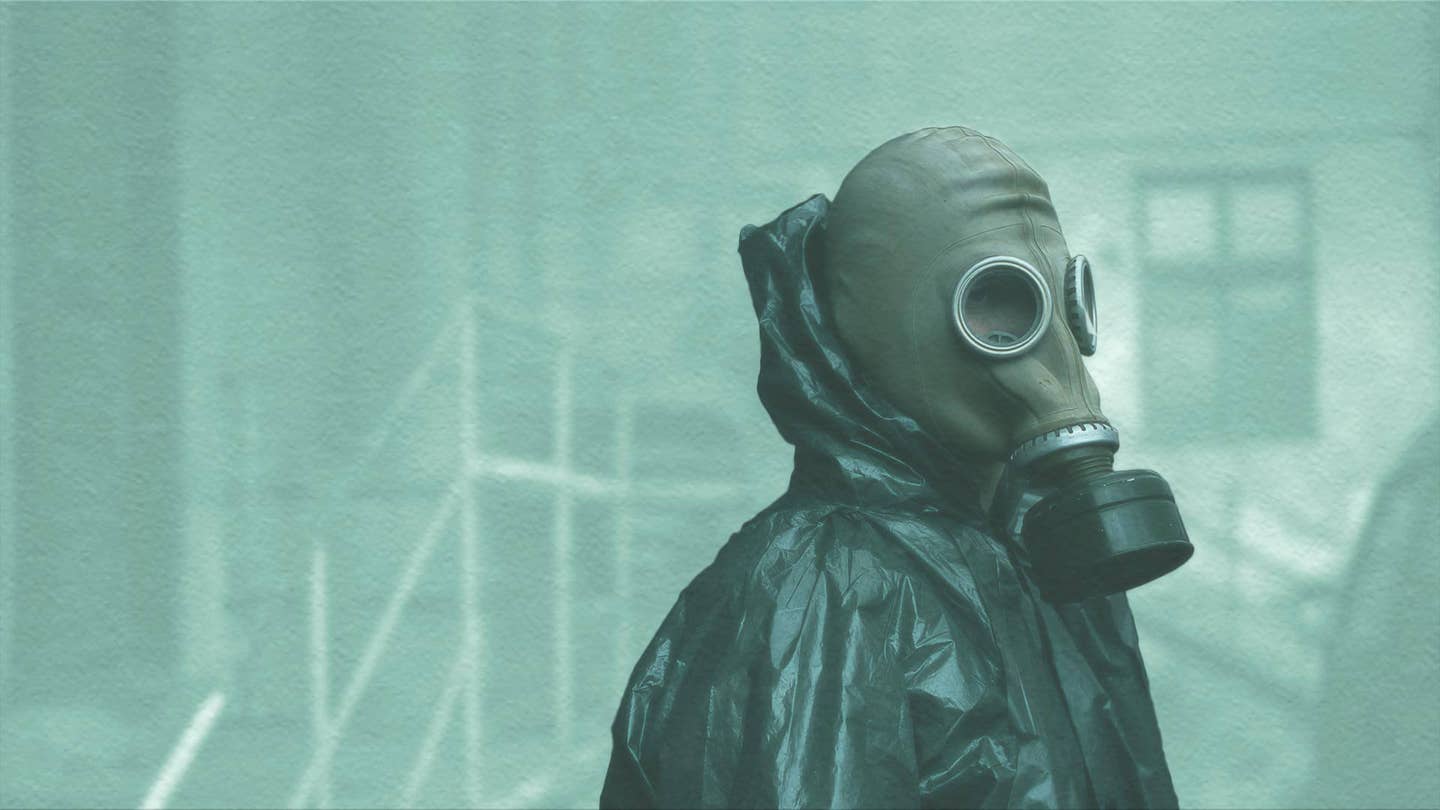

Image Credit: Mariana Smaha / Shutterstock.com

Barry O’Reilly is a business advisor, entrepreneur, and author who has pioneered the intersection of business model innovation, product development, organizational design, and culture transformation. Barry works with business leaders and teams from global organizations that seek to invent the future, not fear it. Every day, Barry works with many of the world’s leading companies to break the vicious cycles that spiral businesses toward death by enabling experimentation and learning to unlock the insights required for better decision making and higher performance and results. Barry is co-author of the international bestseller Lean Enterprise: How High Performance Organizations Innovate at Scale—included in the Eric Ries Lean series, and a Harvard Business Review must read for CEOs and business leaders. He is an internationally sought-after speaker, frequent writer and contributor to The Economist, Strategy+Business, and MIT Sloan Management Review. Barry is Faculty at Singularity University, advising and coaching on Singularity’s executive and accelerator programs based in San Francisco, and throughout the globe. Barry is also founder and CEO of ExecCamp, the entrepreneurial experience for executives, and management consultancy Antennae. His mission is to help purposeful technology-led businesses innovate at scale.
