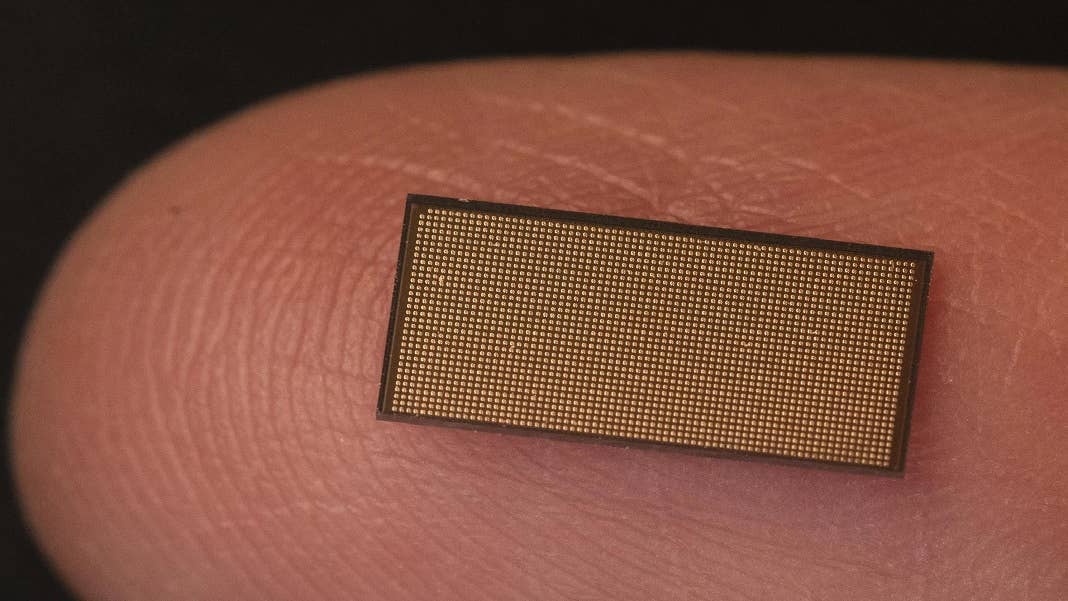

Intel’s Brain-Inspired Loihi 2 Chip Can Hold a Million Artificial Neurons

Computer chips that recreate the brain's structure in silicon are a promising avenue for powering the smart robots of the future. Now Intel has released an updated version of its Loihi neuromorphic chip, which it hopes will bring that dream closer.

Despite frequent comparisons, the neural networks that power today’s leading AI systems operate very differently than the brain. While the “neurons” used in deep learning shuttle numbers back and forth between one another, biological neurons communicate in spikes of electrical activity whose meaning is tied up in their timing.
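To make the contrast concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. It is an illustration only, not the neuron model used by Loihi; the function name `simulate_lif` and the `threshold`, `leak`, and `reset` values are assumptions chosen for the example. The neuron's output is a list of spike times rather than a number, so a stronger input shows up as earlier and more frequent spikes.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    Illustrative textbook model; all parameter values are assumptions.
    """
    v = reset  # membrane potential
    spike_times = []
    for t, current in enumerate(input_current):
        # The potential decays (leaks) a little, then integrates the new input.
        v = leak * v + current
        if v >= threshold:
            # Crossing the threshold emits a spike and resets the potential.
            spike_times.append(t)
            v = reset
    return spike_times


# The same neuron driven by a weak and a strong constant input.
weak = simulate_lif([0.3] * 20)
strong = simulate_lif([0.6] * 20)
print("weak input spikes at:  ", weak)
print("strong input spikes at:", strong)
```

The information about input strength is carried entirely by when, and how often, the neuron fires.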

That is a very different language from the one spoken by modern processors, and it’s been hard to efficiently implement these kinds of spiking neurons on conventional chips. To get around this roadblock, so-called “neuromorphic” engineers build chips that mimic the architecture of biological neural networks to make running these spiking networks easier.

The field has been around for a while, but in recent years it’s piqued the interest of major technology companies like Intel, IBM, and Samsung. Spiking neural networks (SNNs) are considerably less developed than the deep learning algorithms that dominate modern AI research. But they have the potential to be far faster and more energy-efficient, which makes them promising for running AI on power-constrained edge devices like smartphones or robots.

Intel entered the fray in 2017 with its Loihi neuromorphic chip, which could emulate 125,000 spiking neurons. Now the company has released a major update that can implement up to one million neurons and is up to ten times faster than its predecessor.

“Our second-generation chip greatly improves the speed, programmability, and capacity of neuromorphic processing, broadening its usages in power and latency constrained intelligent computing applications,” Mike Davies, director of Intel’s Neuromorphic Computing Lab, said in a statement.

Loihi 2 doesn’t just significantly boost the number of neurons; it also greatly expands their functionality. As outlined by IEEE Spectrum, the new chip is much more programmable, allowing it to implement a wide range of SNNs rather than the single type of model the previous chip was capable of.

It’s also capable of supporting a wider variety of learning rules that should, among other things, make it more compatible with the kind of backpropagation-based training approaches used in deep learning. Faster circuits also mean the chip can now run at 5,000 times the speed of biological neurons, and improved chip interfaces make it easier to get several of them working in concert.

Perhaps the most significant changes, though, are to the neurons themselves. Each neuron can run its own program, making it possible to implement a variety of different kinds of neurons. And the chip’s designers have taken it upon themselves to improve on Mother Nature’s designs by allowing the neurons to communicate using both spike timing and strength.
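The difference between timing-only spikes and spikes that also carry a strength can be sketched in a few lines. This is a hypothetical illustration, not Loihi 2's actual spike encoding or any Intel API; the `Spike` class, its `magnitude` field, and the `synaptic_input` helper are names invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Spike:
    """A spike event. Hypothetical structure for illustration only."""
    time: int
    magnitude: int = 1  # a classic binary spike carries no strength, so default to 1


def synaptic_input(spikes, weight, t):
    """Input a downstream neuron receives at step t through one synapse."""
    return sum(weight * s.magnitude for s in spikes if s.time == t)


# Same spike times, but the graded train also encodes a strength per spike.
binary = [Spike(time=2), Spike(time=5)]
graded = [Spike(time=2, magnitude=3), Spike(time=5, magnitude=1)]

print(synaptic_input(binary, weight=4, t=2))
print(synaptic_input(graded, weight=4, t=2))
```

With binary spikes, only the timing distinguishes one event from another; with graded spikes, two events at the same time step can deliver different amounts of input.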

The company doesn’t appear to have any plans to commercialize the chips, though, and for the time being they will only be available over the cloud to members of the Intel Neuromorphic Research Community.

But the company does seem intent on building up the neuromorphic ecosystem. Alongside the new chip, it has also released a new open-source software framework called Lava to help researchers build “neuro-inspired” applications that can run on any kind of neuromorphic hardware or even conventional processors. “Lava is meant to help get neuromorphic [programming] to spread to the wider computer science community,” Davies told Ars Technica.

That will be a crucial step if the company ever wants its neuromorphic chips to be anything more than a novelty for researchers. But given the broad range of applications for the kind of fast, low-power intelligence they could one day provide, it seems like a sound investment.

Image Credit: Intel
