Quantum Chip Takes Microseconds to Do a Task a Supercomputer Would Spend 9,000 Years On

Are quantum computers overhyped?

A new study in Nature says no. A cleverly designed quantum device developed by Xanadu, a company based in Toronto, Canada, obliterated conventional computers on a benchmark task that would otherwise take them over 9,000 years.

For the quantum chip Borealis, answers came within 36 microseconds.
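To put those two numbers side by side, here is a back-of-the-envelope calculation. It uses only the 9,000-year and 36-microsecond figures quoted above; the length of a year in seconds is an assumed convention, and the result is a rough ratio rather than a figure from the study.

```python
# Rough speedup implied by the two headline figures.
# Assumption: a Julian year of 365.25 days is used for the conversion.
SECONDS_PER_YEAR = 365.25 * 24 * 3600

classical_seconds = 9_000 * SECONDS_PER_YEAR  # "over 9,000 years"
quantum_seconds = 36e-6                       # 36 microseconds

speedup = classical_seconds / quantum_seconds

print(f"classical estimate: {classical_seconds:.2e} s")
print(f"implied speedup:    {speedup:.1e}x")
```

The ratio comes out to several quadrillion, which is why the comparison is framed in years versus microseconds.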

Xanadu’s accomplishment is the latest to demonstrate the power of quantum computing over conventional computers—a seemingly simple idea dubbed quantum advantage.

Theoretically, the concept makes sense. Unlike conventional computers, which calculate in sequence using binary bits—0 or 1—quantum devices tap into the weirdness of the quantum world, where 0 and 1 can both exist at the same time with differing probabilities. The data is processed in qubits, a noncommittal unit that simultaneously performs multiple calculations thanks to its unique physics.
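A toy illustration of "0 and 1 with differing probabilities": the sketch below models a single qubit as two complex amplitudes and simulates repeated measurements. The function names are hypothetical, and this is an ordinary classical simulation of the textbook rule, not code for any real quantum device.

```python
import math
import random


def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Return (P(0), P(1)) for a qubit with amplitudes alpha and beta."""
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    # Dividing by the norm lets callers pass unnormalized amplitudes.
    return abs(alpha) ** 2 / norm, abs(beta) ** 2 / norm


def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one measurement: the qubit 'commits' to 0 or 1."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


# An equal superposition: both outcomes are equally likely.
alpha = beta = 1 / math.sqrt(2)
p0, p1 = measurement_probabilities(alpha, beta)

rng = random.Random(0)  # fixed seed so the run is repeatable
counts = [0, 0]
for _ in range(10_000):
    counts[measure(alpha, beta, rng)] += 1

print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
print(f"simulated counts: {counts}")
```

Each individual measurement returns a plain 0 or 1; the probabilities only show up in the statistics over many runs.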

Translation? A quantum computer is like a hyper-efficient multitasker, whereas conventional computers are far more linear. Given the same problem, a quantum computer should in theory be able to trounce any supercomputer in speed and efficiency. The idea, dubbed “quantum supremacy,” has been the driving force behind the push for a new generation of computers completely alien to anything previously made.

The problem? Proving quantum supremacy is extremely difficult. As quantum devices increasingly leave the lab to solve more real-world problems, scientists are embracing an intermediate benchmark: quantum advantage, which is the idea that a quantum computer can beat a conventional one at just one task—any task.

Back in 2019, Google broke the internet by showcasing the first example of a quantum computer, Sycamore, solving a computational problem in just 200 seconds with 54 qubits, compared to an estimated 10,000 years for a conventional supercomputer. A Chinese team soon followed with a second fascinating showcase of quantum computational advantage, with the machine spitting out answers that would take a supercomputer over two billion years.

Yet a crucial question remains: are any of these quantum devices even close to being ready for practical use?

A Drastic Redesign

It’s easy to forget that computers rely on physics. Our current systems, for example, tap into electrons and cleverly designed chips to perform their functions. Quantum computers are similar, but they rely on different physical phenomena. Initial generations of quantum machines looked like delicate, shimmering chandeliers. While absolutely gorgeous, they’re also completely impractical compared to a compact smartphone chip. The hardware often requires tightly controlled environments, such as temperatures near absolute zero, to reduce interference and boost the computer’s efficacy.

The core concept is the same across quantum computers: qubits processing data in superposition, a quantum physics quirk that allows them to encode 0s, 1s, or both at the same time. The hardware that supports the idea, however, differs vastly.

Google’s Sycamore, for example, uses superconducting metal loops—a setup popular with other tech giants including IBM, which introduced Eagle, a powerful 127-qubit quantum chip in 2021 that’s about the size of a quarter. Other iterations from companies such as Honeywell and IonQ took a different approach, tapping into ions—atoms with one or more electrons removed—as their main source for quantum computing.

Another idea relies on photons, or particles of light. It’s already been proven useful: the Chinese demonstration of quantum advantage, for example, used a photonic device. But the idea’s also been shunned as a mere stepping stone towards quantum computing rather than a practical solution, largely because of difficulties in engineering and setup.

A Photonic Revolution

Xanadu’s team proved naysayers wrong. The new chip, Borealis, resembles the one in the Chinese study in that it uses photons, rather than superconducting materials or ions, for computation.

But it has a huge advantage: it’s programmable. “Previous experiments typically relied on static networks, in which each component is fixed once fabricated,” explained Dr. Daniel Jost Brod at the Federal Fluminense University at Rio de Janeiro in Brazil, who was not involved in the study. The earlier quantum advantage demonstration in the Chinese study used a static chip. With Borealis, however, the optical elements “can all be readily programmed,” making it less of a single-use device and more of an actual computer potentially capable of solving multiple problems. (The quantum playground is available on the cloud for anyone to experiment with and explore after signing up.)

The chip’s flexibility comes from an ingenious design update, an “innovative scheme [that] offers impressive control and potential for scaling,” said Brod.

The team zeroed in on a problem called Gaussian boson sampling, a benchmark for evaluating quantum computing prowess. The test, while extraordinarily difficult computationally, doesn’t have much impact on real-world problems. However, like chess or Go for measuring AI performance, it acts as an unbiased judge to examine quantum computing performance. It’s a “gold standard” of sorts: “Gaussian boson sampling is a scheme designed to demonstrate the advantages of quantum devices over classical computers,” explained Brod.

The setup is like a carnival funhouse mirror tent in a horror movie. Special states of light, amusingly called “squeezed states,” are channeled onto a chip embedded with a network of beam splitters. Each beam splitter acts like a semi-reflective mirror: depending on how the light hits it, part is reflected and part passes through. At the end of the contraption is an array of photon detectors. The more beam splitters there are, the more difficult it is to calculate which detector any individual photon will end up at.
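For readers who want to see the semi-reflective mirror in numbers, here is a minimal sketch of a single photon meeting a beam splitter. It assumes the common textbook convention in which a lossless beam splitter is a 2x2 matrix with a factor of i on the reflected path; the function names are hypothetical, and this is not Borealis's actual optical model.

```python
import math


def beam_splitter(theta: float) -> list[list[complex]]:
    """2x2 matrix for a lossless beam splitter (assumed convention).

    cos(theta) is the transmitted amplitude, i*sin(theta) the reflected one.
    """
    t = math.cos(theta)
    r = math.sin(theta)
    return [[t, 1j * r], [1j * r, t]]


def apply(matrix: list[list[complex]], amps: list[complex]) -> list[complex]:
    """Multiply the matrix by the vector of path amplitudes."""
    return [sum(row[j] * amps[j] for j in range(len(amps))) for row in matrix]


def probabilities(amps: list[complex]) -> list[float]:
    """Chance of detecting the photon on each path."""
    return [abs(a) ** 2 for a in amps]


half_mirror = beam_splitter(math.pi / 4)  # a 50:50 splitter

photon_in = [1 + 0j, 0j]                  # photon enters on path 0
after_one = apply(half_mirror, photon_in)
after_two = apply(half_mirror, after_one)

print("after one splitter: ", [round(p, 3) for p in probabilities(after_one)])
print("after two splitters:", [round(p, 3) for p in probabilities(after_two)])
```

After one splitter the photon is equally likely to be found on either path. After a second identical splitter the two routes interfere and, under this convention, the photon always exits on path 1. That interference is what makes large networks of splitters hard to predict with classical reasoning.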

As another visualization: picture a bean machine, a peg-studded board encased in glass. To play, you drop a puck into the pegs at the top. As the puck falls, it randomly hits different pegs, eventually landing in a numbered slot.

Gaussian boson sampling replaces the pucks with photons, with the goal of detecting which photon lands in which detector slot. Due to quantum properties, the number of possible resulting distributions grows exponentially with the number of photons, rapidly outpacing the power of any supercomputer. It’s an excellent benchmark, explained Brod, largely because we understand the underlying physics, and the setup suggests that even a few hundred photons can challenge supercomputers.
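A simple counting exercise gives a feel for how quickly the possibilities pile up. The sketch below counts the ways a given number of identical photons can be spread across a row of detectors, using the standard "stars and bars" formula. It is only a counting illustration under that assumption, not the complexity analysis from the study; the function name is hypothetical, and the detector count of 216 is borrowed from the figure quoted for Borealis.

```python
import math


def count_outcomes(num_photons: int, num_detectors: int) -> int:
    """Ways to distribute identical photons over detectors (stars and bars)."""
    return math.comb(num_photons + num_detectors - 1, num_photons)


NUM_DETECTORS = 216  # assumption: one detector slot per mode

for photons in (5, 20, 50, 100):
    outcomes = count_outcomes(photons, NUM_DETECTORS)
    # Report the order of magnitude rather than the full integer.
    print(f"{photons:>3} photons -> about 10^{len(str(outcomes)) - 1} possible patterns")
```

A classical simulation has to assign a probability to patterns drawn from this space, and the space balloons as photons are added.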

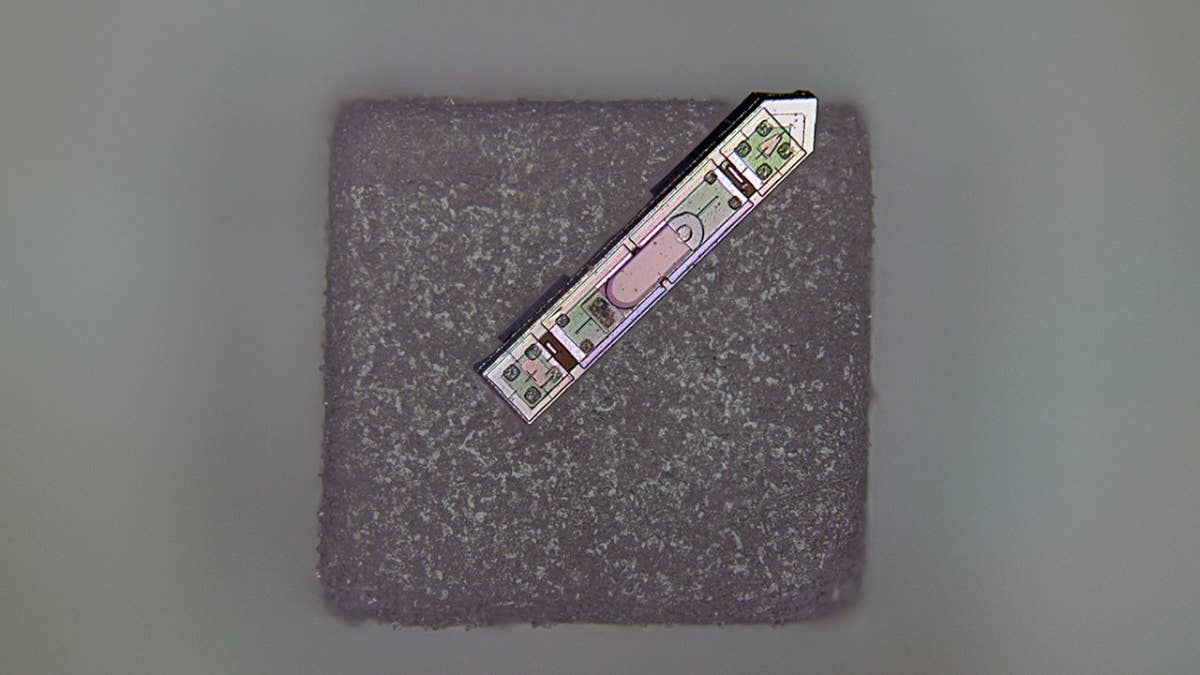

Taking up the challenge, the new study reimagined a photonic quantum device with an admirable 216 squeezed-light modes, the device’s rough counterpart to qubits. Departing from classic designs, the device sorts photons into bins by arrival time rather than by direction, the previous standard. The trick was to introduce loops of optical fiber that delay photons so they can interfere at specific spots important for quantum computation.

These tweaks led to a vastly slimmed-down device. The usual large network of beam splitters, normally needed for photon communication, can be reduced to just three, which together accommodate all the delays needed for photons to interact and carry out the task. The loop designs, along with other components, are also “readily programmable” in that a beam splitter can be fine-tuned in real time, like editing computer code but at the hardware level.
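The delay-loop idea can be sketched in a few lines. The code below treats the light as a train of numbered time bins and lists which bins a given pulse can meet when loops delay light by a fixed number of bins. The delay lengths of 1, 6, and 36 bins and the train length of 216 are assumptions chosen for illustration, and the function names are hypothetical; the sketch also ignores light that circulates around a loop more than once.

```python
def loop_couplings(num_bins: int, delay: int) -> list[tuple[int, int]]:
    """Pairs (earlier, later) of time bins that meet at one loop's beam splitter.

    A pulse held in the loop for `delay` bins re-emerges just as the pulse
    `delay` bins behind it arrives, so the two can interfere.
    """
    return [(t - delay, t) for t in range(delay, num_bins)]


DELAYS = (1, 6, 36)  # assumed loop lengths, in units of one time bin
NUM_BINS = 216       # assumed length of the pulse train


def neighbours(bin_index: int, num_bins: int = NUM_BINS,
               delays: tuple[int, ...] = DELAYS) -> list[int]:
    """Time bins that can interfere directly with `bin_index` via any loop."""
    found = set()
    for delay in delays:
        for other in (bin_index - delay, bin_index + delay):
            if 0 <= other < num_bins:
                found.add(other)
    return sorted(found)


for delay in DELAYS:
    pairs = loop_couplings(NUM_BINS, delay)
    print(f"delay {delay:>2}: {len(pairs)} interfering pairs")

print("bin 100 can meet bins:", neighbours(100))
```

With these assumed delays, three beam splitters are enough to link each pulse to neighbors near and far in the train, which is the sense in which a sprawling spatial network can shrink to a handful of loops.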

The team also aced a standard sanity check, certifying that the output data was correct.

For now, studies that reliably show quantum supremacy remain rare. Conventional computers have a half-century head start. As algorithms keep evolving on conventional computers, especially those that tap into powerful AI-focused chips or neuromorphic computing designs, conventional machines may even outperform quantum devices, leaving the latter struggling to catch up.

But that’s the fun of the chase. “Quantum advantage is not a well-defined threshold, based on a single figure of merit. And as experiments develop, so too will techniques to simulate them; we can expect record-setting quantum devices and classical algorithms in the near future to take turns in challenging each other for the top spot,” said Brod.

“It might not be the end of the story,” he continued. But the new study “is a leap forward for quantum physics in this race.”

Image Credit: geralt

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.
