“That’s one small step for man, one giant leap for mankind.”

When Neil Armstrong stepped onto the dusty surface of the moon on July 20, 1969, it was a victory for NASA and a victory for science.

Backed by billions and led by NASA, the Apollo project is hardly the only government-organized science initiative to change the world. Two decades earlier, the Manhattan Project produced the first atomic bomb and restructured modern warfare; three decades later, the Human Genome Project published the blueprint to our DNA, heralding the dawn of population genomics and personalized medicine.

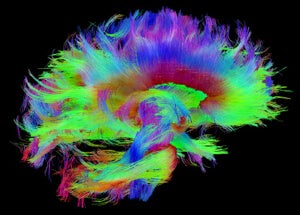

In recent years, big science has become increasingly focused on the brain. And now the government is pushing forward what is perhaps the most high-risk, high-reward project of our time: brain-like artificial intelligence.

And they’re betting $100 million that they’ll succeed in the next decade.

Led by IARPA and a part of the larger BRAIN Initiative, the MICrONS (Machine Intelligence from Cortical Networks) project seeks to revolutionize machine learning by reverse engineering algorithms of the mammalian cortex.

It’s a hefty goal, but one that may sound familiar. The controversial European Union-led Human Brain Project (HBP), a nearly $2 billion investment, also sits at the crossroads between brain mapping, computational neuroscience and machine learning.

But MICrONS is fundamentally different, both technically and logistically.

Rather than building a simulation of the human brain, which the HBP set out to do, MICrONS is working in reverse. By mapping out the intricate connections that neurons form during visual learning and observing how they change with time, the project hopes to distill sensory computation into mathematical “neural codes” that can be fed into machines, giving them the power to identify, discriminate, and generalize across visual stimuli.

The end goal: smarter machines that can process images and video at human-level proficiency. The bonus: better understanding of the human brain and new technological tools to take us further.

It’s a solid strategy. And the project’s got an all-star team to see it through. Here’s what to expect from this “Apollo Project of the Brain.”

The Billion-Dollar Problem

Much of today’s AI is inspired by the human brain.

Take deep reinforcement learning, a strategy based on artificial neural networks that’s transformed AI in the last few years. This class of algorithm powers much of today’s technology: self-driving cars, Go-playing computers, automated voice and facial recognition — just to name a few.

Nature has also inspired new computing hardware, such as IBM’s neuromorphic SyNAPSE chip, which mimics the brain’s computing architecture and promises lightning-fast computing with minimal energy consumption.

As sophisticated as these technologies are, however, today’s algorithms are embarrassingly brittle and fail at generalization.

When trained on the features of a person’s face, for example, machines often fail to recognize that face if it’s partially obscured, shown at a different angle or under different lighting conditions.

In stark contrast, humans have a knack for identifying faces. What’s more, we subconsciously and rapidly build a model of what constitutes a human face, and can easily tell whether a new face is human or not — unlike some photo-tagging systems.

Even a rough idea of how the brain works has given us powerful AI systems. MICrONS takes the logical next step: instead of guessing, let’s figure out how the brain actually works, find out what AI’s currently missing, and add it in.

See the World in a Grain of Sand

To understand how a computer works, you first need to take it apart, see the components, trace the wiring.

Then you power it up, and watch how those components functionally interact.

The same logic holds for a chunk of brain tissue.

MICrONS plans to dissect one cubic millimeter of mouse cortex at nanoscale resolution. And it’s recruited Drs. David Cox and Jeff Lichtman, both neurobiologists from Harvard University, to head the task.

Last July, Lichtman published the first three-dimensional complete reconstruction of a crumb-sized cube of mouse cortex. The effort covered just 1,500 cubic microns, roughly 600,000 times smaller than MICrONS’ goal.
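To get a feel for that jump in scale, the arithmetic can be sketched in a few lines. This is only a back-of-the-envelope check: it assumes the target is a full cube one millimeter on a side, and the variable names are made up for illustration. Under those assumptions the ratio comes out near 670,000, in the same ballpark as the rough figure above.

```python
# Assumption: the target volume is a cube 1 mm on a side, and 1 mm = 1,000 microns.
MICRONS_PER_MM = 1_000

target_volume_um3 = MICRONS_PER_MM ** 3   # 1 cubic millimeter, in cubic microns
earlier_volume_um3 = 1_500                # volume of the earlier reconstruction

# How many times larger the new target is than the earlier reconstruction.
scale_factor = target_volume_um3 / earlier_volume_um3

print(f"{target_volume_um3:,} cubic microns vs {earlier_volume_um3:,}")
print(f"scale factor: about {scale_factor:,.0f}x")
```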

It’s an incredibly difficult multi-step procedure. First, the team uses a diamond blade to slice the cortex into thousands of pieces. Then the pieces are rolled onto a strip of special plastic tape at a rate of 1,000 sections a day. These ultrathin sections are then imaged with a scanning electron microscope, which can capture synapses in such fine detail that tiny vesicles containing neurotransmitters in the synapses are visible.

Mapping the tissue to this level of detail is like “creating a road map of the U.S. by measuring every inch,” MICrONS project manager Jacob Vogelstein told Scientific American.

Lichtman’s original reconstruction took over six long years.

That said, the team is optimistic. According to Dr. Christof Koch, president of the Allen Institute for Brain Science, various technologies involved in the process will speed up tremendously, thanks to new tools developed under the BRAIN Initiative.

Lichtman and Cox hope to make tremendous headway in the next five years.

Function Follows Form

Simply mapping the brain’s static roadmap isn’t enough.

Not all neurons and their connections are required to learn a new skill or piece of information. What’s more, the learning process physically changes how neurons are wired to each other. It’s a dynamic system, one in constant flux.

To really understand how neurons are functionally connected, we need to see them in action.

“We’re hoping to observe the activity of 100,000 neurons simultaneously while a mouse is learning a new visual task,” explained Cox. It’s like wiretapping the brain: the scientists will watch neural computations happen in real time as the animal learns.

To achieve the formidable task, Cox plans on using two-photon microscopy, which relies on fluorescent proteins that only glow in the presence of calcium. When a neuron fires, calcium rushes into the cell and activates those proteins, and their light can be observed with a laser-scanning microscope. This gives scientists a direct visual of neural network activation.
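For readers who want to see what “watching neurons light up” looks like as data, here is a minimal sketch of one common way such recordings are summarized: the relative change in fluorescence over a baseline, often written ΔF/F. Everything here is an illustrative assumption rather than the team’s actual pipeline: the trace is synthetic, the baseline is simply the median, and the 0.5 threshold and function names are hypothetical.

```python
from statistics import median

def delta_f_over_f(trace):
    """Return (F - F0) / F0 for each sample, using the median as baseline F0."""
    f0 = median(trace)
    return [(f - f0) / f0 for f in trace]

def active_frames(trace, threshold=0.5):
    """Indices of frames whose dF/F exceeds the (hypothetical) threshold."""
    return [i for i, v in enumerate(delta_f_over_f(trace)) if v > threshold]

# Synthetic fluorescence trace (arbitrary units): a quiet baseline near 100
# with one brief calcium transient that starts at frame 4 and then decays.
trace = [100, 101, 99, 100, 180, 160, 130, 100, 99, 101]

print(active_frames(trace))  # frames where the cell is flagged as active
```

Real recordings are far noisier and involve many cells at once, so practical pipelines use more careful baselines and event detection than this toy threshold.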

The technique’s been around for a while. But so far, it’s only been used to visualize tiny portions of neural networks. If Cox successfully adapts it for wide-scale imaging, it may well be revolutionary for functional brain mapping and connectomics.

From Brain to Machine

Meanwhile, MICrONS project head Dr. Tai Sing Lee at Carnegie Mellon University is taking a faster — if untraveled — route to map the mouse connectome.

According to Scientific American, Lee plans to tag synapses with unique barcodes — a short chain of random nucleotides, the molecules that make up our DNA. By chemically linking these barcodes together across synapses, he hopes to quickly reconstruct neural circuits.
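The idea is easier to picture as a data problem. The sketch below assumes, purely for illustration, that each neuron carries one known barcode and that sequencing yields a list of joined barcode pairs, one per tagged synapse. The barcodes, neuron names, and `build_connectome` function are all hypothetical, and the real chemistry and error handling are far more involved.

```python
from collections import defaultdict

def build_connectome(barcode_of, joined_pairs):
    """Turn joined barcode pairs into a wiring diagram.

    barcode_of:   dict mapping neuron name -> its (hypothetical) barcode
    joined_pairs: list of (presynaptic_barcode, postsynaptic_barcode) reads
    Returns a dict mapping each neuron to a sorted list of its targets.
    """
    neuron_of = {bc: name for name, bc in barcode_of.items()}
    graph = defaultdict(set)
    for pre_bc, post_bc in joined_pairs:
        # Skip reads containing a barcode we cannot assign to any neuron.
        if pre_bc in neuron_of and post_bc in neuron_of:
            graph[neuron_of[pre_bc]].add(neuron_of[post_bc])
    return {name: sorted(targets) for name, targets in graph.items()}

# Toy data: three neurons, with one duplicate read and one unreadable barcode.
barcode_of = {"A": "ACGT", "B": "TTAG", "C": "GGCA"}
joined_pairs = [
    ("ACGT", "TTAG"),
    ("ACGT", "GGCA"),
    ("TTAG", "GGCA"),
    ("ACGT", "TTAG"),  # duplicate read of the same connection
    ("ACGT", "NNNN"),  # unknown barcode, ignored
]

print(build_connectome(barcode_of, joined_pairs))
```

The appeal of this kind of approach is that reconstructing the circuit becomes a lookup-and-count exercise on sequencing reads rather than a tracing exercise on images.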

If it works, the process will be much faster than nanoscale microscopy and may give us a rough draft of the cortex (one cubic millimeter of it) within the decade.

Lee is a computer scientist and machine learning expert, and his formidable skills will likely come into play during the next phase of the project: making sense of all the data and extracting information useful for developing new AI algorithms.

Going from neurobiological data to theories to computational models will be the really tough part. But according to Cox, there is one guiding principle that’s a good place to start: Bayesian inference.

During learning, the cortex actively integrates past experiences with present learning, building a constantly shifting representation of the world that allows us to predict incoming data and possible outcomes.
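In its simplest textbook form, that kind of updating is Bayes’ rule: a prior belief is multiplied by how well each hypothesis explains the new evidence, then renormalized. The sketch below is a toy illustration only, not a model of the cortex or of anything the MICrONS teams have built. The two hypotheses, the probabilities, and the `bayes_update` name are assumptions chosen for the example.

```python
def bayes_update(prior, likelihood):
    """Apply Bayes' rule over a discrete set of hypotheses.

    prior:      dict mapping hypothesis -> probability before the evidence
    likelihood: dict mapping hypothesis -> P(evidence | hypothesis)
    Returns the normalized posterior as a dict.
    """
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}

# Start undecided about whether an image patch contains a face.
prior = {"face": 0.5, "not_face": 0.5}

# Hypothetical evidence: an eye-like feature, seen far more often in faces.
likelihood = {"face": 0.8, "not_face": 0.2}

after_one = bayes_update(prior, likelihood)
# Yesterday's posterior becomes today's prior when more evidence arrives.
after_two = bayes_update(after_one, likelihood)

print(after_one["face"], after_two["face"])
```

Each round of evidence shifts the belief further, which is the sense in which past experience shapes how new input is interpreted.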

It’s likely that whatever algorithms the teams distill are Bayesian in nature. If they succeed, the next step is to thoroughly test their reverse-engineered models.

Vogelstein acknowledges that many current algorithms already rely on Bayesian principles. The crucial difference between what we have now and what we may get from mapping the brain is implementation.

“There are millions of choices that a programmer makes to translate Bayesian theory into executable code,” says Vogelstein. Some will be good, others not so much. Instead of guessing those parameters and features in software as we have been doing, it makes sense to extract those settings from the brain and narrow down optimal implementations to a smaller set that we can test.

Using this data-based ground-up approach to brain simulation, MICrONS hopes to succeed where HBP stumbled.

“We think it’s a critical challenge,” says Vogelstein. If MICrONS succeeds, it may “achieve a quantum leap in machine learning.”

For example, we may finally understand how the brain learns and generalizes with only one example. Cracking one-shot learning would circumvent the need for massive training data sets. This sets up the algorithms for functioning in real-world scenarios, which often can’t produce sufficient training data or give the AI enough time to learn.

Finally achieving human-like vision would also allow machines to parse complex scenes, such as those captured by surveillance cameras.

Think of the implications for counterterrorism and cybersecurity. AI systems powered by brain-like algorithms will likely have “transformative impact for the intelligence community as well as the world more broadly,” says Vogelstein.

Lichtman is even more optimistic.

“What comes out of it — no matter what — it’s not a failure,” he says, “The brain [is] … really complicated, and no one’s ever really seen it before, so let’s take a look. What’s the risk in that?”

Image credit: Shutterstock.com